As part of the bug fixes, I would like to talk about four issues that all share the same uncomfortable solution.

1) Changing the HUD colour (a favourite request, which apparently requires an overhaul of the whole game code to make the HUD colour-code “changeable”).

2) Problems with overlapping control binds for FSS/DSS/“Store preview”, where you are told that “that” bind is already used for some “basic helm function”, even when the helm isn’t theoretically accessible.

This has more to do with the new screens being tacked on as overlays of “the cockpit”, rather than each new “function” being a separate state tied to the active view/screen/UI, or whatever you want to call it.

3) Convenience for 3rd-party macro/control tools like VoiceAttack, so they can call the “control function” directly instead of “pressing the mapped key”.

(That ship function may or may not HAVE a key mapped to it, and the mapped button/key may change over time, so every change has to be propagated to the macros manually.)

4) Future-proofing

The plan

1) Rip out the direct key mappings to ship functions.

2) Create an abstract “event/listener” system for the “ship” and other game functions.

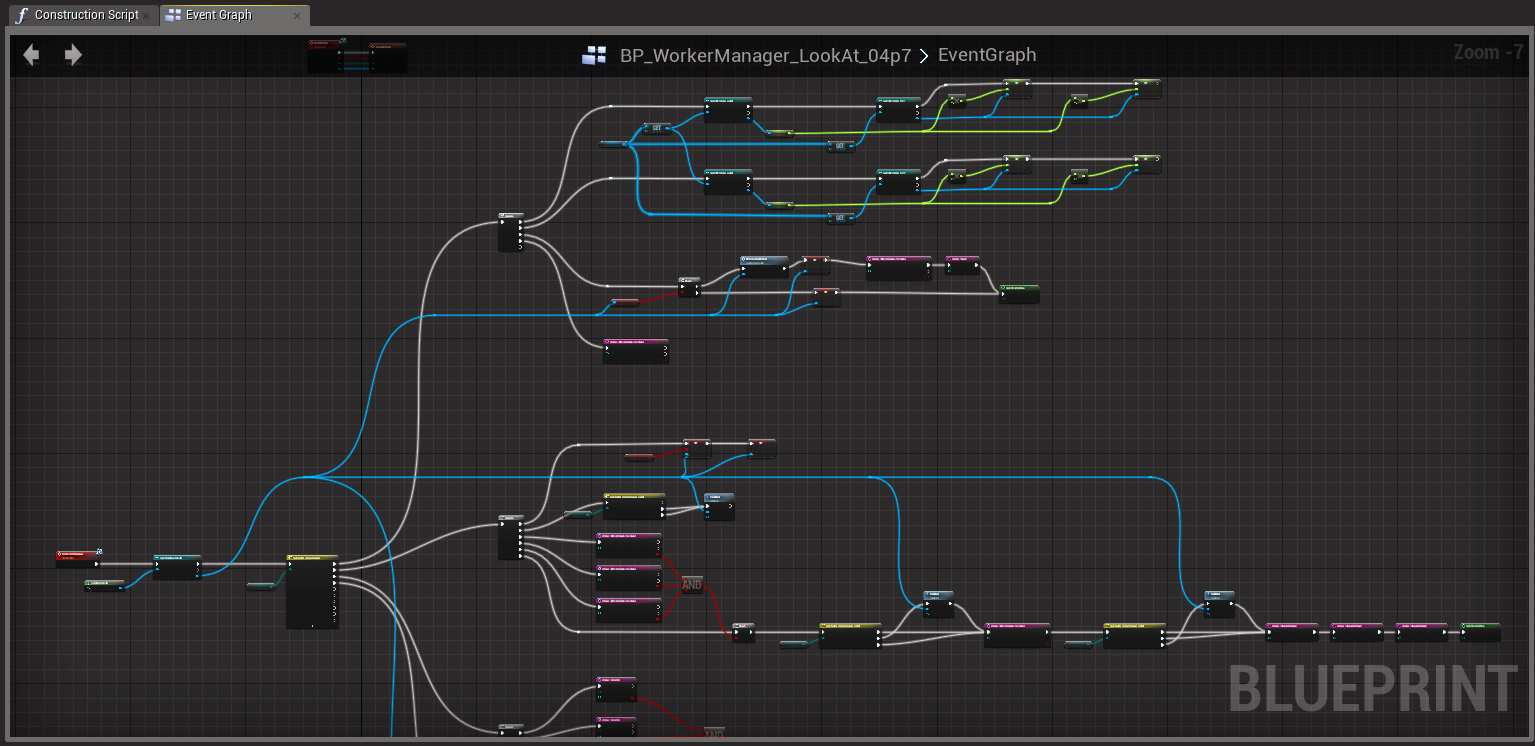

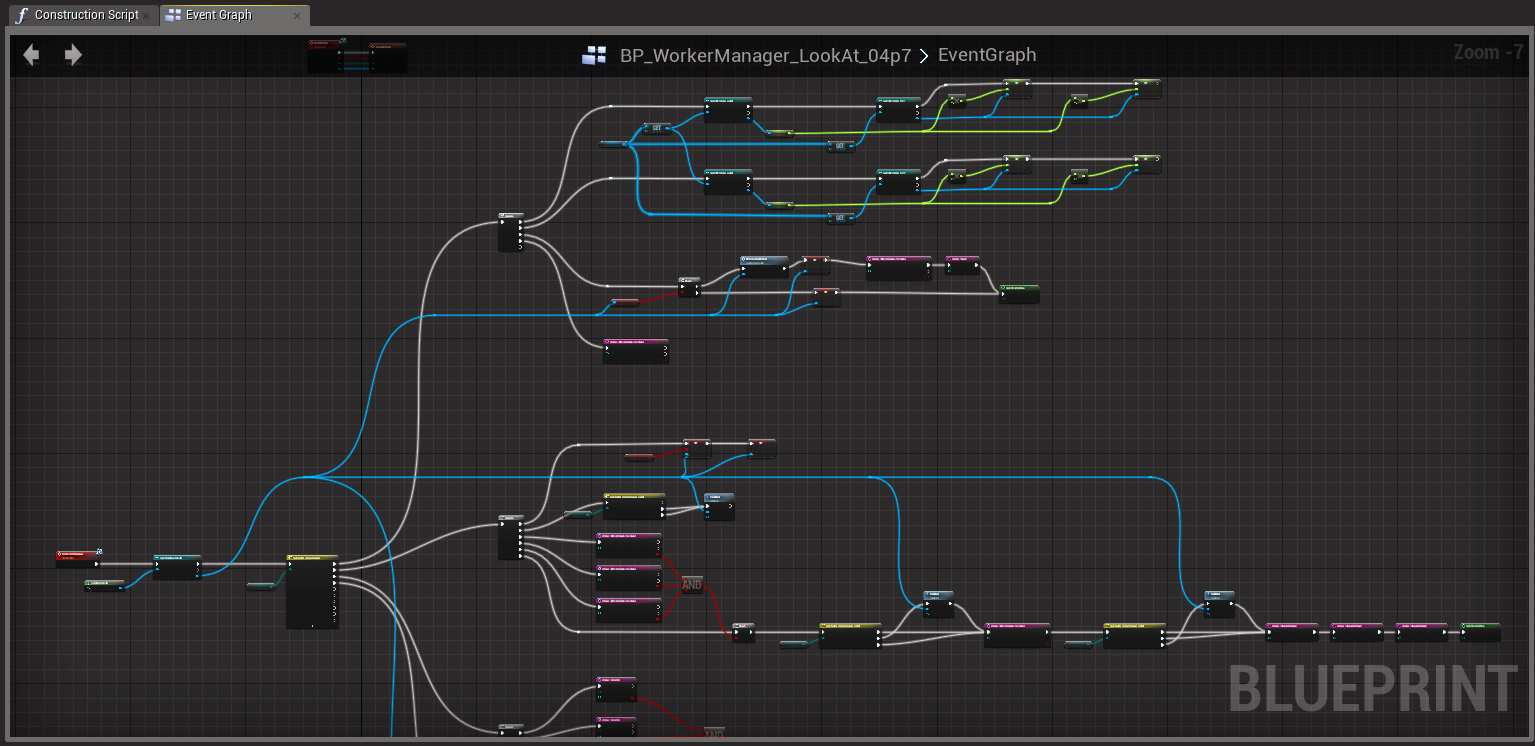

3) Create a node-graph where ALL the game event calls can be managed.

4) Allow for plug-and-play grouping and hierarchies of the function calls, define which control schemes are “allowed” to be used where, and allow crossovers (so how we navigate a menu is still the same in the codex as it is on the internal/external panels).

This gives designers and controls devs more visual clues, without the need to dig into specific game code and re-implement a UI.

So, for example, the Galaxy map control system and the system orrery controls can be “the same” even though they were created at different times.
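Steps 1 and 2 of the plan could look roughly like the sketch below. This is only an illustration under my own assumptions, not how the game is actually written: `EventBus`, `Ship`, the event name `ship.landing_gear.toggle` and the keys are all made up.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Listener = Callable[[], None]


class EventBus:
    """Routes named events to whichever listeners subscribed to them."""

    def __init__(self) -> None:
        self._listeners: DefaultDict[str, List[Listener]] = defaultdict(list)

    def subscribe(self, event: str, listener: Listener) -> None:
        self._listeners[event].append(listener)

    def emit(self, event: str) -> int:
        """Call every listener for `event`; return how many were called."""
        listeners = list(self._listeners.get(event, []))
        for listener in listeners:
            listener()
        return len(listeners)


class Ship:
    """Hypothetical ship with a single function, for illustration only."""

    def __init__(self) -> None:
        self.landing_gear_down = False

    def toggle_landing_gear(self) -> None:
        self.landing_gear_down = not self.landing_gear_down


bus = EventBus()
ship = Ship()
bus.subscribe("ship.landing_gear.toggle", ship.toggle_landing_gear)

# Key binds are now plain data: a key maps to an event name, not to ship code.
keybinds: Dict[str, str] = {"L": "ship.landing_gear.toggle"}


def on_key_press(key: str) -> bool:
    """Translate a key press into an event; unbound keys do nothing."""
    event = keybinds.get(key)
    if event is None:
        return False
    return bus.emit(event) > 0


on_key_press("L")  # gear goes down through the original bind
keybinds = {"G": "ship.landing_gear.toggle"}  # rebinding touches no ship code
on_key_press("G")  # gear goes back up through the new bind
```

The point of the split is that the ship only knows about events, and the bind table only knows about event names, so either side can change without the other noticing.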

4) Future-proofing

- Ease of code management / debugging for engine updates.

- Having an open API system that lets ship functions be called directly would free up devs internally AND externally. (It would also allow injection cheats.)

- Streamlining UIs by making structure and groupings easy to see.

- Addition of a new player menu

- Addition of new vehicle & ship menus

- Addition of new game features with their own specific UIs and control schemes

- A crazy need to have multiple UIs in different places that activate the same function inside the ship.

- Example: a “cargo hatch open/close” button ON the cargo hatch in the cargo bay, when we get space legs
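The cargo hatch example could be sketched like this: two buttons in different places, one function, one shared state. Everything here (`CargoHatch`, `Button`, the locations) is hypothetical and only meant to show the idea.

```python
from typing import Callable, List


class CargoHatch:
    """Hypothetical ship function that owns the one true hatch state."""

    def __init__(self) -> None:
        self.is_open = False
        self._observers: List[Callable[[bool], None]] = []

    def on_change(self, observer: Callable[[bool], None]) -> None:
        self._observers.append(observer)

    def toggle(self) -> None:
        self.is_open = not self.is_open
        # Tell every UI that shows the hatch state, wherever it lives.
        for observer in self._observers:
            observer(self.is_open)


class Button:
    """Any UI element that triggers one action and mirrors its state."""

    def __init__(self, location: str, action: Callable[[], None]) -> None:
        self.location = location
        self.action = action
        self.lit = False

    def press(self) -> None:
        self.action()

    def show_state(self, is_open: bool) -> None:
        self.lit = is_open


hatch = CargoHatch()
cockpit_button = Button("cockpit panel", hatch.toggle)
bay_button = Button("cargo bay, next to the hatch", hatch.toggle)
for button in (cockpit_button, bay_button):
    hatch.on_change(button.show_state)

cockpit_button.press()  # opened from the pilot's seat; both buttons light up
```

Neither button knows the other exists. Adding a third one somewhere else in the ship would be one more `Button(...)` line, not a new UI implementation.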

It all starts with bug-fixing the overlapping screens and their key-bind clashes (problem 2).

Creating a node-based state/flow system of the UI, which devs can organize and regroup on the fly in a separate in-game window, will help them manage “which UI state is currently active” and “which control binds are valid”.
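As a rough sketch of what such a graph would have to answer, assume each node is a UI state that either falls through to its parent's binds or replaces them. `UiNode`, the `exclusive` flag and all the key and event names below are my own invention for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class UiNode:
    """One node in a hypothetical UI state graph."""

    name: str
    parent: Optional["UiNode"] = None
    binds: Dict[str, str] = field(default_factory=dict)  # key -> event name
    exclusive: bool = False  # True: do not fall through to the parent's binds

    def valid_binds(self) -> Dict[str, str]:
        """Binds usable while this node is the active UI state."""
        inherited: Dict[str, str] = {}
        if self.parent is not None and not self.exclusive:
            inherited = self.parent.valid_binds()
        # Binds declared on this node win over inherited ones.
        return {**inherited, **self.binds}


# A shared group of menu-navigation binds, reused by several screens.
MENU_NAVIGATION = {"W": "menu.up", "S": "menu.down", "Space": "menu.select"}

cockpit = UiNode("cockpit", binds={"W": "helm.pitch_down", "L": "helm.landing_gear"})
side_panel = UiNode("side_panel", parent=cockpit, binds={"Space": "menu.select"})
fss = UiNode("fss", parent=cockpit, binds={"W": "fss.tune_up"}, exclusive=True)
galaxy_map = UiNode("galaxy_map", parent=cockpit,
                    binds=dict(MENU_NAVIGATION), exclusive=True)
orrery = UiNode("orrery", parent=cockpit,
                binds=dict(MENU_NAVIGATION), exclusive=True)


def resolve(active: UiNode, key: str) -> Optional[str]:
    """Return the event a key triggers in the active state, if any."""
    return active.valid_binds().get(key)
```

With this, `resolve(fss, "W")` gives `fss.tune_up` and `resolve(fss, "L")` gives nothing, because the FSS is its own state and the helm binds are simply not valid there. The galaxy map and the orrery both take their binds from the same `MENU_NAVIGATION` group, so they cannot drift apart.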

Before the binds are even set, the abstract event calls and listeners are set up from the node-graph interface, so functions can be unit-tested prior to baking out an entire UI implementation.
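To show what testing a function with no UI and no binds might look like, here is a small sketch using Python's standard `unittest`. `ShipFunctions`, the event name and the supercruise rule are assumptions made for the example, not the game's real rules.

```python
import unittest
from typing import Callable, Dict


class ShipFunctions:
    """Hypothetical registry of named ship functions, with no UI attached."""

    def __init__(self) -> None:
        self.hardpoints_deployed = False
        self.in_supercruise = False
        self._handlers: Dict[str, Callable[[], bool]] = {
            "ship.hardpoints.toggle": self._toggle_hardpoints,
        }

    def call(self, event: str) -> bool:
        """Run the handler for `event`; return True if the ship state changed."""
        handler = self._handlers.get(event)
        return handler() if handler is not None else False

    def _toggle_hardpoints(self) -> bool:
        # Assumed rule for this sketch only: no hardpoints in supercruise.
        if self.in_supercruise:
            return False
        self.hardpoints_deployed = not self.hardpoints_deployed
        return True


class HardpointTests(unittest.TestCase):
    def test_toggle_deploys_hardpoints(self) -> None:
        ship = ShipFunctions()
        self.assertTrue(ship.call("ship.hardpoints.toggle"))
        self.assertTrue(ship.hardpoints_deployed)

    def test_toggle_is_refused_in_supercruise(self) -> None:
        ship = ShipFunctions()
        ship.in_supercruise = True
        self.assertFalse(ship.call("ship.hardpoints.toggle"))
        self.assertFalse(ship.hardpoints_deployed)

    def test_unknown_event_is_ignored(self) -> None:
        self.assertFalse(ShipFunctions().call("ship.does_not_exist"))


def run_tests() -> bool:
    """Run the suite in-process and report whether everything passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(HardpointTests)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()
```

Calling `run_tests()` exercises the function purely through its event name, which is exactly what a key bind, a cockpit panel or a macro would end up doing later.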

However, this also starts chipping away at problem 3, as you are creating an abstraction layer to communicate “event calls”. That would facilitate an open API, allowing control schemes like VoiceAttack and other macro systems to access a function directly, and not just press the key.
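A sketch of what such an open API could look like from the game's side, under my own assumptions. `ActionApi` and the action names are invented, and this does not show how VoiceAttack or any real tool would actually connect to it. The `public` flag is one possible answer to the cheating concern: only whitelisted functions are callable from outside.

```python
from typing import Callable, Dict, List, Set


class ActionApi:
    """Hypothetical public entry point that external tools could call."""

    def __init__(self) -> None:
        self._actions: Dict[str, Callable[[], None]] = {}
        self._public: Set[str] = set()

    def register(self, name: str, handler: Callable[[], None],
                 public: bool = True) -> None:
        self._actions[name] = handler
        if public:
            self._public.add(name)

    def list_actions(self) -> List[str]:
        """Names an external tool is allowed to call."""
        return sorted(self._public)

    def invoke(self, name: str) -> bool:
        """Run a public action by name; refuse unknown or internal ones."""
        if name not in self._public:
            return False
        self._actions[name]()
        return True


state = {"night_vision": False, "credits": 1000}


def toggle_night_vision() -> None:
    state["night_vision"] = not state["night_vision"]


def add_credits() -> None:
    state["credits"] += 1_000_000


api = ActionApi()
api.register("ship.night_vision.toggle", toggle_night_vision)
api.register("debug.add_credits", add_credits, public=False)  # internal only

keybinds: Dict[str, str] = {}  # the player has bound no key to night vision

# A voice macro calls the function by name; no key is pressed or even bound.
api.invoke("ship.night_vision.toggle")
```

Because the macro refers to the function name and not to a key, rebinding or unbinding keys in the options menu never breaks it.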

But this is a mammoth undertaking and requires a restructuring of the entire UI code, which in turn is what the devs claim the UI colour change would need.
