So last year I decided to get a new monitor, and after going through reviews and specs I settled on the Dell S2417DG. Unfortunately it didn't quite offer a good experience.

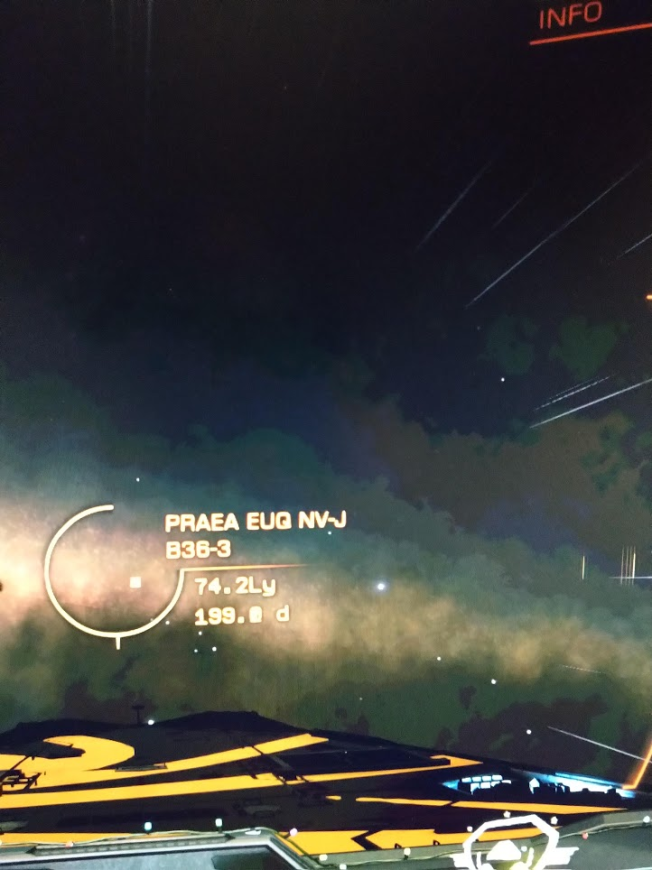

Sadly ED seems to be the game most affected by this, since the problem only occurs on dark gradients. In other games the monitor's performance is up to par.

I've tried googling the issue, but it seems to be too much of an edge case for the results to help me choose a replacement that won't have the same issue.

I temporarily worked around the issue with ReShade, but I kinda want to solve the underlying issue rather than blurring the problem out with a post-processing filter.

Fellow commanders, what are your recommendations?
