my CPU does not have a bottleneck with the GPU

Your CPU will bottleneck a 2080 at 1080p (expect it to sit at 98-100% usage in modern games!). You'll still get good frame rates in most games, but your CPU can't prepare frames fast enough to keep the 2080 fed (its IPS, instructions per second, is just not high enough to keep up with modern cards), which caps the FPS that card could otherwise deliver.

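The CPU has roughly the same amount of work to do per frame at any resolution, but at 1080p the GPU finishes each frame much faster, so the CPU becomes the limit. That's why you lose more of the card's performance at the lower resolution. It sounds illogical, but you do need a faster CPU for 1080p than you would for 4K, because at 4K your card does more of the heavy lifting!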
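To put rough numbers on that, here is a minimal sketch of the idea: the frame rate you actually get is capped by whichever of the CPU or GPU is slower. The function names and every FPS figure below are made up for illustration (they are not measurements of a 4790K or a 2080, and this is not how any particular bottleneck calculator works).

```python
def delivered_fps(cpu_fps_limit: float, gpu_fps_limit: float) -> float:
    """Frame rate you actually see: the slower of the two sets the pace."""
    return min(cpu_fps_limit, gpu_fps_limit)


def bottleneck_percent(cpu_fps_limit: float, gpu_fps_limit: float) -> float:
    """Share of the GPU's potential frame rate lost because the CPU is slower."""
    if gpu_fps_limit <= cpu_fps_limit:
        return 0.0  # GPU is the limit, so the CPU is not holding it back
    return 100.0 * (gpu_fps_limit - cpu_fps_limit) / gpu_fps_limit


# Hypothetical numbers: the CPU can prepare about 90 frames per second
# regardless of resolution, while the GPU's limit drops as resolution rises.
CPU_LIMIT = 90.0
GPU_LIMITS = {"1080p": 120.0, "4K": 50.0}

for resolution, gpu_limit in GPU_LIMITS.items():
    fps = delivered_fps(CPU_LIMIT, gpu_limit)
    loss = bottleneck_percent(CPU_LIMIT, gpu_limit)
    print(f"{resolution}: {fps:.0f} FPS delivered, {loss:.0f}% CPU bottleneck")
```

With these assumed figures the CPU costs the card a quarter of its potential at 1080p and nothing at 4K, because at 4K the GPU is already the slower part.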

It's the reason I retired my 4790K last year for a Ryzen 3600. It was bottlenecking my 3070 (which is about the same as your 2080). Mine was no silicon lottery winner and would only be comfortable at 4.6 GHz on all cores, but it still lasted me so, so many years!

With an RTX 2080 you'll be getting about a 20-30% bottleneck at 1080p and about 0-5% at 4K. Check out this link (Link to Bottleneck Calculator).

The Haswell/Devil's Canyon chips were amazing, but unfortunately they are really starting to show their age when paired with new mid to high end cards. They simply cannot keep up! It was seeing the i3 10100F beat my 4790K in FPS that made me decide to go for something a little newer. (Link to i3 10100f vs i7 4790k).

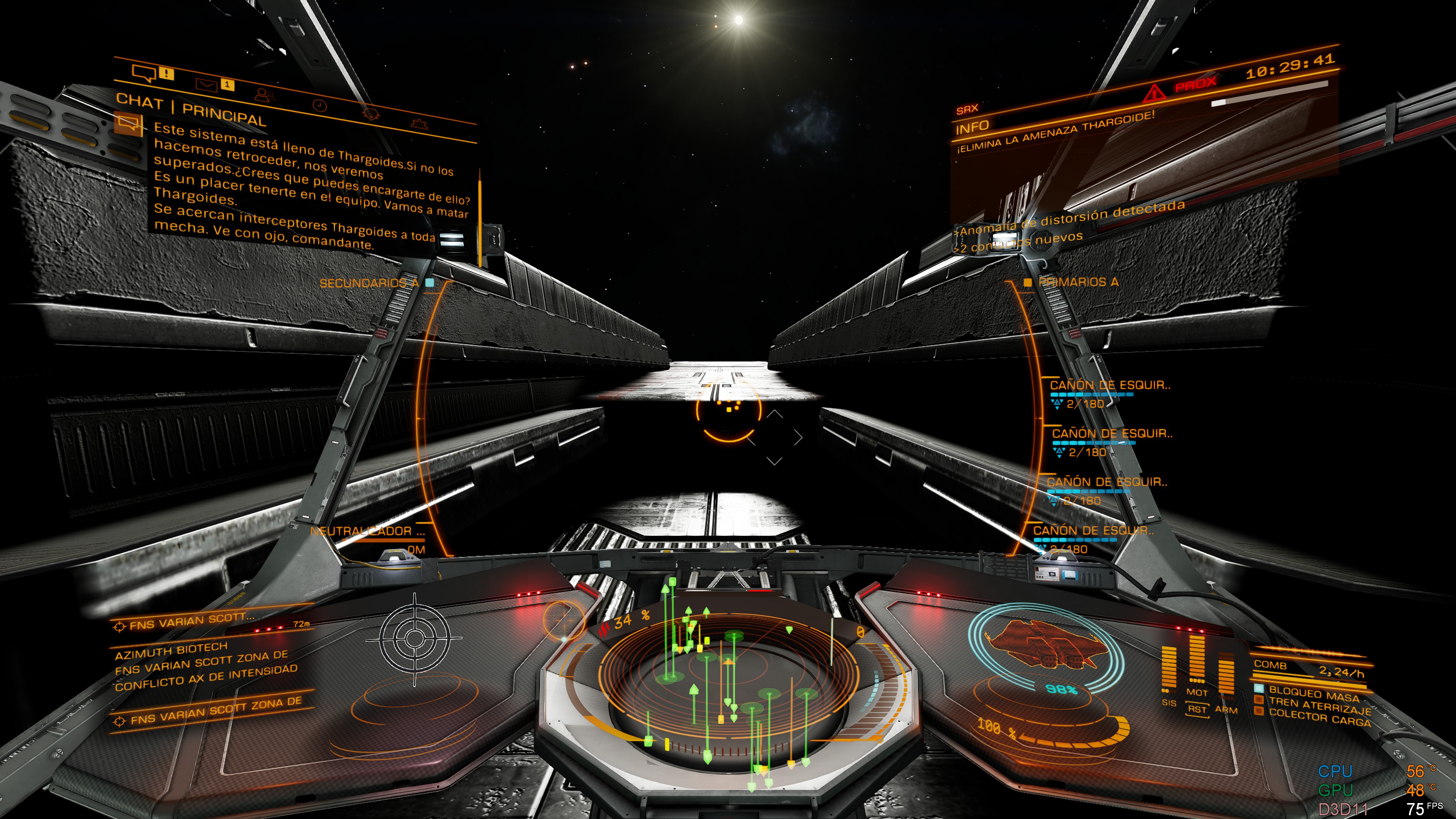

This isn't saying that your CPU is incapable or now worthless. It just means that it limits what your card could do if your CPU had a higher IPS. One thing I have always suggested about upgrades: if you're getting the frames and smoothness you're happy with, then don't spend £1000 to gain an extra 30-50 FPS when you're already getting what your monitor can display. As you can see below, my old i7 4790K and a 1070 could run Horizons comfortably at 7680x1440, but the 3070 was held back until I got the Ryzen 3600.

Elite Horizons CPU I7 4790k GPU GTX 1070 7680x1440

Source: https://www.youtube.com/watch?v=zbinImBkjNA

Elite Odyssey CPU Ryzen 3600 GPU RTX 3070 7680x1440

Source: https://www.youtube.com/watch?v=8ivT7kj4J18
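As a rough way to sanity-check the upgrade advice above, here is a small sketch that asks how many extra frames you would actually see after an upgrade, assuming (as a simplification) that anything above your monitor's refresh rate is never displayed. The function name and all numbers are hypothetical, not benchmarks.

```python
def visible_fps_gain(current_fps: float, upgraded_fps: float, refresh_hz: float) -> float:
    """Extra frames per second the monitor can actually show after an upgrade.

    Assumes frames rendered above the refresh rate are not displayed.
    """
    shown_before = min(current_fps, refresh_hz)
    shown_after = min(upgraded_fps, refresh_hz)
    return max(0.0, shown_after - shown_before)


# Hypothetical example: going from 70 FPS to 110 FPS.
print(visible_fps_gain(70.0, 110.0, refresh_hz=60.0))   # 60 Hz monitor
print(visible_fps_gain(70.0, 110.0, refresh_hz=144.0))  # 144 Hz monitor
```

Under that assumption the same 40 FPS jump shows nothing extra on a 60 Hz monitor but all 40 frames on a 144 Hz one, which is the point about not paying for frames your monitor cannot display.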