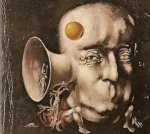

I Have No Mouth, And I Must Scream is a 1967 post-apocalyptic sci-fi short story by Harlan Ellison.

It deals with, amongst other things, the philosophical issues surrounding the (im)morality of creating self-aware conscious entities that are 'locked in' - intelligent, hyper-aware - yet unable to interact in any meaningful way with the external world, and how this severe restriction might affect their state of 'mind'.

"Tell me Mister Anderson, what good is a phone call, if you are unable to speak?"

The recent aeon.co article, 'Consciousness Creep' by George Musser, deals with a related concept: given the difficulty we have in even defining or detecting what 'consciousness' is, humans may already have created (or be very close to creating) self-aware entities which we would be ill-equipped to recognise as self-aware.

The multi-layered Black Mirror Episode 'White Christmas' also deals with the (im)morality of creating locked-in/simulated consciousnesses and the manner in which humans might view such self-aware creations, not to mention the effect on the simulated consciousnesses of being unable to interact with the external world in any meaningful way...

This thread was prompted by a request from Hanni79 following a comment regarding a potentially self-aware Competition Pro joystick [wacko]

The intention in starting this thread is simply to share ideas on this fascinating, and - given Moore's Law - timely topic.

For instance:

- How do you feel about killing NPCs?

- How would you feel if they were able to feel fear and taste the vacuum of space as your beam laser delivers them to oblivion?

- Do you know any particularly good (and preferably lesser-known) works of fiction in this genre that you would like to share?

- Do you think a non-biological entity can ever be truly conscious?

- Will we ever even be able to define or detect consciousness - human or not?

- Once a single-celled biological entity evolves from inert matter, is it just a few millennia from the abacus to self-aware, self-replicating machines?

- Are such machines the inevitable outcome of evolution throughout the universe?

Feel free to jump into the discussion - but remember - electronic communication of ideas only within this globally-connected hive mind...

No speaking (or screaming) :x
