play with your builds here and check bottleneck etc.

If I went with RTX 4070, the CPU isn't going to be a bottleneck? I was under the impression i5-12400F was kind of mid-range while RTX 4070 and up are pretty high end.

I hadn't kept up with AMD numbering. My last computer that used AMD was over ten years ago. I have fond memories of not having to turn the heating on when I played games in the winter and was still sweating.

Game Discussions Bethesda Softworks Starfield Space RPG

- Thread starter Lngjohnsilver1

- Tags

- space rpg

If I went with RTX 4070, the CPU isn't going to be a bottleneck? I was under the impression i5-12400F was kind of mid-range while RTX 4070 and up are pretty high end.

Hard to give any firm forecast without the game being released, but the 12400F is significantly faster than the CPUs in the recommended requirements. If they are targeting 60 fps with recommended specs, you'll easily surpass that (unless something is very wrong with your system). Once your desired frame rate floor is achieved, then you can shift any bottleneck to the GPU by running better graphics settings. A 12400F might only be capable of delivering half the frame rate of a 13900K or 7800X3D, in wholly CPU limited scenarios, but chances are that unless you're running 1080p or below, it will be easy to make the GPU a limiting factor, even if you had an RTX 4090.

Unless there are unusual performance anomalies (like those that occurred with Odyssey) I would only expect performance issues related to your CPU if you're targeting fairly extreme frame rates, or plan on doing significant multi-tasking while playing.

As per the comment from the devs, it will be locked to 30 fps, and if that holds water I'm sure us low and mid tier PC owners will do just fine. An RTX 2080 and RX 6800 XT are not as good as an RTX 4090 Ti, but would you get a better experience playing with a monster GPU? I don't think so. I've been playing many new games with my old GTX 1080 OC; CP2077 was where I noticed that I needed a new GPU.

As per the comment from the devs, it will be locked to 30 fps

I'm fairly certain that was console specific, which makes sense given the CPU and memory systems they have, and exists to ensure stable frame rates even in worst case scenarios. I do not expect a 30 fps cap on the PC version. And unless they've done something silly like tie physics to render fps (again), there shouldn't be any frame rate cap on PC. Nor should it be difficult for PC to leverage higher frame rates.

Even if the PC version is somewhat less well optimized and/or suffers from a bit more overhead, a monolithic ~4.4GHz 6c/12t Alder Lake (an i5-12400F) with its own dedicated pool of DDR4 or DDR5 has slightly superior aggregate performance, much better lightly threaded performance, and significantly lower memory latency than an ~3.6GHz dual-CCX 8c/16t Zen 2 that is tied to a unified pool of much higher latency GDDR6 (the Xbox Series X SoC).

AMD actually sells salvaged PS5 SoCs (which are extremely similar, CPU and memory subsystem wise, to the Xbox Series X SoCs) with disabled GPU components as the Ryzen 4700S kit: https://www.amd.com/en/desktop-kits/amd-4700s

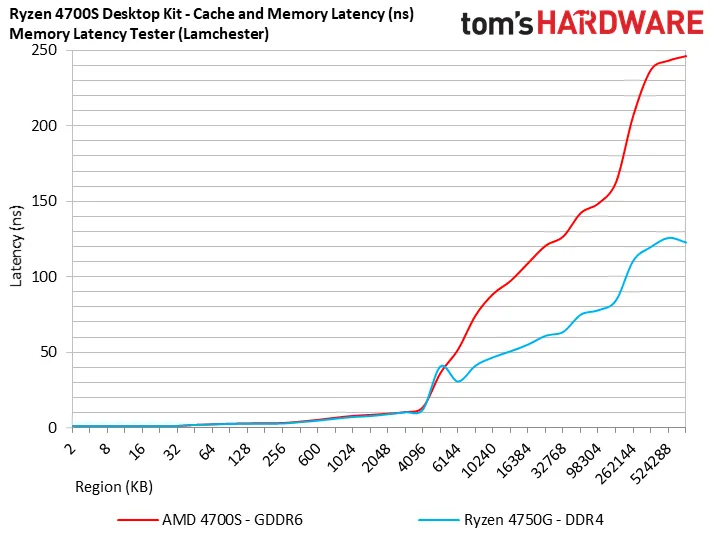

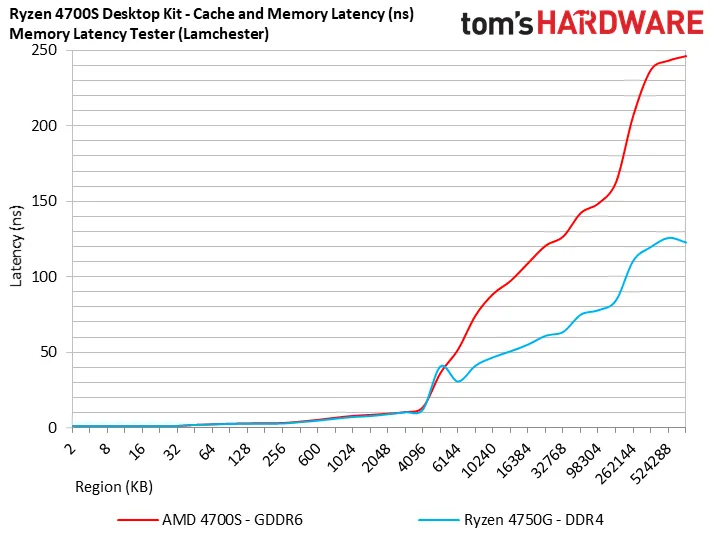

These have, of course, been benchmarked. Can't get solid direct gaming comparisons due to the APU being disabled and there only being a PCI-E 4x slot, but we can see the CPU performance compromises that had to be made, for the sake of making a practical console SoC, through the latency of the GDDR6 system (vs. a Zen 2 PC APU with a nearly identical CPU component, which should closely match a 12th gen Intel paired with about the most generic memory possible in memory latency):

if that holds water I'm sure us low and mid tier PC owners will do just fine

I'm sure they will, if they are content with the same 30 fps floor, and/or similar visual quality.

An RTX 2080 and RX 6800 XT are not as good as an RTX 4090 Ti, but would you get a better experience playing with a monster GPU? I don't think so

There are always more settings to turn up.

I'm entirely GPU limited in games that are six or seven years old on a GPU that is faster than the out-of-box state of anything that can be bought today.

When I finally play Starfield, I expect to want to use settings my RTX 4090 cannot handle, but I expect the game to play well on my RX 6800 XT (which is less than half as fast), probably even on my remaining GTX 1080 Ti (which is half as fast as the 6800 XT). The point at which I no longer extract a better experience--in a late 2023 AAA title with recommended specs at the level of Starfield's--from a better GPU, is probably a decade away.

Right, I was wrong, it's for consoles.

Currently, there is no FPS lock announced for Starfield on PC. The Frame Rate will be determined by your specific PC specs but we expect high-end rigs to be able to reach 120+ FPS at launch.

What is Starfield’s FPS on Xbox & PC? All Frame Rates

Upcoming space exploration title Starfield is under fire over recent news regarding its FPS (or Frames Per Second).

So what I like is 60 FPS in games; I don't need more for an RPG. When I play games like ARMA 3 in MP, I want as high FPS as possible and turn down the eye candy.

Since I'm not going to rebuild my PC 100%, I'm just adding a new GPU and an NVMe SSD card via the PCIe slot, because rebuilding is going to be more expensive than last time. I always build one that will last for many years, and that is not cheap these days. My last build, in 2014, was an ASUS Extreme Black Edition X79 and a 4960X CPU, with first a GTX 980 and then a GTX 1080, and 32 GB of RAM installed. It was top dog in 2014-2016; now it's a low-end PC.

The 'locked at 30fps' for Xbox statement seems to have been jumped on a lot by quite a few PC players looking to find fault with Starfield, even before its release...namely by those stalwarts of misinformation that didn't read any further than that particular part of the statement (conveniently ignoring the small print) before heading off to t'interwebs for a bit of outraged PC master race keyboard rattling...and it's been sprayed around like a Facebook rumour since then

Starfield was never going to be limited to 30fps on PC...Bethesda even went to great lengths to explain why they had made the decision to hard lock it to 30fps on the Xbox

So what I like is 60 FPS in games; I don't need more for an RPG. When I play games like ARMA 3 in MP, I want as high FPS as possible and turn down the eye candy.

My point regarding CPU and GPU balance with regard to all of this is that once you have a CPU that lets you reach your target FPS, whatever that is, there is little practical limit to the GPU you can make use of, because you can keep that frame rate and just turn up the eye candy. If one doesn't care about eye candy, then they can opt for a cheaper GPU.
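To put that balance point in concrete terms, here is a toy model (a sketch only, not a benchmark): the delivered frame rate is treated as the lower of what the CPU and the GPU can each sustain. The function names and every fps figure below are made up for illustration.

```python
def delivered_fps(cpu_fps_limit: float, gpu_fps_limit: float) -> float:
    """The slower of the two components sets the frame rate."""
    return min(cpu_fps_limit, gpu_fps_limit)


def limiting_component(cpu_fps_limit: float, gpu_fps_limit: float) -> str:
    """Name the bottleneck; a tie is reported as GPU-limited."""
    return "CPU" if cpu_fps_limit < gpu_fps_limit else "GPU"


# Hypothetical numbers: the CPU can prepare 90 frames per second no matter
# what the graphics settings are, while the GPU slows down as the eye candy
# goes up.
cpu_limit = 90.0
gpu_limit_by_preset = {"low": 200.0, "high": 110.0, "ultra": 70.0}
target_fps = 60.0

for preset, gpu_limit in gpu_limit_by_preset.items():
    fps = delivered_fps(cpu_limit, gpu_limit)
    bottleneck = limiting_component(cpu_limit, gpu_limit)
    status = "meets" if fps >= target_fps else "misses"
    print(f"{preset}: {fps:.0f} fps, {bottleneck}-limited, {status} the {target_fps:.0f} fps target")
```

In this made-up case the CPU only matters at low and high settings; once the settings go to ultra, the GPU is the limit, and a faster GPU is what would buy more frames.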

Since I'm not going to rebuild my PC 100%, I'm just adding a new GPU and an NVMe SSD card via the PCIe slot, because rebuilding is going to be more expensive than last time. I always build one that will last for many years, and that is not cheap these days. My last build, in 2014, was an ASUS Extreme Black Edition X79 and a 4960X CPU, with first a GTX 980 and then a GTX 1080, and 32 GB of RAM installed. It was top dog in 2014-2016; now it's a low-end PC.

The attempt at future proofiness is generally more expensive in the long run. That Rampage IV Black Edition was a ~$500 board in 2014, and the 4960X was a ~$1000 CPU. That nearly covers my last three boards and CPUs. The system I built back in early 2012 had a ~$220 board paired with a 3930K (that I ran at 4.3GHz) and would have provided similar performance to a 4960X, to this day (they are both 6c/12t parts, of the same architectural tock, with the same instruction sets, extremely similar IPC, and similar clock speed potential).

Even for builds where I'd recommend a $1600 GPU outright, the entire system (well, the contents of the case anyway) is probably going to cost less than $2500 (including that GPU), because there is little or nothing to be gained from a general use or gaming perspective from spending more. If a gaming-focused system's budget isn't at least 60% video card, it's probably wasting money somewhere. Such a balance allows every bit of its performance to be leveraged day one, and generally still allows the platform to be kept through two or three top-tier GPU upgrades, if necessary.
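A quick sanity check of that 60% rule of thumb, using the $1600 GPU and $2500 system figures from the paragraph above. The helper names are hypothetical, and the threshold is just the rule as stated here, not any kind of industry standard.

```python
def gpu_budget_share(gpu_cost: float, system_cost: float) -> float:
    """Fraction of the total system budget spent on the video card."""
    if system_cost <= 0:
        raise ValueError("system_cost must be positive")
    return gpu_cost / system_cost


def meets_gpu_rule(gpu_cost: float, system_cost: float, threshold: float = 0.60) -> bool:
    """True if the GPU takes at least `threshold` of the budget."""
    return gpu_budget_share(gpu_cost, system_cost) >= threshold


share = gpu_budget_share(1600, 2500)
print(f"GPU share of budget: {share:.0%}")
print("Meets the 60% rule:", meets_gpu_rule(1600, 2500))
```

Since $2500 is the upper bound on the system cost, the GPU share in such a build would be at or above this figure.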

For example, my next gaming-box upgrade is going to consist of a 7800X3D (or its immediate successor), a $125 motherboard, and the absolute cheapest memory kit that has the ICs I'm looking for that I can find (probably about $100 for 32GiB of Hynix M-die stuff, or $120 for A-die, should the 8000 series be ready and have a meaningfully better memory controller), and it will still be fast enough that there won't be anything out there that would be a meaningful improvement upon it for its intended purpose (submitting frames to my overclocked RTX 4090).

Not that I haven't personally built plenty of systems that are far from exemplars of budgetary efficiency, or don't build more systems than I actually need (I average about one build a year for my personal use), but in my case the process is the goal; I'm often building them as a PC enthusiast/hobbyist first and a user second. Those with purely utilitarian goals don't need expensive boards at all, rarely benefit from the most expensive CPUs available, and can usually get away with relatively infrequent platform upgrades.

Yeah, you got a good point there, maybe find something that will fit the need and just upgrade from there. I mean, my PSU is still awesome, an EVGA 1200 W P2, it's a beast, and the service from EVGA is just gold standard. Same with my case, I simply don't need a new one as I got plenty of space, so yeah, maybe just find something that works for my gaming needs and upgrade if needed in the future. I was thinking of the 7900X to get higher MHz because ARMA 3 is one of the games I play a lot and that one loves high MHz systems.

I was thinking of the 7900X to get higher MHz because ARMA 3 is one of the games I play a lot and that one loves high mhz systems.

With a 7900X you're paying for a whole second CCD just to get one that clocks a few percent higher than the 7600 or 7700. It also costs nearly as much as the 7800X3D, which will almost certainly perform better, even in ARMA 3, despite the clock speed deficit.

If you're trying to save money without cutting too many corners and aren't sure if the v-cache of the X3D parts will be a major advantage, the 7700X is the CPU to get. It's within margin of error of the 7900X or 7950X in gaming performance, while still having enough cores to be viable for quite a while. It's also only $10 more than the 7700 non-X and $120 less than the 7800X3D.

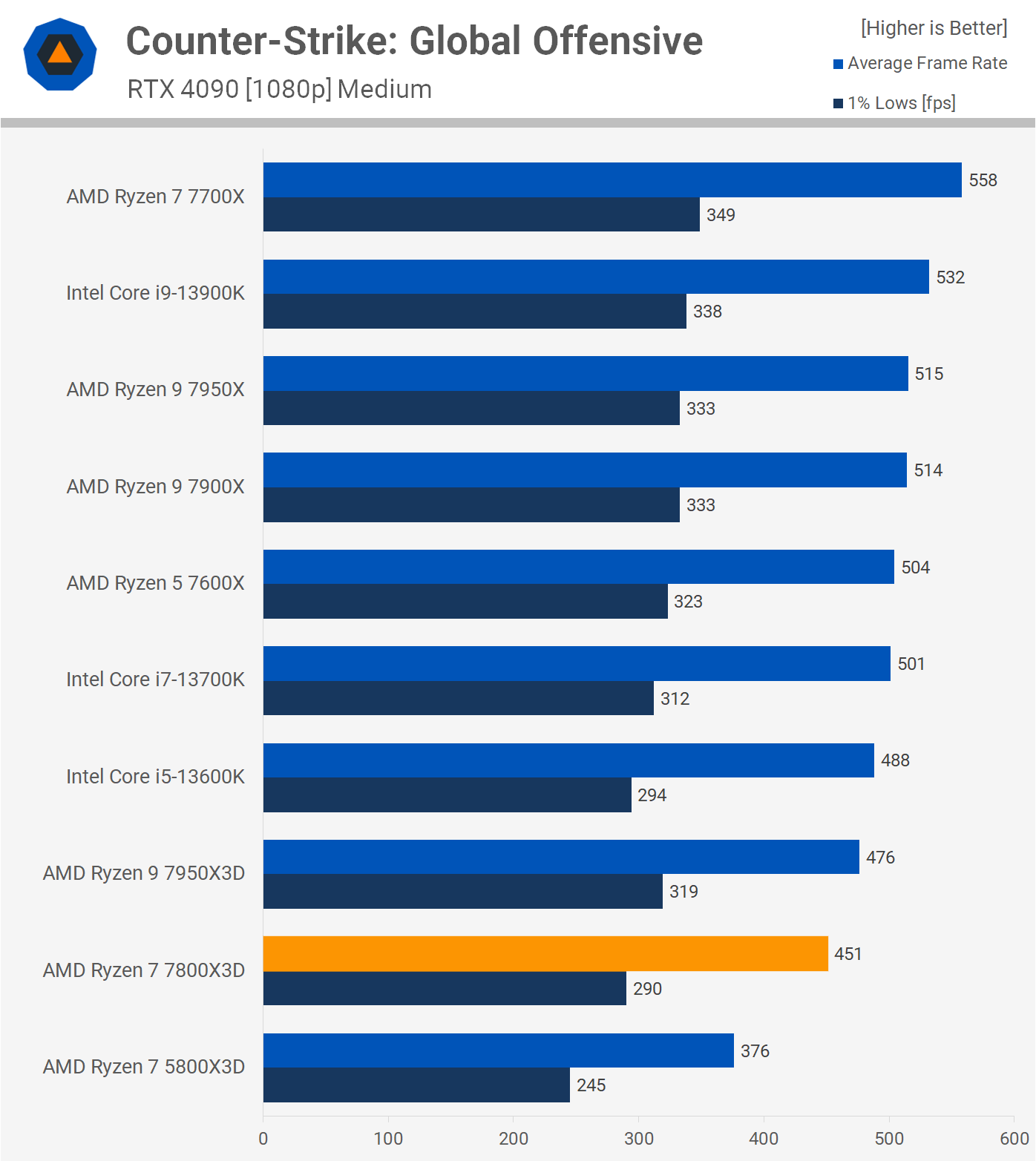

Finding competent ARMA 3 benchmarks on modern hardware is a pain, but in general, this is what the relative performance looks like across AMD CPUs in a lightly threaded game (CS:GO in this case) that doesn't need a ton of L3 cache:

That particular engine favors AMD, but even if you ignore the Intel parts and just compare AMD against itself, you can see the 7700X is actually faster than any of the dual CCX Zen 4 parts. The second CCX provides no advantage to any game, aside from some rare extremely well-threaded RTSes, and occasionally harms performance in latency sensitive tasks because the CCXes have to communicate over the IOD. The scheduling issues this causes, as well as the higher thermal loads, are often enough to negate the ostensible boost clock advantage. Even on a good day, the 7900X is a wash with the 7700X and the 7950X is at best 3-4% faster, outside of those ultra-well-threaded RTS type titles.

Example platform upgrade:

Ryzen 7 7700X - $320

2x16GiB DDR5 6000 CL30 (e.g. G.Skill Flare X5) - $110

ASRock B650M-HVD/M.2 - $125

Thermalright AK120 SE cooler - $30

That sub-$600 collection of parts will come within 10-20% of the frame rates of the best I could build with an unlimited budget, where I'd spend more than $600 on just the CPU cooling. It's also not really sacrificing any reliability and still has an upgrade path.
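For anyone who wants to swap parts in and out, the tally for the example list above can be sketched like this. The prices are the ones quoted in this post and will obviously drift; the $600 ceiling is just the figure mentioned above.

```python
# Prices in USD, as listed in the example platform upgrade above.
parts = {
    "Ryzen 7 7700X": 320,
    "2x16GiB DDR5-6000 CL30": 110,
    "ASRock B650M-HVD/M.2": 125,
    "Thermalright AK120 SE cooler": 30,
}

budget_ceiling = 600  # the "sub-$600" figure from the post

total = sum(parts.values())
for name, price in parts.items():
    print(f"{name:<32} ${price}")
print(f"{'Total':<32} ${total}")
print(f"Under ${budget_ceiling}: {total < budget_ceiling}")
```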

Gotta love this newly minted SF fan boi's perspective over at Refundian U

Source: http://www.reddit.com/r/starcitizen_refunds/comments/14onx67/if_you_cant_beat_the_jpeg_salesman_become_the/

Lol, Bored Gamer, I remember that guy. He's the Factabulous of Star Citizen, or something like that.

Anyways.. as far as my suckering for starfield...

Limited controller: A thing of beauty. I don't even have an Xbox.. but because of the feature of AA batteries, can be open to boutique controllers. It's very nice.

Limited headset: On first listen, it's woefully bad.. but after setting the EQ to "music", letting it burn in and getting used to the sound, it doesn't remind you it's bad on daily listen. What's novel about it.. it's the only headset I have which has deliberately high levels of bass. It's completely wrong.. but very pleasurable for certain types of music. Soundtracks and electronic music actually do really well; anything with electric guitars, not so much, noticeably bad. It also has the largest and most detailed soundstage of anything I personally own, including open backs. It's like halfway to the insanity of the M50x, but pushed out much further, and creates a bigger surround effect.

Constellation edition: Refused to be suckered, as I would want to use the watch.

Game: Preordered the steelbook, plus will get gamepass off legitimate key sites (no vpn etc) for an extended period. See what happens if the game is good later.

I don't get why no one talks about the watch, lol. If there was anything mildly interesting in the merchandise, it'd have been the watch for me.

play with your builds here and check bottleneck etc.

Whoa.. wasn't expecting this.. I'm bumping into more CPU-bottlenecked games.. and turning off Windows Defender real-time scanning is still working.. tonight it pushed SF6 World Tour from low 50s to mid 50s, plus all settings maxed except crowd density set to low.

Fingers crossed for starfield... heeeeee

I don't get why no one talks about the watch, lol. If there was anything mildly interesting in the merchandise, it'd have been the watch for me.

Yeah, someone else mentioned that as well... I can't get past the low-res display, which could be an indicator of other cheapness.

Source: https://www.reddit.com/r/Starfield/comments/ug70tn/the_fcc_has_information_about_the_wand_company/

Look up the info in the government link.. still not convinced.

Thanks, great suggestion. I will for sure see how that will work. It gave me something to consider, so good advice.

The fact that turning off Windows Defender is even perceptible should be a huge hint that four logical cores is no longer enough.

Anyway, even if this bottleneck calculator was accurate (and it's not, at least when it comes to modern titles paired with low core-count CPUs), the i5-2500k is a Sandy Bridge part that lacks AVX2. I'm doubtful it will run Starfield at all...not in the sense the game will be a slideshow on it, but that it will refuse to launch or just crash upon launching.
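If you want to check whether a given CPU reports AVX2 before worrying about it, here is a minimal sketch. It assumes an x86 Linux system, where the kernel lists CPU feature flags in /proc/cpuinfo; it won't work as-is on Windows, where a tool like CPU-Z shows the same information. The function name is made up for the example, and whether Starfield actually requires AVX2 is the concern raised above, not something this script can confirm.

```python
from pathlib import Path


def has_cpu_flag(cpuinfo_text: str, flag: str = "avx2") -> bool:
    """Return True if `flag` appears in the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            # Split into whole tokens so that "avx" does not match "avx2".
            return flag in value.split()
    return False


cpuinfo_path = Path("/proc/cpuinfo")  # Linux-only location (assumption)
if cpuinfo_path.exists():
    text = cpuinfo_path.read_text()
    print("AVX2 reported:", has_cpu_flag(text, "avx2"))
else:
    print("No /proc/cpuinfo here; check the CPU's spec sheet instead.")
```

A Sandy Bridge part like the i5-2500K would be expected to list `avx` but not `avx2`.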

Windows Defender is malware. It doesn't matter how many cores you have, it will kill performance as it rolls through your RAM pretending to do something useful while it ~~harvests your data~~ collects telemetry to send to Microsoft. Should be one of the first things disabled on a new install.

Defender does reasonably well in third party tests, well enough that I probably wouldn't bother with a different persistent AV on Windows systems. The bulk of its privacy issues (and some of its effectiveness) can be mitigated by disabling sample submission and SmartScreen. If one desires more privacy, then that pretty much rules out effective real-time AV, which most consumers probably shouldn't be without.

I've never seen it kill performance on any system I've used it on, though I've never used it on anything slower than an i7-6800K. It's functionally transparent on the systems I currently have it running on (a 3950X with 128GiB of DDR4-3400 and a 5800X with 32GiB of DDR4-3800, which are both running Windows 10 22H2) vs. my other gaming capable Windows (Server 2016 and 2022) systems that it's never even been installed, let alone enabled, on (an i7-5820K and a 5800X3D). I'm sure I could bench small differences in app startup times and the like, but the hit from it certainly isn't obtrusive. Actively running a scan impacts performance, but that shouldn't be happening while doing anything heavy, and applies to any AV.

There was a bug not long ago where Defender's real-time monitoring would hog a bunch of performance counters on 8th through 11th gen Intel CPUs, causing increased overhead. Not sure if that's been fixed or not, but the only Intel processors I still have from these generations are laptops running Linux.