My screen's limit is 609 lumens. Is it worth digging into the hidden HDR settings? I did something similar (basically enabled native HDR support in UE5) for Oblivion Remastered and it made it look gorgeous!

Normally the relevant unit of brightness is the nit rather than the lumen. Lumens measure the total light emitted (luminous flux), while nits measure luminance: candelas per square meter, i.e. how bright a surface appears per unit area. Somewhere around 500-600 nits is where HDR starts to be appreciably better than SDR, while 1000+ is ideal. The higher the panel's native contrast ratio and the darker the viewing environment, the less brightness is required for a good experience.
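To see why the two units aren't interchangeable: for an idealised emitter that spreads its light evenly over its surface with a Lambertian (cosine) distribution, luminance is the flux divided by π times the emitting area. The sketch below assumes exactly that, which real panels only approximate, and `panel_area_m2` is a hypothetical convenience helper for 16:9 screens.

```python
import math


def lambertian_luminance_nits(flux_lm: float, area_m2: float) -> float:
    """Luminance in nits (cd/m^2) of an ideal, uniformly lit Lambertian emitter.

    A Lambertian surface radiating `flux_lm` lumens from `area_m2` square
    meters has luminance L = flux / (pi * area).
    """
    if area_m2 <= 0:
        raise ValueError("area must be positive")
    return flux_lm / (math.pi * area_m2)


def panel_area_m2(diagonal_in: float, aspect_w: float = 16.0, aspect_h: float = 9.0) -> float:
    """Hypothetical helper: flat-panel area in m^2 from its diagonal in inches."""
    diag_m = diagonal_in * 0.0254
    scale = diag_m / math.hypot(aspect_w, aspect_h)
    return (aspect_w * scale) * (aspect_h * scale)


if __name__ == "__main__":
    area = panel_area_m2(27)
    print(f"27-inch 16:9 panel area: {area:.3f} m^2")
    print(f"100 lm from that panel: {lambertian_luminance_nits(100, area):.0f} nits")
```

The same flux gives very different nit figures depending on screen size, which is one reason display brightness is specified directly in nits.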

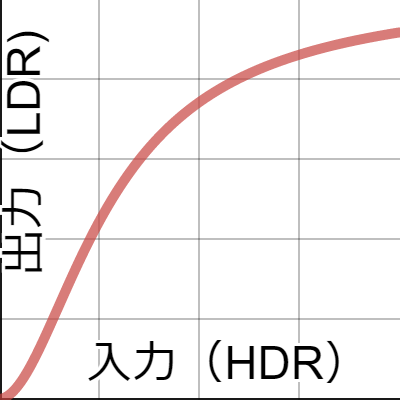

There does seem to be a big hole in Windows' HDR support here. I was expecting at least the basics: an explicit way to set really simple S-curve tonemapping that controls how SDR content is mapped onto the HDR display's gamut. I think the item on the HDR Settings page does something vaguely similar, but without any clue as to what it's actually doing, it's very hard to find a good setting iteratively. (There's also the problem that a good setting for content that looks like ED is quite different from a good setting for a screen full of code.)
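A minimal sketch of the kind of control described here, assuming SDR white is pinned to a chosen "paper white" level and the display expects a PQ (SMPTE ST 2084) signal. The sRGB decode and PQ encode formulas are standard; the `paper_white_nits` default and the smoothstep-based `contrast` knob are hypothetical, and this is not a description of what Windows actually does.

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def srgb_decode(v: float) -> float:
    """sRGB transfer function (IEC 61966-2-1): encoded 0..1 -> linear 0..1."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def pq_encode(nits: float) -> float:
    """Inverse PQ EOTF: absolute luminance in nits -> PQ signal 0..1."""
    y = min(max(nits, 0.0), 10000.0) / 10000.0
    yp = y ** M1
    return ((C1 + C2 * yp) / (1.0 + C3 * yp)) ** M2


def s_curve(v: float, contrast: float) -> float:
    """Hypothetical contrast control: blend v toward smoothstep(v).

    contrast in [0, 1]; 0 is the identity, the endpoints 0 and 1 stay fixed,
    and the curve stays monotonic for contrast <= 1.
    """
    return v + contrast * (v * v * (3.0 - 2.0 * v) - v)


def sdr_to_pq(code_value: float, paper_white_nits: float = 200.0, contrast: float = 0.0) -> float:
    """Map one SDR sRGB channel value (0..1) to a PQ signal for an HDR display."""
    v = min(max(code_value, 0.0), 1.0)
    linear = srgb_decode(s_curve(v, contrast))
    return pq_encode(linear * paper_white_nits)


if __name__ == "__main__":
    for cv in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(cv, round(sdr_to_pq(cv), 4), round(sdr_to_pq(cv, contrast=0.5), 4))
```

Keeping the two knobs separate (where SDR white lands, and how much S-curve is applied) is what would make it possible to tune them iteratively, e.g. a higher contrast for games and zero for a screen full of code.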

Thank you for the tip about changing the auto-exposure settings; I'll look into that. I didn't even bother trying Auto HDR because I assumed ED constantly adjusting its grading would confuse the heck out of the "auto" part of Auto HDR. (To be clear, I think the way ED does this in SDR is very smart, and I'm a fan of the look it produces, but I do think they could have done more to eliminate the common cases where it's metastable and causes flicker or back-and-forth contrast changes.)

I also note that Microsoft's latest answer to "make HDR better" is to add an option to turn it off except for movies. That does solve the problem of "I want to watch HDR Netflix but also be able to see my desktop", but it somewhat signposts that they're not close to doing anything useful with wider colour management modernisation.

I used some very old tonemapping settings from early in the game's life (when ED station interiors were much darker) as a reference, converted them to ACES (which was added much later) with an online plotter, then tuned to taste. The result is an effect I find better than the stock curve, which is way too bright, IMO, when trying to map the game to a much wider dynamic range.
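For a scriptable alternative to an online plotter, the override parameters can be evaluated directly. The tone curve element names appear to match John Hable's "Uncharted 2" filmic operator (A = ShoulderStrength through F = ToeDenominator, W = LinearWhite), and the ACES_A..ACES_E values match Krzysztof Narkowicz's widely used rational ACES fit. That ED evaluates them exactly this way, without extra exposure or scaling steps, is an assumption; treat this as a rough side-by-side comparison only.

```python
from dataclasses import dataclass


@dataclass
class FilmicParams:
    """Tone curve values copied from the HDRNode block of the override file."""
    shoulder_strength: float = 0.491  # A
    linear_strength: float = 0.707    # B
    linear_angle: float = 0.704       # C
    toe_strength: float = 1.185       # D
    toe_numerator: float = 1.377      # E
    toe_denominator: float = 1.976    # F
    linear_white: float = 5.242       # W


def _hable_raw(x: float, p: FilmicParams) -> float:
    """Hable's filmic operator: ((x(Ax+CB)+DE) / (x(Ax+B)+DF)) - E/F."""
    a, b, c = p.shoulder_strength, p.linear_strength, p.linear_angle
    d, e, f = p.toe_strength, p.toe_numerator, p.toe_denominator
    return (x * (a * x + c * b) + d * e) / (x * (a * x + b) + d * f) - e / f


def hable(x: float, p: FilmicParams) -> float:
    """Filmic curve normalised so that the linear white point maps to 1.0."""
    return _hable_raw(x, p) / _hable_raw(p.linear_white, p)


def aces_fit(x: float, a: float = 2.51, b: float = 0.03, c: float = 2.43,
             d: float = 0.59, e: float = 0.14) -> float:
    """Rational ACES approximation using the ACES_A..ACES_E values, clamped to 0..1."""
    y = (x * (a * x + b)) / (x * (c * x + d) + e)
    return min(max(y, 0.0), 1.0)


if __name__ == "__main__":
    p = FilmicParams()
    print(" scene    filmic    aces")
    for x in (0.01, 0.05, 0.18, 0.5, 1.0, 2.0, 5.242, 10.0):
        print(f"{x:6.3f}  {hable(x, p):8.4f}  {aces_fit(x):6.4f}")
```

Printing both columns over the same scene values makes it easy to see where one curve is darker or brighter than the other while tuning.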

www.desmos.com

Converts between common color spaces such as ACES, sRGB, P3, and more.

physicallybased.info

https://dev.epicgames.com/documenta...ng-and-the-filmic-tonemapper-in-unreal-engine (a basic, largely engine-agnostic reference for most of the relevant terminology)

The specific curve will depend on how much brightness and contrast you have on tap. Basically, you want the game dark enough to look right in some marginally lit reference situation, without black crush, at whatever HDR injector settings let the brightest objects take full advantage of the display. Tuned 'right', I can see in very low light without night vision, still have gloomy station interiors and concourses (where they aren't supposed to be brightly lit), and yet can barely look at the screen when my ship is pointed at a bright star.

Of course, the auto HDR method has a huge impact as well. I found it easiest to retain the game's tonemapping, but some people might prefer to set the game to a completely linear output and then use their HDR injector's inverse tonemapping to do all the work. At some point I might re-evaluate my own settings and go this route, to make them more portable across my systems (which all have very different HDR-capable displays).
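In its simplest form, inverse tonemapping means undoing a known curve to recover scene-linear values. As an illustration only (HDR injectors use their own, more elaborate methods), the rational ACES fit with the ACES_A..ACES_E values from the override file can be inverted exactly by solving a quadratic.

```python
import math

# ACES_A..ACES_E values from the override file (assumed to be the rational ACES fit)
A, B, C, D, E = 2.51, 0.03, 2.43, 0.59, 0.14


def aces_fit(x: float) -> float:
    """Forward rational ACES fit (unclamped), for x >= 0."""
    return (x * (A * x + B)) / (x * (C * x + D) + E)


def aces_fit_inverse(y: float) -> float:
    """Recover scene-linear x from a display value y.

    Rearranging y = x(Ax+B) / (x(Cx+D)+E) gives the quadratic
    (A - C*y) x^2 + (B - D*y) x - E*y = 0; the non-negative root is returned.
    The curve approaches A/C asymptotically, so y must stay below that.
    """
    if not 0.0 <= y < A / C:
        raise ValueError("y outside the invertible range of the curve")
    qa = A - C * y
    qb = B - D * y
    disc = qb * qb + 4.0 * qa * E * y
    return (-qb + math.sqrt(disc)) / (2.0 * qa)


if __name__ == "__main__":
    for y in (0.1, 0.5, 0.8, 0.9, 0.95, 1.0):
        print(f"display {y:.2f} -> scene {aces_fit_inverse(y):.3f}")
```

The inverse gets very steep near the top of the display range, so small differences in bright display values map back to large differences in scene value. That sensitivity is part of why a real inverse tonemapper needs more care than a straight algebraic inversion.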

As for auto-exposure, setting "DisplayLumScale" and "HistogramSampleWidth" to the same value, along with reducing "Percentiles" to a negligible amount (0, 0, 0 or 0.1, 0.11, 0.111), should almost completely eliminate the feature. @[VR]Nieblaash pointed out that latter part to me.

I've been using something like this in my GraphicsConfigurationOverride.xml, though I'm still experimenting:

```xml
<?xml version="1.0" encoding="UTF-8" ?>
<GraphicsConfig>
    <HDRNode>
        <HistogramSampleWidth>164.000000</HistogramSampleWidth>
        <ExposureType>2</ExposureType>
        <Percentiles>0.010000,0.011000,0.011100</Percentiles>
        <ManualExposure>0.0</ManualExposure>
        <ShoulderStrength>0.491000</ShoulderStrength>
        <LinearStrength>0.707000</LinearStrength>
        <LinearAngle>0.704000</LinearAngle>
        <ToeStrength>1.185000</ToeStrength>
        <ToeNumerator>1.377000</ToeNumerator>
        <ToeDenominator>1.976000</ToeDenominator>
        <LinearWhite>5.242000</LinearWhite>
    </HDRNode>
    <HDRNode_Reference>
        <HistogramSampleWidth>164.000000</HistogramSampleWidth>
        <ExposureThreshold>1.000000</ExposureThreshold>
        <Percentiles>0.010000,0.011000,0.011100</Percentiles>
        <ManualExposure>0.0</ManualExposure>
        <GlareCompensation>1.25</GlareCompensation>
        <ShoulderStrength>0.491000</ShoulderStrength>
        <LinearStrength>0.707000</LinearStrength>
        <LinearAngle>0.704000</LinearAngle>
        <ToeStrength>1.185000</ToeStrength>
        <ToeNumerator>1.377000</ToeNumerator>
        <ToeDenominator>1.976000</ToeDenominator>
        <LinearWhite>5.242000</LinearWhite>
        <ACES_A>2.51</ACES_A>
        <ACES_B>0.03</ACES_B>
        <ACES_C>2.43</ACES_C>
        <ACES_D>0.59</ACES_D>
        <ACES_E>0.14</ACES_E>
    </HDRNode_Reference>
</GraphicsConfig>
```

Edit: Corrected missing percentiles in HDRNode.
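Since it's easy to drop an element from one of the two nodes when hand-editing, a small checker can catch that before launching the game. This is a hypothetical helper built on Python's standard `xml.etree.ElementTree`; it only checks the auto-exposure elements discussed above and knows nothing about what ED itself accepts. The DisplayLumScale comparison assumes that element, when present, sits in the same node as HistogramSampleWidth.

```python
import sys
import xml.etree.ElementTree as ET
from typing import Union

# Nodes and auto-exposure elements used in the override file above.
NODES = ("HDRNode", "HDRNode_Reference")
REQUIRED = ("HistogramSampleWidth", "Percentiles")


def check_override(xml_data: Union[str, bytes]) -> list[str]:
    """Return human-readable problems found in an override file's contents."""
    problems: list[str] = []
    root = ET.fromstring(xml_data)
    for name in NODES:
        node = root.find(name)
        if node is None:
            problems.append(f"missing <{name}>")
            continue
        for tag in REQUIRED:
            if not node.findtext(tag):  # absent or empty
                problems.append(f"<{name}> has no <{tag}>")
        pct = node.findtext("Percentiles")
        if pct:
            values = [float(v) for v in pct.split(",")]
            if len(values) != 3 or values != sorted(values):
                problems.append(f"<{name}> Percentiles should be three ascending values")
        # Assumption: if DisplayLumScale is set in this node, it should match
        # HistogramSampleWidth, per the auto-exposure tip.
        lum = node.findtext("DisplayLumScale")
        width = node.findtext("HistogramSampleWidth")
        if lum and width and float(lum) != float(width):
            problems.append(f"<{name}> DisplayLumScale differs from HistogramSampleWidth")
    return problems


if __name__ == "__main__":
    # Hypothetical usage: python check_override.py GraphicsConfigurationOverride.xml
    with open(sys.argv[1], "rb") as fh:
        issues = check_override(fh.read())
    print("\n".join(issues) if issues else "No problems found.")
```

Reading the file as bytes lets the parser honour the XML declaration's encoding itself.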