Push graphic quality to the limits

- Thread starter IIIIIII

- Status

- Thread Closed: Not open for further replies.

Well, using Ctrl-F shows me that I'm getting up to 200fps from my 100% GPU use, although that's a maximum and not an average (average is around 120fps, dropping to maybe 90fps in busy stations or belts). That's on a 4GB GTX760, so a 2-year-old card can triple an "acceptable" value of 60fps and still beat it even in worst-case scenarios.

As someone whose first gaming experience was on an old Pong clone, then the Atari 2600, I can truthfully say I'm not noticing any real "generational" increase in graphics quality over the last few years; the gains have mainly been in computational grunt (framerates and/or resolutions supported). In other words, every new game still looks like a 2009 title to me, so I don't see the problem, because it is all-pervasive.

Per one of my posts above, we're assuming v-sync, otherwise yes, it's always 100%.

When it comes to quality, there are new shaders, new techniques such as tessellation, lighting models like HBAO, etc. So there's a lot of stuff.

Elite's stations are somewhat plain and low poly.

Compare Threvick dock to Mass Effect's Citadel. I ran the latter at 60fps on hardware 2 generations below my GTX660. Having high GPU usage while in a low poly torus with some textures and an odd ship thrown in for good measure is, for me, a symptom of a problem somewhere.

Again, that's why CPU and GPU manufacturers provide profilers. You just need to allocate time for your team to use them.

Back in the 1990s, ASM was king, and every core procedure was being rewritten in assembler to optimize the C++ code. Nowadays, there are frameworks for frameworks, for managed language frameworks.

As a result, people don't even bother to pursue optimization beyond very basic pitfalls. That's why people have gfx performance issues.

- - - - - Additional Content Posted / Auto Merge - - - - -

A side question regarding graphics: I have a 144Hz screen set to the recommended resolution, 1920x1080, and the same in game. Should v-sync be on?

You should set the resolution to the native resolution of your panel, whatever it might be.

If you have a 144Hz screen (clocked at 144Hz), there's no point in using vsync, unless you push well over 144fps and you see screen/frame tearing.
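To put rough numbers on that, here is a minimal Python sketch of the frame-time arithmetic. It uses the 144Hz panel from the question and the 90, 120 and 200fps figures quoted earlier in the thread; the helper names `frame_time_ms` and `exceeds_refresh` are made up for illustration. Comparing framerate to refresh rate is a simplification and says nothing about how visible tearing actually is on a given setup.

```python
def frame_time_ms(rate: float) -> float:
    """Milliseconds per frame at a given framerate (fps) or refresh rate (Hz)."""
    return 1000.0 / rate


def exceeds_refresh(fps: float, refresh_hz: float) -> bool:
    """True when frames are delivered faster than the panel can show them."""
    return fps > refresh_hz


REFRESH_HZ = 144.0  # the panel from the question above

if __name__ == "__main__":
    print(f"panel: {frame_time_ms(REFRESH_HZ):.2f} ms per refresh")
    for fps in (90.0, 120.0, 200.0):  # figures quoted earlier in the thread
        if exceeds_refresh(fps, REFRESH_HZ):
            note = "above refresh rate"
        else:
            note = "within refresh rate"
        print(f"{fps:5.0f} fps -> {frame_time_ms(fps):.2f} ms per frame ({note})")
```

On these figures only the 200fps peak outruns a 144Hz panel; the 120fps average and 90fps worst case stay under it.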

I'm running an older 120Hz 1080p panel (3D Vision Ready (TM)) so vsync is probably going to be less indicative, and tbh I've always turned it off as some earlier game engines would delay input processing until the next frame was rendered (after waiting for the current frame to finish displaying) so it could produce some less-than-smooth input issues on twitch games I used to play. I disable vsync out of habit now, although I now need to check that ED can enable it again when I'm using the Rift ^^;

I played ME2 and ME3 on the XBox 360, and they both ran smooth enough on 2005 hardware. Perhaps running on an engine (and art assets) optimised for rendering on the PS3 and XBox 360 @1080p may have had an effect on the PC version? It's possible that the majority of ME3 is actually lower poly than you think it is, and some bloom / bumpmapping and clever use of textures is being used to make background objects look smoother and more detailed than they actually are. Which is not a bad thing, by the way, I think it is an excellent way of providing a good user experience on limited hardware.

ED is being developed as a PC only title, and also as a long term title, so it will probably not get the same engine optimisation attention as a cross-platform title that needs to run on a console. Having said that, current gen consoles are approximately a GTX700 series or R9 270 equivalent in grunt, so I'd also suggest that those should be considered a baseline for new cross-platform titles to expect acceptable performance for something like Evolve or Witcher 3.

Still, I can honestly say I struggle to see a major render quality difference between say Ghosts, which I played on the 360, and Advanced Warfare, which I play on the XBox One, even though there are all these new shader options on the One. Poly counts, texture effects etc, all seem much of a muchness, even though the hardware is nearly a decade apart. That is nothing like the jump from Doom to GLQuake, or Unreal to Unreal 2, in terms of obvious visual improvements.

Fun fact, the GPU in the Xbone is actually slower than a desktop R9 270X or Nvidia equivalent by a very noticeable margin. The R9 270X has almost double the GFLOPS by straight comparison.

Sauce? I mean Source?

Physical specs Xbone gpu core

http://www.techpowerup.com/gpudb/2086/xbox-one-gpu.html

Physical specs R9 270x gpu core

http://www.techpowerup.com/gpudb/2466/radeon-r9-270x.html

Optimization is a very different thing in open world sandbox type games than in... less open games.

In less open games it is easier to identify, or create, what will never be seen, so you can in advance not allocate resources to it. Then there are things that might be seen, so you can prioritise resource allocation based on good guesses about what the player might do, you may even engineer it so the player is more likely to look at what you can optimise for, and you can, as a developer, make good best guesses about what that might be.

In open games there is a different trade-off between the resources allocated to optimisation itself and what is optimised. You have to allocate more resources to what the player might see, because you can't always predict what they will see.

This gets more of an issue the richer the world you want to make. FD have given themselves the most difficult of these combinations. A rich world that is very open in what the player can choose to look at.

They do a great job, but fall down sometimes. One example: someone recently noticed that custom skins on your own ship are not visible from your own cockpit (you see the default), although the skins of other players are, and the skin of your own ship is visible in outfitting.

Edit - just as a matter of interest, I just experimented running ED entirely in a ram drive, all juddering seemed to stop. This is obviously not conclusive as it doesn't always happen for me anyway but I am a compulsive tester so... there ya go...

> No it cannot, because statistically it is missing crucial data. There are hundreds of thousands (more like millions, actually) of gamers playing on mobile phones, tablets and laptops who are not counted in the survey. So it is not accurate in the slightest to say that "people game on 64bit os now", because gaming habits have changed drastically, as have the devices to play on. Also, Windows is not the only platform for gaming; Linux and Apple OS are also gaming capable (if not as well supported).
>
> It can be statistically relevant, and yet still be an inaccurate indicator of the whole due to missing data. It is not "gospel" of the entire gaming community, it is merely a survey of Steam owners who participated. It is only accurate in that context.

No, it isn't. You don't need samples from things that will never run the majority of games (especially the games we're talking about), and which only ever come in specific hardware combinations, so that cuts out phones and tablets. That would be like saying surveys of pregnancy are cutting out crucial data from men.

Laptops are represented.

Apple and Linux are barely supported so again, not relevant data and there's no reason to believe they wouldn't have a similar range of hardware anyway.

It's a statistically relevant sample which you can use to determine the average gaming system. If I'm a developer of high end indie or AAA gaming, the Steam survey tells me what people who would buy my game are using.

I do see your point but lexandro's point is equally valid.

Steam gamers who chose to participate in a poll are a pretty selective group, and I would say it is a stretch to over-generalize its statistical relevance. You only have to read some of the threads on this forum to know how many gamers refuse to use Steam. Also, it seems to me that those who do use Steam are going to be those who tend to have above-average specs on their gaming kit, although of course I do not have figures to back that up.

Steam has millions of active accounts, and unless, as I said, you can find some kind of participation bias then no, it's not a selective group, it's representative of the average desktop gaming PC.

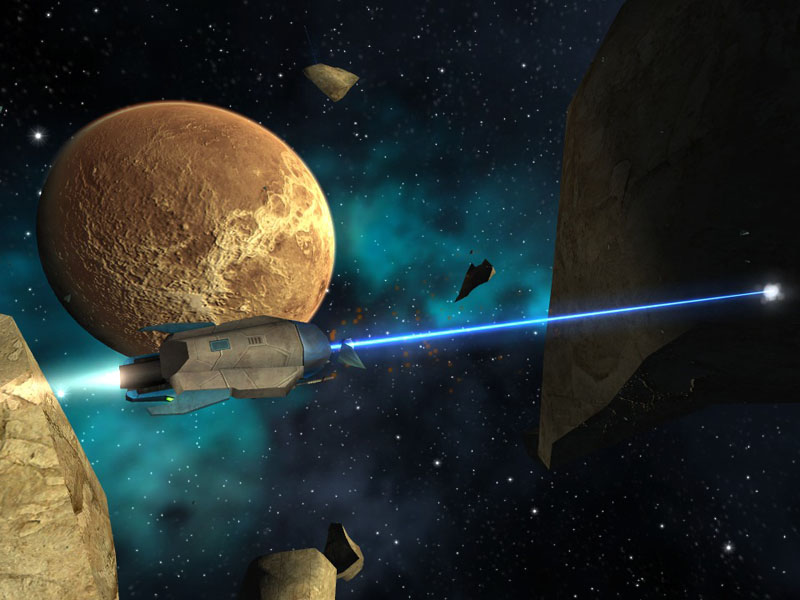

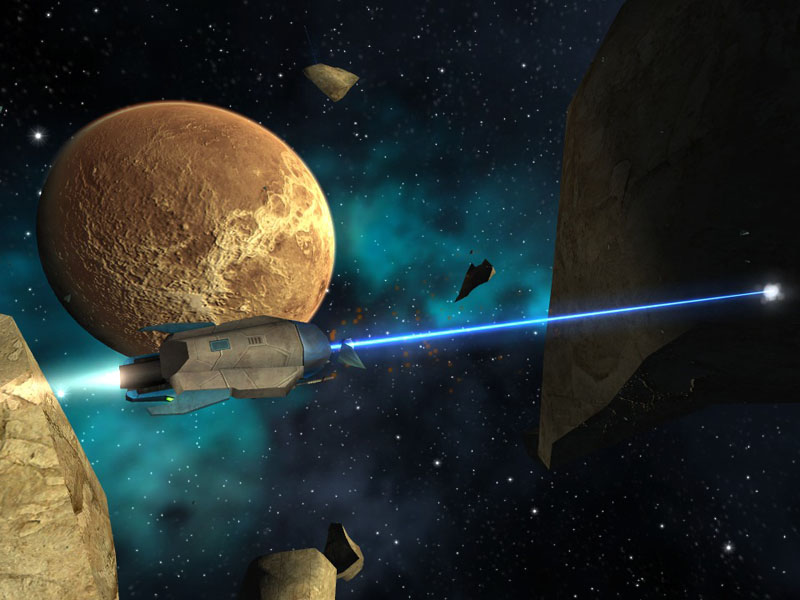

> Compare E|D to Vendetta Online, as a competitor. VO uses at best 20% of all of the resources E|D does, and looks vastly better, IMHO.

Yeah, sure.

This…

looks better than this…

Seriously, put off the weed.

(Did I break the forum? The spoiler tags don't seem to hide one of the screenshots completely…)

Millions of accounts is not millions of actual people though. You have to agree that there are many, many people out there who have multiple accounts or run multi-boxes that skew the numbers. On top of that, we all know that Steam accounts are bought and sold daily. Yes, it's against the ToS, but it still happens. These also skew the numbers. And there are many, many people who do not participate in the Steam survey at all. I myself have a Steam account and I only ever recall one survey, which I did about 8 years ago.

So again I re-iterate the point I made earlier, it is not fair to say that "most people" now play on 64bit OS when the data is known to be not as accurate as portrayed. And also has data missing from it. It would be fair to say that most "dedicated gamers" use 64bit OS for desktops. Laptops and notebooks are still sold in the thousands with a 32bit OS.

There are not many, many at all. There are few games that benefit from that, and fewer people still who are willing to pay for the same game several times.

Those who don't participate are irrelevant as long as the number who do make up the sample is large enough to produce a viable average and the sample has been collected without bias. You don't appear to know how polls and surveys work. You don't need to sample everyone for the output to be relevant. Again, I point out that drug trials don't use every single human being, for exactly this reason.

So I repeat my point again. The Steam survey is a statistically relevant example of gaming systems. It shows the majority have a 64bit OS and CPU. The data is accurate and doesn't have any missing relevant data.

> So I repeat my point again. The Steam survey is a statistically relevant example of gaming systems *of those gamers who choose to use steam*. It shows the majority have a 64bit OS and CPU. The data is accurate and doesn't have any missing relevant data.

Fixed.....

Isn't it a known fact that the new consoles already have old tech in them? The same goes for the PS4.

The Steam hardware survey stats are exactly as representative as, say, the Gallup Poll results, since they use a sample of a couple of thousand people to represent 300+ million.

If statisticians are comfortable using a base of 0.1% (or less) of the population as "representative" because they hand picked a few details, then I fail to see how the Steam survey cannot be considered "representative" of x86 architecture PC gaming, even if only 0.1% of Steam accounts choose to participate. Although I will say that the December hardware results look dodgy as hell and should probably be ignored.

Still, opening the "OS version" line to see the details, Win7 64 gets 46.53%, with Win8.1 64 second at 25.52%. People who completed the Steam Hardware Survey overwhelmingly (more than 70%) use a 64-bit operating system, and as you can see, it also includes OSX and Linux distros. People who run Mac or Linux Steam can also participate in the survey if they choose, and they are all going to be running a 64-bit operating system as well.

Literally everyone I know who plays PC games is running a 64-bit OS to do so, so these results are unsurprising to me. If you want to be able to use more than 4GB of RAM, and want to be able to use all the features of your 64-bit CPU, there is literally no other choice.
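For anyone who wants to check the sampling argument, here is a rough Python sketch of the usual margin-of-error formula for a sample proportion. It assumes a simple random sample, which is exactly the part being disputed in this thread, so it says nothing about participation bias. The sample sizes are hypothetical (the only figure given above is "a couple of thousand" for Gallup), and `margin_of_error` is just an illustrative helper name.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a proportion p
    estimated from a simple random sample of size n."""
    return z * math.sqrt(p * (1.0 - p) / n)


# 64-bit shares quoted above (Win7 64 and Win8.1 64 only).
share_64bit = (46.53 + 25.52) / 100.0

if __name__ == "__main__":
    print(f"combined 64-bit share: {share_64bit:.2%}")
    for n in (2_000, 20_000, 200_000):  # hypothetical sample sizes
        print(f"n={n:>7}: +/- {margin_of_error(share_64bit, n):.2%}")
```

Note that the population size does not appear in the formula at all: under the random-sampling assumption the error depends on how many people were sampled, not on how many exist. Whether Steam's opt-in sample meets that assumption is a separate question.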

Known and fun I guess.

I just changed Environmental Quality from High to Medium, and textures to Medium, honestly couldn't tell the difference anyway, must be my middle aged eyes packing it in. Fixed my stuttering instantly, go figure, had stutter since beta, but after that simple little change, bam, gone.

Obviously not going to work for everyone, but hey if any of you guys haven't tried it, give it a shot.

In all seriousness, do you guys really expect modern PC games to play on 12 to 13 year old hardware? The x64 architecture was introduced by AMD in 2003 and adopted by Intel in 2004. If some diehards still insist on playing on 32-bit x86 PCs today, let them go the way of the dinosaurs already so they stop holding everyone else back.

LMAO!

ED looks dated in some aspects, but this other thing looks like it's from a decade ago, if I should judge based on graphics alone.

The PS4 and XBox One both use an octocore AMD APU with a Graphics Core Next on-chip GPU. The PS4 has 18 GPU compute cores (1152 shader cores) vs 12 GPU compute cores (768 shader cores) in the XBox One.

They aren't "old" tech, but at the (more power efficient, aka slower) clock speeds being used in the consoles they do compare to the R9 270 in terms of GPU performance (with the XBox being like a veteran R9 270 that had 6 compute cores shot off in action with the enemy, but got overclocked in compensation). Google can furnish you with the exact specs and clock speeds of everything if you actually care about that stuff.

Anyone running an R9 290, or 900 series nVidia GPU has more grunt than the consoles, but the compute cores themselves are contemporary designs, just underclocked to reduce heat and power draw.

Try dialing it back a bit. Those iGPU performance specs are overstated a lot.

Xbone

| Spec | Value |
|---|---|
| Shading Units | 768 |
| TMUs | 48 |
| ROPs | 16 |
| Compute Units | 12 |
| Pixel Rate | 13.6 GPixel/s |
| Texture Rate | 40.9 GTexel/s |
| Floating-point performance | 1,310 GFLOPS |

R9 270X

| Spec | Value |
|---|---|
| Shading Units | 1280 |
| TMUs | 80 |
| ROPs | 32 |
| Compute Units | 20 |
| Pixel Rate | 32.0 GPixel/s |
| Texture Rate | 80.0 GTexel/s |
| Floating-point performance | 2,560 GFLOPS |

I don't call nearly double the number of GFLOPS a "minor" difference, do you?

http://www.techpowerup.com/gpudb/2086/xbox-one-gpu.html

http://www.techpowerup.com/gpudb/2466/radeon-r9-270x.html
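As a quick sanity check on the "nearly double" claim, here is a small Python sketch using only the figures quoted above, taking the second set of figures to be the R9 270X as the links suggest. The dictionary and function names are made up for illustration, and the clock speed is not quoted in this thread: it is inferred on the assumption that each shading unit does 2 floating-point operations per clock (one fused multiply-add), then cross-checked against pixel rate divided by ROPs.

```python
# Figures copied from the spec lists above.
XBONE = {"shading_units": 768, "compute_units": 12, "rops": 16,
         "pixel_rate_gpix": 13.6, "gflops": 1310.0}
R9_270X = {"shading_units": 1280, "compute_units": 20, "rops": 32,
           "pixel_rate_gpix": 32.0, "gflops": 2560.0}

# Assumption: one fused multiply-add (2 FLOPs) per shading unit per clock.
FLOPS_PER_UNIT_PER_CLOCK = 2


def implied_clock_ghz(gpu: dict) -> float:
    """Clock implied by the GFLOPS figure under the FMA assumption."""
    return gpu["gflops"] / (FLOPS_PER_UNIT_PER_CLOCK * gpu["shading_units"])


def rop_clock_ghz(gpu: dict) -> float:
    """Independent cross-check: pixel rate divided by ROP count."""
    return gpu["pixel_rate_gpix"] / gpu["rops"]


def gflops_ratio(a: dict, b: dict) -> float:
    """How many times the GFLOPS of b that a delivers."""
    return a["gflops"] / b["gflops"]


if __name__ == "__main__":
    for name, gpu in (("Xbone", XBONE), ("R9 270X", R9_270X)):
        per_cu = gpu["shading_units"] // gpu["compute_units"]
        print(f"{name}: {per_cu} shaders per compute unit, "
              f"~{implied_clock_ghz(gpu):.3f} GHz from GFLOPS, "
              f"~{rop_clock_ghz(gpu):.3f} GHz from pixel rate")
    print(f"R9 270X / Xbone GFLOPS: {gflops_ratio(R9_270X, XBONE):.2f}x")
```

On these numbers the gap comes from both more shading units (1280 vs 768) and a higher implied clock, which together give a ratio a little under 2x.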
