What's with these oddly regular star patterns?

- Thread starter DjVortex

Procedural generation of assets.

There is a similar huge concentration of stars around the Orion Nebula. If you look closely, it’s actually square shaped.

Can’t say for certain, but at a guess this is likely a graphics bug affecting the skybox. You could try resetting your game to see if it persists. Or it could be evidence of blocked star generation.

It’s a big task to build a galaxy, and they didn’t just leave it overnight to grow. It was manually coded and they used a grid system to do so: certain areas were hand built, others imported from catalogues, others filled in by algorithms. Errors were made. It’s not a perfect 1:1 replication.

Just a bug in the game?

It's not a bug as such, so much as an unfortunate consequence of how the galaxy was generated.

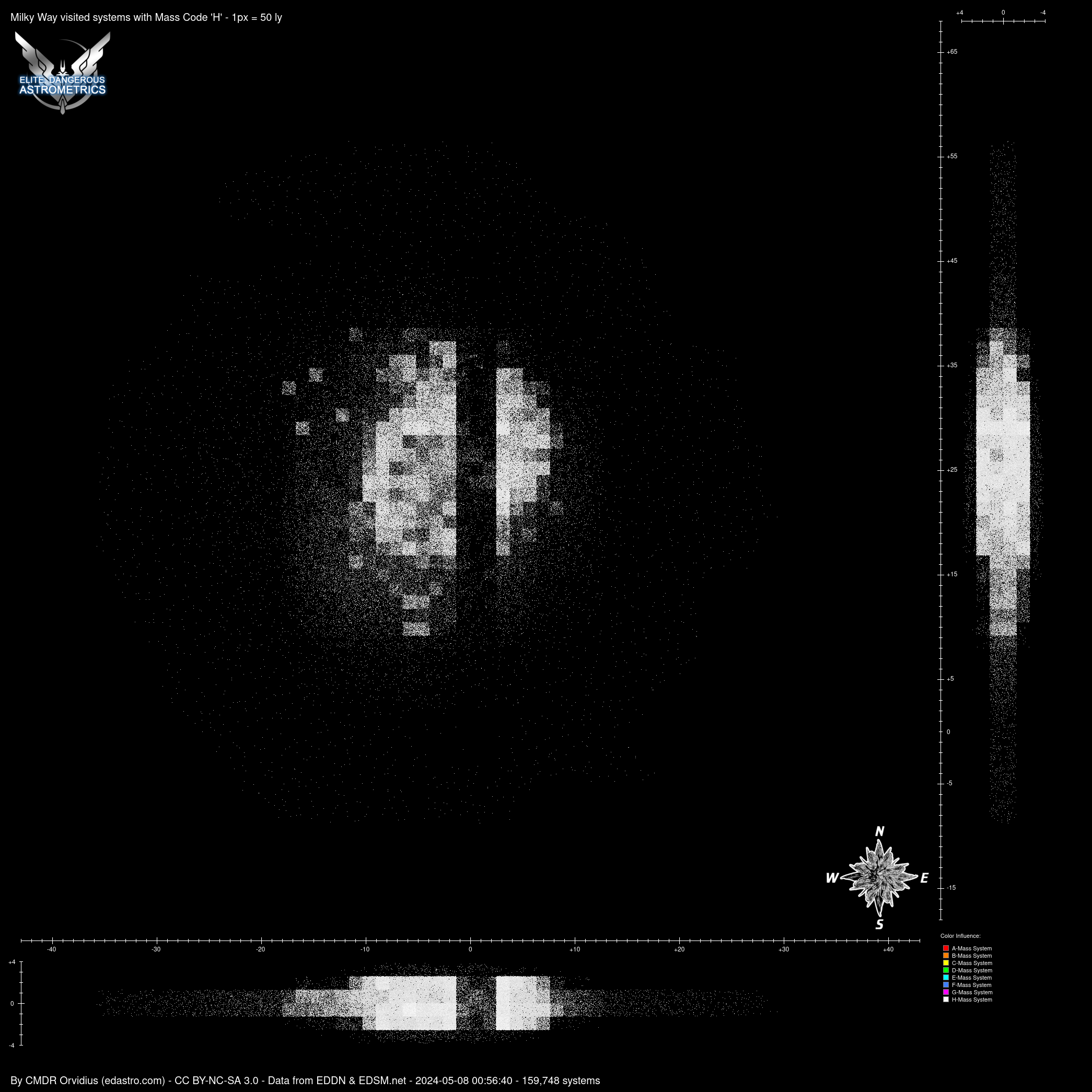

The galaxy - outside of a few hand-placed systems, mostly near the bubble - is built of cubes of stars, each of which has a particular density. Neighbouring cubes have different densities.

This happens everywhere and normally isn't too noticeable ... until you get closer to the core. Unfortunately the 'E'-size cubes (160 LY sides), which contain the B-class stars, happen to have just the wrong combination of "size of cube" and "visibility of stars": when you get closer to the core and some of the cubes start getting fairly high densities, the edges between them show up.

The same happens with the other cube sizes and star types as well, but it's generally not visible, either because even close to the core the cube doesn't get dense enough, or because the stars in the cube aren't bright enough for you to see so many at once, or because there are so many stars around that they exceed the "number of stars" limit in your graphics settings and you get different display issues. (The patterns elsewhere can be picked up in third-party visualisations of the well-explored regions, though.)
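As a rough illustration of why cube edges can show up, here is a minimal sketch in which density is a property of the whole cube rather than of the position inside it. Only the 160 LY side length comes from the post above; the function names and the hash are invented for illustration and are not how Stellar Forge is known to work.

```cpp
#include <cmath>
#include <cstdint>

// Side length of an 'E'-size cube, as quoted in the thread.
constexpr double kCubeSideLy = 160.0;

// Index of the cube containing a coordinate along one axis.
// floor() keeps negative coordinates in the correct cube.
int cubeIndex(double coordLy) {
    return static_cast<int>(std::floor(coordLy / kCubeSideLy));
}

// Made-up per-cube density in [0, 1): a simple hash of the cube indices.
// The real generator's density rule is unknown; this is only a stand-in.
double cubeDensity(int cx, int cy, int cz) {
    std::uint32_t h = static_cast<std::uint32_t>(cx) * 73856093u
                    ^ static_cast<std::uint32_t>(cy) * 19349663u
                    ^ static_cast<std::uint32_t>(cz) * 83492791u;
    h ^= h >> 13;
    h *= 0x5bd1e995u;
    h ^= h >> 15;
    return (h % 1000u) / 1000.0;
}

// Density seen at a position: constant inside a cube, and free to jump
// abruptly at a cube face, which is what would make the edges visible.
double densityAt(double x, double y, double z) {
    return cubeDensity(cubeIndex(x), cubeIndex(y), cubeIndex(z));
}
```

Two points 140 LY apart inside one cube get the same density, while two points a fraction of a light year apart on either side of a face can get unrelated ones.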

You can probably find the answer to that in one of the (many) previous discussions of the subject, such as

forums.frontier.co.uk

Borders between Codex regions seem to also be borders between different background star fields

I have recently been traveling around the border point of Sanguineous Rim, Elysian Shore and Kepler's Crest. I have noticed this weird thing when I look at space towards the center of the galaxy and away from it: The background star field is looking nice on the side of Sanguineous Rim, but...

> Couldn't this be alleviated in the algorithm that creates the skybox?

Maybe in theory, but given the vast variety of scenarios (densities, distances, angles) and graphics settings that could intersect, finding an algorithm that works well for all of them [1] could be tricky, and the current one works fine already for most of the galaxy.

[1] At the higher end of "stars visible" settings, the algorithm would have to actively choose perfectly visible stars to hide in some cases, which is probably even more error-prone than merely being selective about which ones get included in the N-thousands for display.

> Couldn't this be alleviated in the algorithm that creates the skybox?

Well yes, but then you could never be sure what you are seeing in the skybox is actually there! For instance, that tiny nebula, planetary or otherwise, you just spotted in the skybox and decided on a whim to fly to: is it really there, or is it just an artifact created by the algorithm that smooths out boxel edges? You could never know for sure, and could spend ages looking for it and never find it. At least at the moment, if you do spot something on the skybox and decide to fly to it, you know it is actually there. That I think is one of the main arguments: what you are seeing in the galaxy around you is what is actually there. Start playing with the skybox and next thing you know we have the NMS skybox... not that those skies aren't perfectly fine for that game, but this isn't NMS.

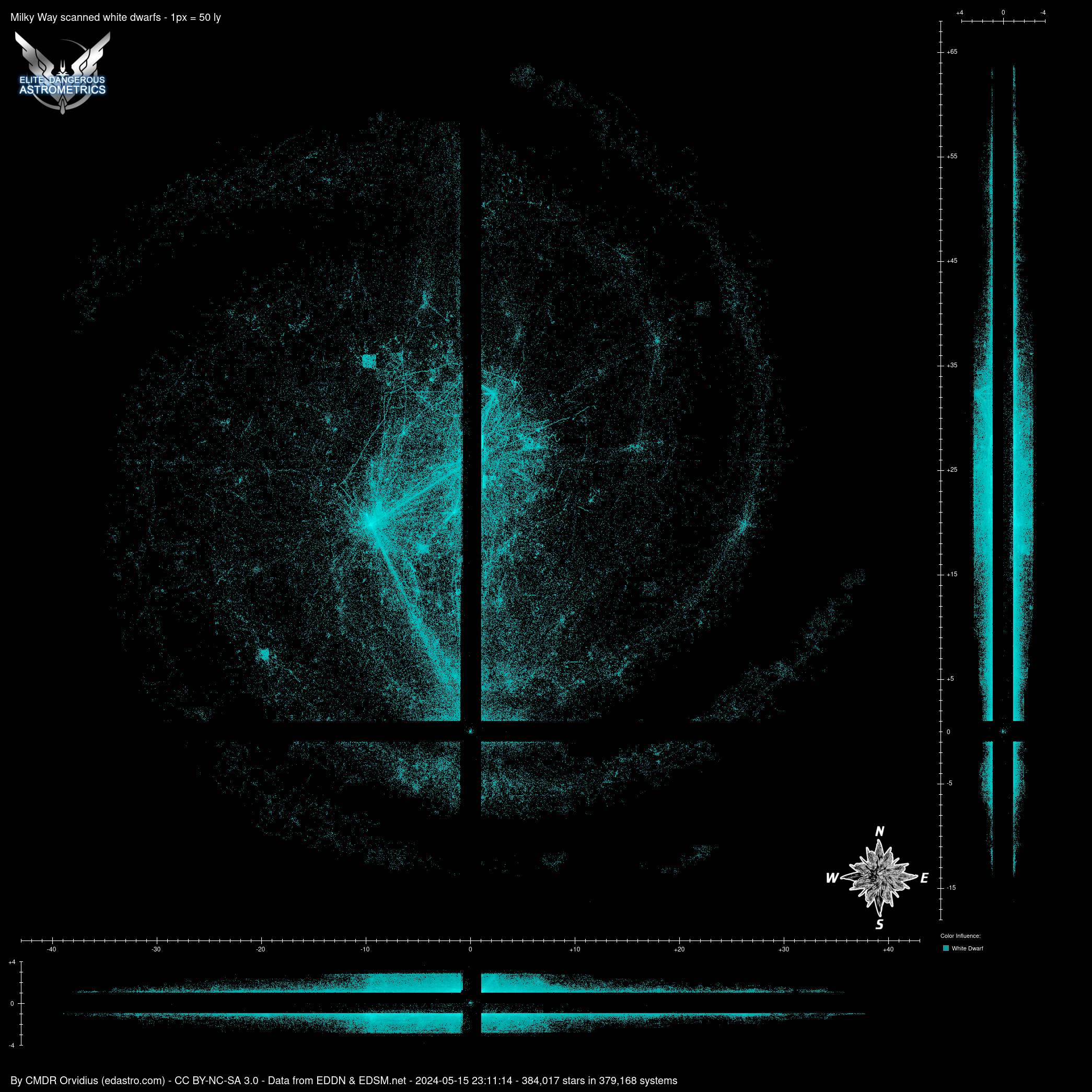

What's with that dark band? Did a supermassive black hole pass through?

Yeah, there were some areas where FDEV didn't want BH and Neutron stars, and somewhere it got messed up, so we ended up with the BH/N exclusion zone cross, which was never supposed to exist. But by that time it was too late to change the entire galaxy.

Yeah, as best we can tell, they wanted to suppress the normal rules for procedurally generated supernova remnants specifically in the region around the bubble. But it looks like someone mixed up their ORs and ANDs in the logic, so we get these bands in all three dimensions extending out from the bubble. It's super visible on the Supernova-remnants and White dwarf maps:

That's what happens when there's no proper unit-testing...

To be fair, there are a lot of stars in the galaxy, and the exclusion cross would certainly not have been noticeable in any ordinary examination of the galaxy. It appears clearly now because we, as pilots, have visited enough of them for it to be clearly visible when you visualise the galaxy using star class filters. So unless FDEV generated maps of the galaxy based on star class and examined them for such peculiarities, there's every chance they never even knew it existed for a long time early in the game, until us pesky pilots started generating our stats of systems explored. They were probably as surprised as us!

> That's what happens when there's no proper unit-testing...

Not really. Catching that sort of bug at the unit test phase would require some very particular structuring of the code which happens to place the suppression cross into its own testable unit, rather than just being part of a larger calculation. That sort of structuring may be at odds with the requirement for extreme efficiency - it needs to be able to generate at least basic positional and primary star data for tens of thousands of systems a second.

Code:

if (inSuppressionCross(x,y,z)) { ... }

Code:

if (abs(x) <= 1 || abs(z) <= 1) { ... }

Unit tests are a specific thing, not just a synonym for "automated tests" or "regression tests", and I would guess that Elite Dangerous generally has pretty good unit tests, given the sorts of bugs it doesn't have. (Other sorts of test? Sure, those it needs more of, though the Stellar Forge specifically is probably fine: for all the "how did you miss that?" bugs Elite Dangerous has had over the years, entire star systems disappearing or moving has never been one of them, even when they've made in-system changes to it to remove broken orbits or fix Herbig rotation or similar.)
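To make the suspected OR/AND mix-up concrete, here is a minimal sketch that assumes the intent was to suppress generation inside a small cube around the origin. The function names, the half-width of 1 and the use of all three axes are assumptions for illustration; the real code and its units are not public.

```cpp
#include <cstdlib>

// Hypothetical half-width of the suppressed region, in arbitrary grid units.
constexpr int kHalfWidth = 1;

// Assumed intent: suppress only where ALL three coordinates are in range,
// which describes a cube centred on the origin.
bool inSuppressionCube(int x, int y, int z) {
    return std::abs(x) <= kHalfWidth
        && std::abs(y) <= kHalfWidth
        && std::abs(z) <= kHalfWidth;
}

// Suspected bug: with || a single in-range coordinate is enough, so the
// region becomes three slabs running right across the galaxy, which would
// look like a cross when plotted.
bool inSuppressionCross(int x, int y, int z) {
    return std::abs(x) <= kHalfWidth
        || std::abs(y) <= kHalfWidth
        || std::abs(z) <= kHalfWidth;
}
```

A point such as (0, 0, 5000) is far outside the cube but still inside the cross, which matches the bands described earlier in the thread.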

> To be fair, there are a lot of stars in the galaxy, and the exclusion cross would certainly not have been noticeable in any ordinary examination of the galaxy.

And until 2.2 introduced neutron boosting it had very minimal gameplay effect, too - so what if a sector is a bit low on procedural hypergiants?

> Not really. Catching that sort of bug at the unit test phase would require some very particular structuring of the code which happens to place the suppression cross into its own testable unit, rather than just being part of a larger calculation. That sort of structuring may be at odds with the requirement for extreme efficiency - it needs to be able to generate at least basic positional and primary star data for tens of thousands of systems a second.

TDD style unit-testing precisely guides software development in such a direction that smaller units can be independently tested. This often (although admittedly not always) leads not only to more correct code, but also to better and more modular code which doesn't have gigantic functions full of spaghetti code.

Unit-testing would have very easily and quickly caught such a simple bug like using "or" instead of "and" in such a check.

I don't know which language the galaxy generator is written in, but I would assume it's probably C++. If it is, then subdividing the code into functions for this purpose does not necessarily incur any efficiency penalty (because short functions that should be as efficient as possible can be declared inline). (That being said, somehow I doubt that this check is in an especially time-critical part of the code. Could be, but it doesn't sound to me like it would have to be.)

> TDD style unit-testing precisely guides software development in such a direction that smaller units can be independently tested

Sure, in theory, if you're starting from a clean baseline. It's not possible to retrofit that to an existing large project in any reasonable time.

Extracting every single-use conditional clause into its own function so that it can be independently unit-tested, then writing a set of unit tests for each of those conditionals, then duplicating enough of the purpose of those unit tests on the original function to make sure you didn't do something like get the if{} and else{} blocks the wrong way round, or call the inSuppressionCross() function with the parameters in the wrong order ... is a huge amount of overhead. And sure, it guarantees that changes won't break the code, but unless you're in the safety-critical business where it's fine to take ten times as long to develop in the first place, it does that by guaranteeing the code never gets to the 1.0 stage in the first place.

Spending that much effort to prevent a bug which players hardly noticed for years, has minimal user-facing impact, and takes statistical analysis of the results of thousands of players' exploration trips to discover the specifics of beyond "that was an oddly boring G-masscode system"? That's not remotely cost-effective for games development.

> Unit-testing would have very easily and quickly caught such a simple bug like using "or" instead of "and" in such a check.

Given a sufficiently comprehensive test suite, yes. Since you can't write the tests only to catch the bugs you're going to implement, though, that needs to be pretty comprehensive. We've got a function that takes three input parameters (system coordinates) and has particular behaviour if those coordinates fall within a pre-defined cube.

So, that needs a minimum of four tests per dimension (one just either side of each boundary), to check that the faces are in the right place. That would be sufficient to catch the suppression cube bug, but you also want to check that you haven't accidentally implemented a suppression checkerboard, where (2,0) and (0,2) behave correctly, but (2,2) doesn't, so that needs another set of tests on the other 20 regions which don't have two of three zero-coordinates. 32 tests so far. Then you might want to test that there's not any inappropriate type conversion that might lead to integer overflows (so 0,0 and 0,1 are fine, but 0,32768 is broken again) which means a few more tests at greater distances, though you probably can get away with just doing two in each direction here.

So that's at least 38 unit tests to guarantee that one short inlinable bit of code is working to the spec, and I haven't covered every possible way it might not work yet.
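The first twelve of those tests (one point just either side of each face) can be sketched as a small table-driven check. Everything here is hypothetical: the half-width of 1, the names, and the two candidate implementations, which stand in for the intended cube and the suspected "or" bug.

```cpp
#include <cstdlib>
#include <vector>

constexpr int kHalfWidth = 1;  // hypothetical half-width of the cube

struct Probe { int x, y, z; bool expected; };
using Check = bool (*)(int, int, int);

// Candidate implementation using AND (assumed to be the intended spec).
bool cubeCheck(int x, int y, int z) {
    return std::abs(x) <= kHalfWidth && std::abs(y) <= kHalfWidth
        && std::abs(z) <= kHalfWidth;
}

// Candidate implementation using OR (the suspected bug).
bool orCheck(int x, int y, int z) {
    return std::abs(x) <= kHalfWidth || std::abs(y) <= kHalfWidth
        || std::abs(z) <= kHalfWidth;
}

// Four probes per axis: just inside and just outside each of the two
// faces, with the other two coordinates held at zero.
std::vector<Probe> faceProbes() {
    std::vector<Probe> probes;
    for (int axis = 0; axis < 3; ++axis) {
        for (int sign : {-1, 1}) {
            int inside[3] = {0, 0, 0};
            int outside[3] = {0, 0, 0};
            inside[axis] = sign * kHalfWidth;
            outside[axis] = sign * (kHalfWidth + 1);
            probes.push_back({inside[0], inside[1], inside[2], true});
            probes.push_back({outside[0], outside[1], outside[2], false});
        }
    }
    return probes;
}

// Number of probes on which an implementation disagrees with the spec.
int countFailures(Check check) {
    int failures = 0;
    for (const Probe& p : faceProbes()) {
        if (check(p.x, p.y, p.z) != p.expected) ++failures;
    }
    return failures;
}
```

Under these assumptions the AND version passes all twelve probes, while the OR version fails every "just outside" probe, so the face tests alone would flag it. The checkerboard and overflow cases discussed above would still need their own probes.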

What are the chances that someone who gets that short bit of code wrong has written all 38 unit tests correctly?

How long, once that's all dealt with and this is all assembled into a full application, will it take to amend the code and correctly amend all unit tests so that when the internal playtest says "sorry, I can still see a bunch of imaginary bright stars from Sol, the suppression cube needs to be bigger", not only are the X/Y/Z boundaries changed in the code, but also it's correctly determined which unit tests failed because they're now testing against the wrong spec, and which ones failed because the code change was incorrect to either old or new spec? (And that's not just those 38 unit tests, but also some of the tests for the calling-site function, and whatever calls that, and so on, because they'll happen to also be using parameters which now don't work)

That's a lot of work for one line of functional code.

I like automated testing, I use it a lot myself, I probably spend at least as long on writing tests as writing the code. But my employers aren't going to pay for that even greater level of assurance any more than I'd pay £500 for Elite Dangerous 1.0 when it releases next year just to have the "no bugs" alternate timeline version.

> That being said, somehow I doubt that this check is in an especially time-critical part of the code

Without knowing the fine details it's hard to say, but "what is the primary star class of this system" (which is what the suppression cross very visibly affects) is probably something which needs to be calculated extremely quickly for map display, routing and skybox generation.

> What are the chances that someone who gets that short bit of code wrong has written all 38 unit tests correctly?

In good TDD, unit tests work as a sort of specification for the function being tested. That means that unit tests check that the function returns the correct values for all possible different cases. For example, if the function should return true for x,y values between -2 and 2, else false, you write unit tests that check all the possible combinations of those parameters being between or outside that range. It doesn't matter if that means that 38 or 105 unit tests have to be written, the unit tests should check all the combinations to see that the function returns the correct value for all of them.
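For the -2 to 2 example, "all the possible combinations" can be read as one representative value per class (below, inside, above) for each parameter, giving nine cases. The sketch below takes that reading; the function names and the representative values are illustrative choices, and a fuller suite would also probe the exact boundaries.

```cpp
// Function under test: true only when both x and y lie in [-2, 2].
bool inSquare(int x, int y) {
    return -2 <= x && x <= 2 && -2 <= y && y <= 2;
}

// Deliberately wrong variant using OR, for comparison.
bool inSquareBuggy(int x, int y) {
    return (-2 <= x && x <= 2) || (-2 <= y && y <= 2);
}

// Runs all 3 x 3 class combinations and returns how many disagree with
// the spec. Only the (inside, inside) combination should return true.
int countSpecMismatches(bool (*fn)(int, int)) {
    const int representatives[3] = {-3, 0, 3};  // below, inside, above
    int mismatches = 0;
    for (int xi = 0; xi < 3; ++xi) {
        for (int yi = 0; yi < 3; ++yi) {
            const bool expected = (xi == 1 && yi == 1);
            if (fn(representatives[xi], representatives[yi]) != expected) {
                ++mismatches;
            }
        }
    }
    return mismatches;
}
```

The correct version produces no mismatches. The OR variant is caught by the four cases where exactly one of the two parameters is in range.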

Of course unit tests should be paired with code coverage. In other words, good unit tests should aim for 100% code coverage (and, optimally, 100% branch coverage.)