Yeah, I very often use 1080p to get high fps, but always with maxed settings! In this case I'm using SS 2.0, but the game also runs badly with all the settings turned down. My 3090 has no chance of handling the game well...

A 3090 should be destroying this game at 4K, to be honest. Do people still use 1080p? It's tiny.

When can we expect performance optimizations?

- Thread starter Bortas

All I'm pointing out is that the scientific evidence seems to suggest that human visual performance runs out at 75hz.

There's nothing in the page you linked suggesting that it runs out at 75 Hz. It has a link to a paper describing an experiment the method of which was "two groups of participants viewed an RSVP sequence of six pictures presented for 13, 27, 53, or 80 ms per picture and tried to detect a target specified by a written name".

Which means they didn't even go any higher than 75 Hz in that experiment.
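To make the point concrete: a picture shown for a fixed duration corresponds to an equivalent rate of 1000 / duration (in ms) pictures per second. The short sketch below just does that conversion for the four durations quoted from the study. It is plain arithmetic and says nothing about what the participants could or could not perceive.

```python
# Convert the per-picture durations from the RSVP experiment quoted above
# into an equivalent "pictures per second" rate (1000 ms / duration).
DURATIONS_MS = [13, 27, 53, 80]


def equivalent_rate_hz(duration_ms: float) -> float:
    """Rate at which pictures would change if each one lasted duration_ms."""
    return 1000.0 / duration_ms


if __name__ == "__main__":
    for d in DURATIONS_MS:
        print(f"{d:>2} ms per picture ~ {equivalent_rate_hz(d):5.1f} per second")
```

The shortest condition, 13 ms, works out to about 77 pictures per second, which is roughly one refresh of a 75 Hz display (1000 / 75 ≈ 13.3 ms). Nothing faster than that was tested.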

Then again, there's much more to high fps gaming than what they were looking at in this study.

There is so much misinformation and irrelevant information (performance-wise) that I would not know where to begin correcting it.

Very wise questions by Kantaris. In my case I had issues using an old 7,200 rpm HDD, so I moved ED Odyssey to an M.2 PCIe SSD and kept the other copy, ED Horizons, only on the HDD. Both are working great now.

ED Horizons has around 25 GB of files and the new ED Odyssey has more than 50 GB (the game folder with both versions goes over 75 GB)... so it's all about texture file loading issues when using an HDD or an old SSD...

Also, if you have two disks or volumes, you can format both and create a RAID 0 so that loading times will be incredible...
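For anyone wondering why RAID 0 speeds up loading: striping splits the volume into fixed-size chunks and deals them out to the disks in turn, so a long sequential read pulls from every disk at once. The sketch below is a simplified textbook model of that address mapping. The stripe size and disk count are hypothetical parameters, and real implementations (motherboard RAID, Windows striped volumes) add their own metadata and caching on top.

```python
# Simplified RAID 0 (striping) address mapping: which disk holds a given
# logical block, and where on that disk it lives. Illustrative model only.


def raid0_location(lba: int, disks: int, stripe_blocks: int) -> tuple[int, int]:
    """Map a logical block address to (disk index, block offset on that disk).

    lba           -- logical block number on the combined RAID 0 volume
    disks         -- number of member disks (hypothetical, e.g. 2)
    stripe_blocks -- blocks per stripe chunk (hypothetical, e.g. 128)
    """
    stripe = lba // stripe_blocks          # which chunk the block falls in
    disk = stripe % disks                  # chunks rotate across the disks
    row = stripe // disks                  # full rounds of chunks before this one
    offset = row * stripe_blocks + lba % stripe_blocks
    return disk, offset


if __name__ == "__main__":
    # With 2 disks and 4-block chunks, consecutive chunks alternate disks,
    # so reading blocks 0..15 keeps both drives busy at the same time.
    for lba in range(0, 16, 4):
        print(lba, raid0_location(lba, disks=2, stripe_blocks=4))
```

The flip side of the same mapping is that every file ends up spread across all member disks, so losing any one disk loses the whole volume.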

If the Elite Dangerous game folder is on a disk with another 2 terabytes of games (Steam/Epic/etc.), don't expect the best performance, because the FAT (File Allocation Table) of that disk is completely flooded with the files of all those games...

If you want the best performance, put ED on a dedicated disk or volume. (I'm not saying you should leave all the free space unused; just go to Disk Management under Computer Management and create a dedicated volume for Elite, which will create a dedicated FAT for ED.)

Also, the file system (NTFS, exFAT, ReFS) and the cluster size matter... I still don't know what the best cluster size is, but the average size of the game files is about 1 MB, while the NTFS default cluster size on most volumes is 4 KB (64 KB is an option you can pick when formatting)... Also, NTFS is very old and not optimized for SSDs like ReFS or exFAT.
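A rough way to see what cluster size changes: a file always occupies a whole number of clusters, so larger clusters mean fewer clusters per file to keep track of but more wasted space (slack) in the last, partly filled cluster. The sketch below does that arithmetic for a hypothetical file of roughly 1 MB. It only models allocation, not the actual read speed of an SSD, which depends on much more than this.

```python
# Cluster allocation arithmetic: how many clusters a file needs and how
# much space is wasted in the last, partially filled cluster ("slack").


def clusters_needed(file_size: int, cluster_size: int) -> int:
    """Whole clusters required to hold file_size bytes (ceiling division)."""
    return -(-file_size // cluster_size)


def allocated_bytes(file_size: int, cluster_size: int) -> int:
    """Bytes actually reserved on disk for the file."""
    return clusters_needed(file_size, cluster_size) * cluster_size


def slack_bytes(file_size: int, cluster_size: int) -> int:
    """Reserved but unused bytes in the file's last cluster."""
    return allocated_bytes(file_size, cluster_size) - file_size


if __name__ == "__main__":
    file_size = 1_000_000  # hypothetical game file of roughly 1 MB
    for cluster_kib in (4, 64):
        cluster = cluster_kib * 1024
        print(
            f"{cluster_kib:>2} KiB clusters: "
            f"{clusters_needed(file_size, cluster)} clusters, "
            f"{slack_bytes(file_size, cluster)} bytes of slack"
        )
```

For a 1,000,000-byte file that is 245 clusters at 4 KiB versus 16 clusters at 64 KiB, at the cost of up to one cluster of slack per file. Whether that difference shows up in load times is a separate question.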

And don't forget to check the basics:

- Turn on Windows Game Mode

- Go to Control Panel > Hardware and Sound > Power Options, select High Performance, and make sure Windows and the GPU driver are up to date.

- Run CCleaner and remove temporary files

I have a very old CPU that only meets the minimum requirement, but I've got decent RAM and a decent GPU, plus a cheap, small M.2 SSD that made all the difference for Odyssey.

After all these checks, lower your game quality and/or resolution; that will also help while Frontier works on further improvements.

tl;dr: use an SSD, update drivers from the vendor's home page, and close all other applications running in the background.

All I'm pointing out is that the scientific evidence seems to suggest that human visual performance runs out at 75hz.

Not in a whole slew of relevant metrics it doesn't.

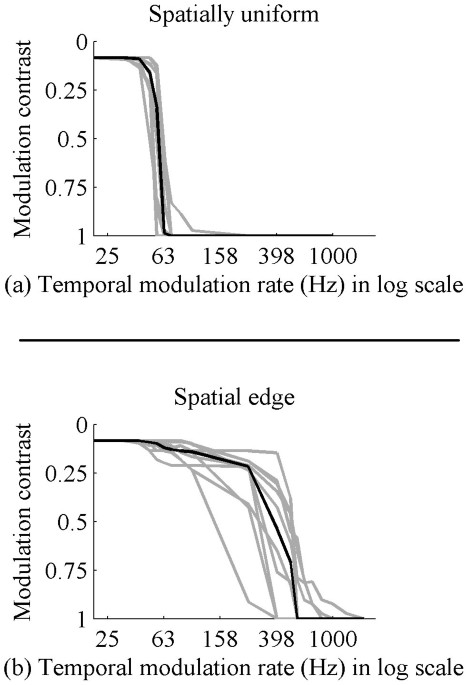

When it comes to flicker, the recent consensus is somewhere between 500 and 10,000 Hz, depending on the individual and the type of lighting, before stroboscopic effects become wholly imperceptible:

Humans perceive flicker artifacts at 500 Hz - Scientific Reports

To eliminate the perception of motion blur on sample and hold displays, ~1000Hz will probably do the trick:

Test Results: 4K 120 Hz Display with bonus 1080p 240 Hz & 540p 480 Hz Modes - Blur Busters
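The reasoning behind the ~1000 Hz figure is usually given as a rule of thumb popularized by Blur Busters: on a sample-and-hold display, each frame stays lit for the whole refresh interval while your eyes keep tracking the moving object, so the image smears across roughly (tracking speed) × (time each frame is held). The sketch below assumes full persistence (no strobing or black frame insertion), frame rate equal to refresh rate, instant pixel response, and a hypothetical panning speed of 1000 pixels per second.

```python
# Rule-of-thumb motion blur on a sample-and-hold display:
# blur (pixels) ~= eye-tracking speed (pixels/s) * persistence (s),
# where persistence is 1 / refresh rate when each frame is held for the
# full refresh interval (no strobing, instant pixel response assumed).


def persistence_blur_px(speed_px_per_s: float, refresh_hz: float) -> float:
    """Approximate smear width in pixels for full-persistence frames."""
    persistence_s = 1.0 / refresh_hz
    return speed_px_per_s * persistence_s


if __name__ == "__main__":
    speed = 1000.0  # hypothetical on-screen motion: 1000 pixels per second
    for hz in (60, 144, 360, 1000):
        print(f"{hz:>4} Hz: ~{persistence_blur_px(speed, hz):.1f} px of smear")
```

At 1000 px/s that is about 16.7 px of smear at 60 Hz but only about 1 px at 1000 Hz, which is the kind of reasoning behind the estimate; faster motion pushes the required rate higher still.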

To knock out the conscious perception of input latency, a mere 100-200Hz seems to suffice:

Relating Scene-Motion Thresholds to Latency Thresholds for Head-Mounted Displays - PMC

Identifying complex silhouettes (friend-or-foe identification of aircraft shapes on a tachistoscope) can be done with exposures as short as 1/250th of a second, and motion information can be extracted up to the same rate at which blur becomes imperceptible.
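One practical consequence of a 1/250 s figure: an ordinary sample-and-hold display cannot show an image for less than one refresh interval, so the refresh rate puts a floor under how brief a flash it can present. The sketch below checks which common refresh rates could present a 4 ms exposure. It deliberately ignores strobed backlights and black frame insertion, which can produce shorter pulses.

```python
# Can a display present a flash as brief as a given exposure?
# On a plain sample-and-hold panel the shortest visible image lasts one
# refresh interval (1000 / refresh_hz milliseconds).

EXPOSURE_MS = 1000 / 250  # 1/250 s = 4.0 ms


def refresh_interval_ms(refresh_hz: float) -> float:
    """Duration of one refresh interval in milliseconds."""
    return 1000.0 / refresh_hz


def can_present(exposure_ms: float, refresh_hz: float) -> bool:
    """True if one refresh interval is no longer than the exposure."""
    return refresh_interval_ms(refresh_hz) <= exposure_ms


if __name__ == "__main__":
    for hz in (60, 75, 144, 240, 250, 360):
        print(f"{hz:>3} Hz: interval {refresh_interval_ms(hz):5.2f} ms, "
              f"4 ms flash possible: {can_present(EXPOSURE_MS, hz)}")
```

A 75 Hz panel's shortest image lasts about 13.3 ms, more than three times longer than the 4 ms exposure.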

If all you're going for is making motion smooth enough so most individuals cannot pick out individual frames, then we can get away with about 20-25Hz. That's not a very playable frame rate for most people.

Personally, CRT flicker doesn't become entirely comfortable for me until about 120Hz, and I can consciously perceive flicker artifacts on old LCDs with PWM backlights at least twice that frequency. I can and will play some games at ~30 fps. However, I can easily pick out the difference between 75 fps and 100, 100 and 144, or 144 and 300. There are certainly diminishing returns, but if the gap in fps is large enough, all other things being equal, the absolute frame rate has to be enormous for me not to be able to tell them apart. Based on the scientific evidence, I probably wouldn't be able to tell 1000 from 2000 fps, but since I've never seen a display faster than 360Hz in person, let alone had a blind test on one, I can't say for certain where my upper limit is.
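The diminishing returns described here are easier to see in frame time (milliseconds per frame) than in frames per second. The sketch below converts the pairs mentioned in the post; it is only the 1000 / fps arithmetic and makes no claim about where any individual's threshold lies.

```python
# Frame time (ms per frame) for the frame-rate pairs compared above,
# and how many milliseconds each step actually saves.
PAIRS = [(75, 100), (100, 144), (144, 300), (1000, 2000)]


def frame_time_ms(fps: float) -> float:
    """Milliseconds each frame is on screen at a given frame rate."""
    return 1000.0 / fps


if __name__ == "__main__":
    for low, high in PAIRS:
        saved = frame_time_ms(low) - frame_time_ms(high)
        print(f"{low:>4} -> {high:>4} fps: "
              f"{frame_time_ms(low):6.2f} -> {frame_time_ms(high):6.2f} ms "
              f"(saves {saved:.2f} ms)")
```

Each of the first three steps shaves roughly 3 to 3.6 ms off every frame, while going from 1000 to 2000 fps saves only 0.5 ms, which lines up with the guess that the top end would be hard to tell apart.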

That "last decibel" equivalent here doesn't start at 75Hz; it starts closer to 500.

It's likely the first thing they address.

All I'm pointing out is that the scientific evidence seems to suggest that human visual performance runs out at 75hz.

That is not entirely true.

So, let's go for some actual science.

First off, we need to differentiate between the speed of CONSCIOUS PROCESSING of a seen image and the information transferred to the brain.

Conscious processing is NOT required to trigger a reaction from the muscles and the body; muscle memory is direct proof of that (along with a plethora of other behaviours, such as the pupils widening due to fear).

According to new studies, conscious processing happens at a top "speed" of about 13 milliseconds, so, as you said, roughly 75 frames per second. But that has nothing to do with reacting to an image: reaction is not a start-stop process, and the test measured the time needed to process an entire image. Information is not processed in batches but constantly, and in order of priority. Light information is processed first; that's evolutionary. Movement- and threat-related information comes next, then shapes, then colors, then depth/distance, then everything else. So as long as the data flows, the image is being processed. The initial trigger of the neural reaction is around 13ms, yes*, but after that the information sent to the muscles does not need that gap, as it is constantly fed and updated as fast as the

*Also, in the test that produced the 75Hz figure they were using... 75Hz screens. So I'll let you decide what that implies.

Information that reaches the brain at a higher rate (for humans that's about 8.75 megabits of data per second) lets the brain evaluate important changes (like movement) far more selectively. The eye also has rods and cones, and the rods specialize in detecting changes in light (evolutionary again), which helps detect movement much better and faster than cones do. Rods get priority when something changes, because they relay critical rather than supplementary information, so the brain processes rod information first.

Once the brain is accustomed to "movement means danger", even when the movement comes not from a potential predator but from a guy with a gun on the screen, it reacts just as it would to a predator. That speeds up the process, because the data gets far more priority than it would if you were just watching Rick and Morty and not doing anything that generates adrenaline.

More science: Nerves performance up to 1000Hz

Even more science: Bandwidth of the Eye

The above strongly suggests that 75Hz is nowhere near the limit (the same goes for the old USAF eye-speed test, which showed pilots reacting to an image flashed for just 1/220 of a second and being able to identify it as a plane).

And for the layman: go to a TV store, tell them you want to buy a TV with full insurance, but you need a 60Hz telly and a 100Hz telly next to each other showing a moving image. Compare. The human eye can't see past 60, right?

Not to mention that after you get comfortable (or more precisely your brain gets accustomed to the certain input speed) going down is actually a VERY unpleasant and annoying experience.

This is highly dependent on how motion blur is applied to images (and on the content itself). That applies to the images themselves as well as to the display. An OLED display, for instance, has an extremely short pixel response time and so induces very little blur (a 120Hz OLED panel is on a similar level to a 360Hz TN panel). This causes images, especially ones without motion blur, to be perceived as juddery up to a higher frame rate than on a regular non-OLED display.

If all you're going for is making motion smooth enough so most individuals cannot pick out individual frames, then we can get away with about 20-25Hz. That's not a very playable frame rate for most people.

I have a modest system: 1660 Ti / i7 6700K / 32 GB of DDR4, with a 1080p 60Hz monitor and fps capped at 60.

Horizons: 60 fps on Ultra all day at 1080p.

Odyssey: Ultra with the terrain slider at half and low shadows. 45-60 fps fluctuating in space stations, 30-50 in settlements, 30 when looking at fire, 50-60 on planet surfaces, and a constant 60 in space and ship combat.

Pretty happy with the performance for an alpha release, but I have a feeling that with optimizations over the next few months (culling?) my on-foot fps will be back up to 60 and I can put shadows back up.

I saw the Horizons launch bog down my GTX 970, but performance really increased after a couple of years, and by the end of Horizons' run it ran fluidly for me.

Given time, I have no doubt Oddy will be better. Stop thinking of it as a normal release and start looking at it as an alpha/beta. It's a long road.

Just gotta wait until console!

That is not entirely true.

So, let's go for some actual science.

I suppose we could get into quoting multiple websites (try googling "maximum framerate for human eye"; there are plenty), but I can't be bothered to fill this reply up with them.

I'm going to maintain that 75Hz, from the data I've seen on the internet, is around the limit (give or take) at which people can see any flicker.

And of course, your brain can't process images at 75Hz; that rate is much lower.

And of course, your brain can't process images at 75Hz; that rate is much lower.

Even the very article you yourself linked suggests otherwise.

Edit: but go enjoy 75 fps gaming in 2021 all you want ofc

What I find hilarious is people with RTX 3000-series GPUs saying "performance is great" while they get frame drops into the 50s at a mere 1080p.

Even a 3070 should be DESTROYING this game at 1080p, never mind a 3080 or 3090. And yet we have people with these cards only just staying above a mere 60fps at 1080p saying "runs great!".

This^^

Those cards are meant for running modern titles at 1440p and above at high frame rates; in this 7-year-old title they should be doing 4K 144Hz all day long, even with the new planetary tech.

I gave you actual, scientific-paper-backed info; you are quoting pop science that comes first in a Google search.

I suppose we could get into quoting multiple websites (try googling "maximum framerate for human eye"; there are plenty), but I can't be bothered to fill this reply up with them.

You need to be more selective in your research; the first article that supports your thesis is most likely the one that was clicked the most, not one written by better minds.

from the data I've seen on the internet

You understood (or tried to process) nothing from my post. I admit I wasn't expecting that you would, but I gave you the benefit of the doubt.

And of course, your brain can't process images at 75Hz; that rate is much lower.

You can maintain whatever you wish, that's your undeniable right.

I remember playing Elite on a Q9500 with a GTX 550 Ti and some dumpster-find DDR2 RAM.

Back then I was happily in my apartment without a care in the world.

Now I have a house, wife, kids and a career.

Am I getting old?

Am I expecting too much?

Should I buy another new €2000 gaming PC? No, I'm going to redo the driveway.

I spent my last personal savings on a 1080 Ti, and it's showing its age: I have to downclock it to keep it from crashing. If it goes, it goes, and then it's game over. Period.

Exactly. Asking anyone to throw down a ton of dough in this horrid GPU market is simply expecting consumers to act irrationally.

For example, last week, my second kid graduated high school, and my oldest returned home for the summer from college.

So, I bought another car... that was higher on my priority list than upgrading my GPU to compensate for unoptimized graphics in a space flight simulator.

Horizons ran GREAT for me. I decided to buy Odyssey because I assumed it would run roughly the same. I expected a small frame rate hit, not what I'm seeing now.

You're running a very low resolution; that's why you have such high FPS. In Horizons you can get those FPS while running 4K with a 1080 Ti, on top of various XML file tweaks to further improve graphics.
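The resolution point is mostly about pixel count: the GPU has to shade every rendered pixel, and 4K has exactly four times as many pixels as 1080p. The sketch also folds in a supersampling multiplier like the SS 2.0 mentioned at the top of the thread. It assumes the multiplier scales each axis separately (so SS 2.0 at 1080p would render 3840x2160); that is an assumption about how the in-game setting works, not something stated in the thread.

```python
# Rendered pixel counts relative to 1080p, optionally with a supersampling
# multiplier. ASSUMPTION: the multiplier scales width and height separately.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def rendered_pixels(width: int, height: int, ss_per_axis: float = 1.0) -> int:
    """Pixels actually rendered when each axis is scaled by ss_per_axis."""
    return round(width * ss_per_axis) * round(height * ss_per_axis)


if __name__ == "__main__":
    base = rendered_pixels(*RESOLUTIONS["1080p"])
    for name, (w, h) in RESOLUTIONS.items():
        px = rendered_pixels(w, h)
        print(f"{name:>5}: {px:>9,} pixels ({px / base:.2f}x 1080p)")
    ss = rendered_pixels(1920, 1080, ss_per_axis=2.0)
    print(f"1080p with SS 2.0: {ss:,} pixels ({ss / base:.2f}x 1080p)")
```

Under that assumption, 1080p with SS 2.0 costs about as much pixel work as native 4K.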

I see! Maybe I'm short on RAM, since I only have 16 GB at 3600 MHz. I know it can make a huge difference in a bunch of games, but I really don't know if it does in EDO.

You're close to my setup. I'm getting roughly 80 fps inside interiors and about 100-120 in exterior space. There are slight drops in fps when closing in on planets or in illuminated places, but in general it never drops below 80 fps. Specs:

- Gainward RTX 3070

- Intel i7-10700K

- G.Skill Ripjaws V, 32 GB @ 4000 MHz (overclocking needs to happen in the BIOS)

- 1 TB Samsung Evo 980 M.2 SSD

I'm using an 850 W power supply from Seasonic, I believe; I can't remember exactly, and I'm not at home. I'm also running with absolutely no case lighting or the additional nonsense you get in computers nowadays. The mainboard is an MSI Z-490 A-Pro. I specifically chose that mainboard because it has high specs but almost no connectors for USB, lighting and whatnot. Also: Windows 10 Pro with Game Mode turned on, running with the Steam and Nvidia overlays enabled, 6 additional third-party apps running, and my antivirus (G-Data) enabled.

Edit: Oh yeah, I obviously forgot the most important info. I'm running everything maxed out on Ultra, and I'm using a custom Nvidia profile that has everything maxed out, where reasonable, as well. The monitor is an HP 1920x1080 running at 144Hz. It has an AMD profile enabled, which, to my surprise, makes the monitor run better than any other profile, despite my having an Nvidia system.

Maybe I'll upgrade and keep you updated