Yeah, but I'm angry. My wife has been nagging me for a long time to install filters in case of lightning, and I always said, "We will be fine, everything is grounded." Now she looks at me and chuckles, and I'm like NOT A WORD, DON'T SAY A WORD.... When you are spiked, it's time for a completely new box.


Game Discussions Bethesda Softworks Starfield Space RPG

- Thread starter: Lngjohnsilver1

- Tags: space rpg

O Filterbaum, o Filterbaum, how smooth are your phase curves.

Competition is definitely a good thing, and most other things being equal, I'll favor the underdog's products.

Nepotism and unjustly inherited merit should be discouraged, but I'm only willing to go so far to personally subsidize corporate affirmative action. I'll support policies to increase competitiveness, but ultimately, I buy the right tool for the right job and try to spend as little as I can to meet my goals.

Yeah, current AM5 boards should support the 8000 series without issue.

Just don't spend too much on the board either; the arbitrary market segmentation here is getting insane. This goes for the whole motherboard industry in general, but is even more apparent on the AMD side, where none of their CPUs need a wild VRM to support their peak power consumption and where the flagship chipset is just another non-flagship chipset daisy chained to the first Promontory 21 part.

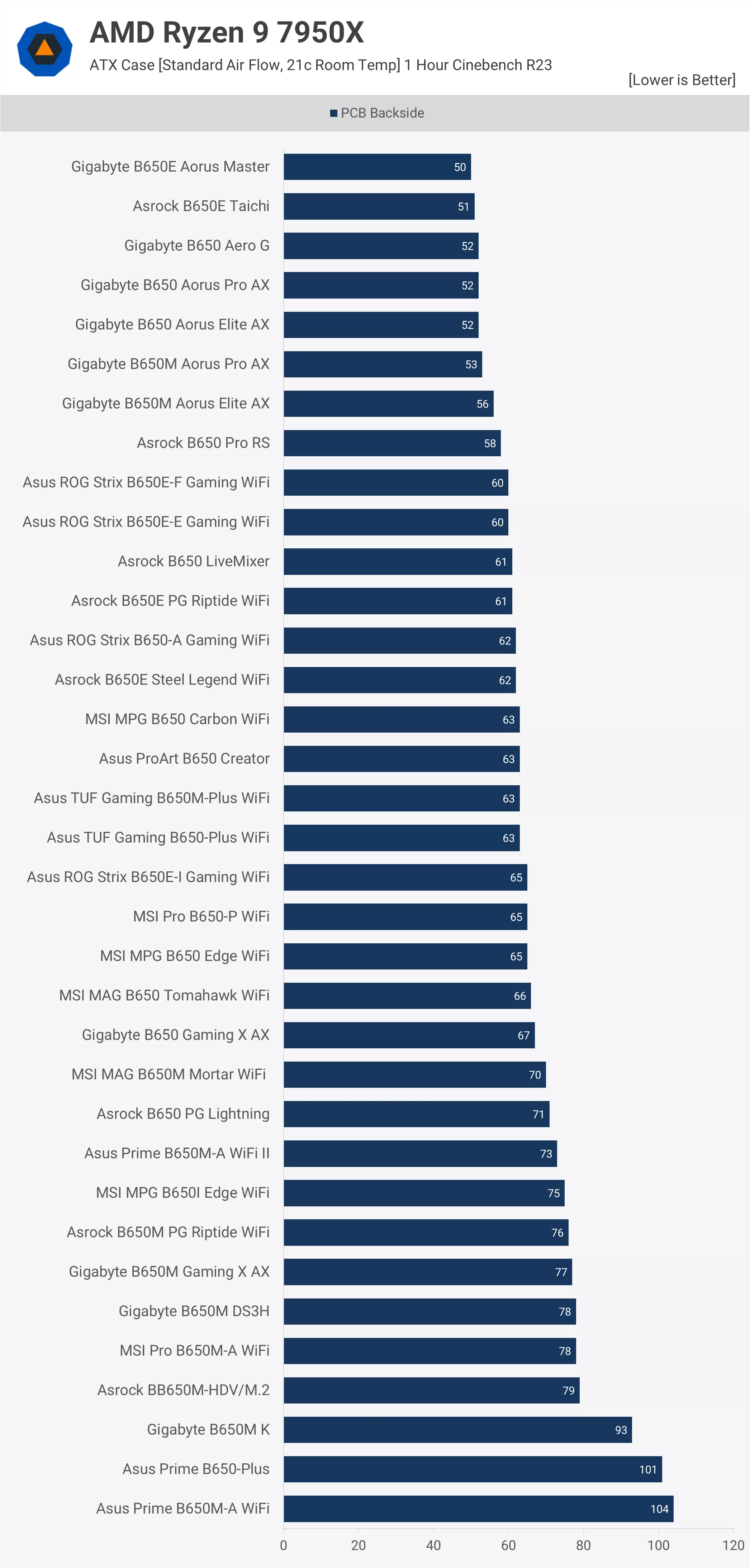

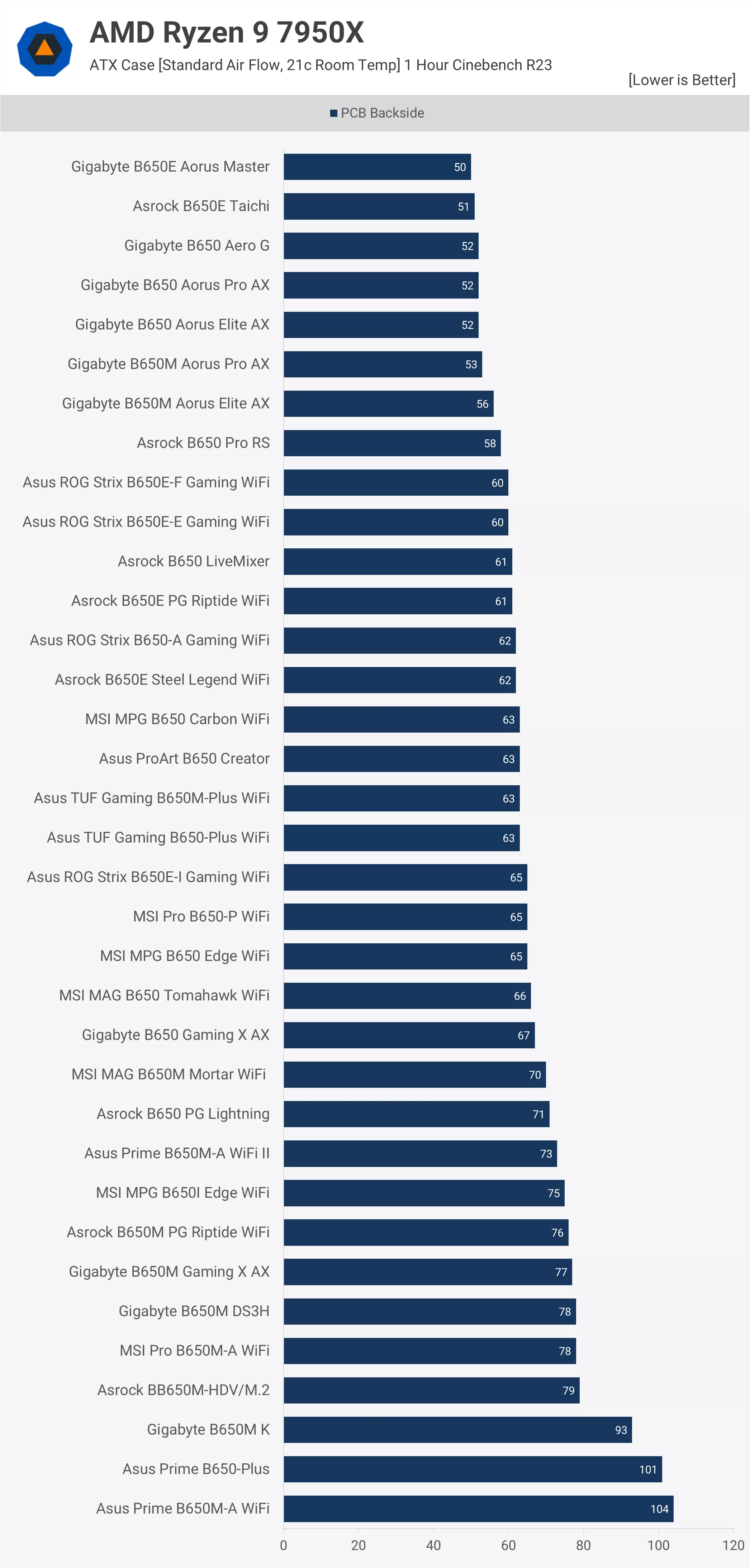

So, unless you need a bunch of I/O that is difficult to properly leverage, B650 is the chipset to get. A620 isn't much cheaper and mandates disabling far too many tuning features (artificial product segmentation), while X670 is senseless for most use cases. Likewise, most B650 boards, even in expensive ones, have the power delivery to handle any CPU you can install:

Of those 35 boards, only the ASUS Primes and the heavily cost cut Gigabyte B650M K had unacceptably high VRM temperatures when stressing the most power hungry AM5 CPU. Everything else ranges from entirely competent to total overkill, as far as VRM goes.

You can also save a bit by going with an mATX board, even if you have room for a full size board. Normally, the only thing you lose out on are a few slow expansion slots hanging off the chipset that will probably be blocked by the GPU anyway. North of that the boards are usually functionally identical.

www.techspot.com

www.techspot.com

If you want to spend a little more than the cost of the ASRock budget board I recommended earlier so you don't have to add your own wifi card, I'd probably recommend the Gigabyte B650M Aorus Elite AX for most people as Gigabyte tends to have the best POST times and the best performance without extensive manual tweaking. As can be seen from the review above, Gigabyte provides a couple of quick settings to tighten up certain memory timings (which almost any Hynix based memory should handle with ease) that makes a meaningful difference in memory performance. Of course, that advantage disappears with full manual tuning, but most people aren't capable or inclined here.

The Riptide is also fine, but defaults to an unusually low temperature limit as well as less optimal memory timings, so needs some more tweaking to reach parity.

On a side note, don't consider two DIMM slots to be a disadvantage over four. The second DIMM per channel pair is mostly token, at least for anyone who doesn't need more than 96GiB of system memory. Two DIMMs is easier on the memory controller while omitting the extra two slots entirely allows the remaining ones to have more optimized trace routing or get away with fewer PCB layers without much side effect.

The ground connection is there to keep you from getting electrocuted. Sensitive electronics still need surge suppressors, and not just to protect from lightning, but even cumulative wear from much less energetic surges that occur due to other loads on the circuit or the vagaries of power transmission.

Well, at least time to pull everything apart and test pieces individually to see what was damaged. Some things are more sensitive than others, and somethings are more isolated from the PSU. But doing so is a lot of work and everything has to be suspect until it thoroughly demonstrates otherwise.

Nepotism and unjustly inherited merit should be discouraged, but I'm only willing to go so far to personally subsidize corporate affirmative action. I'll support policies to increase competitiveness, but ultimately, I buy the right tool for the right job and try to spend as little as I can to meet my goals.

true, and as you explained my last build was over priced and I could have upgraded many times for the same cost, so not going to do that again, 8000 series CPU from AMD same with the GPU can run on the AM5 MOBO if I understood it right, so that's my aim, cpu/gpu can always be sold or reused in other projects if needed.

Yeah, current AM5 boards should support the 8000 series without issue.

Just don't spend too much on the board either; the arbitrary market segmentation here is getting insane. This goes for the whole motherboard industry in general, but is even more apparent on the AMD side, where none of their CPUs need a wild VRM to support their peak power consumption and where the flagship chipset is just another non-flagship chipset daisy chained to the first Promontory 21 part.

So, unless you need a bunch of I/O that is difficult to properly leverage, B650 is the chipset to get. A620 isn't much cheaper and mandates disabling far too many tuning features (artificial product segmentation), while X670 is senseless for most use cases. Likewise, most B650 boards, even inexpensive ones, have the power delivery to handle any CPU you can install:

Of those 35 boards, only the ASUS Primes and the heavily cost cut Gigabyte B650M K had unacceptably high VRM temperatures when stressing the most power hungry AM5 CPU. Everything else ranges from entirely competent to total overkill, as far as VRM goes.

You can also save a bit by going with an mATX board, even if you have room for a full size board. Normally, the only thing you lose out on are a few slow expansion slots hanging off the chipset that will probably be blocked by the GPU anyway. North of that the boards are usually functionally identical.

AMD B650 Motherboard Roundup: VRM Thermal Testing

For those wanting to jump on the AM5 platform while spending as little as possible, which affordable AMD B650 motherboards are the best? We'll be torture-testing eleven...

www.techspot.com


If you want to spend a little more than the cost of the ASRock budget board I recommended earlier so you don't have to add your own wifi card, I'd probably recommend the Gigabyte B650M Aorus Elite AX for most people, as Gigabyte tends to have the best POST times and the best performance without extensive manual tweaking. As can be seen from the review above, Gigabyte provides a couple of quick settings to tighten up certain memory timings (which almost any Hynix based memory should handle with ease) that make a meaningful difference in memory performance. Of course, that advantage disappears with full manual tuning, but most people aren't capable or inclined here.

The Riptide is also fine, but defaults to an unusually low temperature limit as well as less optimal memory timings, so needs some more tweaking to reach parity.

On a side note, don't consider two DIMM slots to be a disadvantage over four. The second DIMM per channel pair is mostly token, at least for anyone who doesn't need more than 96GiB of system memory. Two DIMMs are easier on the memory controller, while omitting the extra two slots entirely allows the remaining ones to have more optimized trace routing or get away with fewer PCB layers without much side effect.

Yeah, but I'm angry my wife has been nagging me for along time to install filters in case of lightning, and I always said We will be fine, everything is grounded

The ground connection is there to keep you from getting electrocuted. Sensitive electronics still need surge suppressors, and not just to protect from lightning, but even cumulative wear from much less energetic surges that occur due to other loads on the circuit or the vagaries of power transmission.

When you are spiked, it's time for a completely new box.

Well, at least time to pull everything apart and test pieces individually to see what was damaged. Some things are more sensitive than others, and some things are more isolated from the PSU. But doing so is a lot of work and everything has to be suspect until it thoroughly demonstrates otherwise.

Voice pack for StarField is coming, that sounds good, I really like it for ED.

Source: https://www.youtube.com/watch?v=4CX15v3EDXw

Thanks for the patronising explanation of how an Xbox works

Aww, did it really sound like that? I only paraphrased a quote by the great Arthur C Clarke

As for FSR2...since it's been available for the Xbox since last year... possible, but Bethesda haven't suggested Starfield or their game engine utilises it on Xbox.

Oh, but they did - specifically mentioning XBox

i've marked the timestamp in the video posted by Morbad in post #1121 - 1:21

The video was posted less than 2 weeks ago and Mr. Todd Howard opens his speech with "We're so excited with our new partnership with AMD on Starfield" (minute 1:02)

To me, this new (new!? in a game that's been in the making for so many years?) partnership means they've reached some serious performance issues running Starfield on consoles and are trying to get as much as possible from their already dated architecture - which IMO is very commendable


MS being involved and an AMD GPU being used in the Xbox, you're probably right; that PC follows is just how it worked out. However, maybe they have worked together, just not officially?

AMD used to make CPUs and compete with Intel.

To me, this new (new!? in a game that's been in the making for so many years?) partnership means they've reached some serious performance issues running Starfield on consoles and are trying to get as much as possible from their already dated architecture - which IMO is very commendable

Zen 2 and RDNA2 aren't that dated and I'd expect this basic level of partnership to exist between AMD, via Sony or Microsoft, for any title intended to run on any Playstation or XBox console since AMD started designing the SoCs for them.

This announcement strikes me as pure marketing, something that Microsoft and AMD think will pay future dividends rather than having any actual bearing on Starfield itself. The console versions were never going to run any proprietary NVIDIA tech or need any Intel stuff that AMD already has rough equivalents to, so simply refraining from integrating such stuff in the PC version of the game will prevent possible negative comparisons with competitor solutions, which is a win for AMD. As I mentioned previously, Microsoft could well be getting priority over Sony for a future SoC in exchange for this minor concession. A few NVIDIA fanpersons get their panties in a bunch, but buy the game anyway, and the game sinks or swims on its actual merits...the DLSS issue only comes back to haunt anyone if the game sucks for another reason.

Anyway, with regard to the Windows PC vs. console performance argument, if someone wants to loan me an XBox Series X (with access to appropriate games), I will build an entire Ryzen 4700/4750G system, paired with an RDNA2 GPU, dial both to accurately reflect XBox Series X SoC performance specs, set up Windows on it to the best of my ability, then benchmark it directly against the console at the same resolutions in the same titles, with visually indistinguishable quality settings, and capture comparison videos.

MS being involved and AMD GPU is used in Xbox you're probably right, that PC follow is just how it worked out, however they have maybe worked together just not officially?

Anyone developing games for an XBox in the last ten years has officially worked with AMD. That's why this announcement strikes me as almost entirely a marketing move.

AMD used to make CPUs and compete with Intel.

Now they design CPUs, have TSMC build them, and compete more successfully with Intel.

What about Intel - do they still run chip manufacturing?


Zen 2 and RDNA2 aren't that dated and I'd expect this basic level of partnership to exist between AMD

Well, it's 2019/2020 tech - i guess they got the early silicons - which means no favor usually

We're 3 years later, and 2 hardware gens later, soon a 3rd one may launch

Hence the "dated", even tho in console terms they're still quite fresh.

What about Intel - do they still run chip manufacturing?

They outsource some parts to TSMC, but Intel's foundry business is still a major component of their operations and they're actively trying to catch up. Indeed, Intel is looking to expand their Foundry Service to become a major competitor with the likes of TSMC and Samsung for the manufacture of third party silicon.

AMD was forced to go fabless (by spinning them off as the now fully independent GlobalFoundries) due to the financial hurdles of maintaining their own cutting edge foundries, especially after they nearly bankrupted themselves with the acquisition of ATi and missteps in their CPU division that occurred around the same time.

Ryzen 4700/4750G system

I've read the CPU in the XB Series is more like the 3700X

And don't forget the Series X is also quite TDP limited (it draws under 220W from the power outlet)

Anyone developing games for an XBox in the last ten years has officially worked with AMD. That's why this announcement strikes me as almost entirely a marketing move.

yea, but as they said in the video, they have the AMD tech guys all over in their code - so to me it looks more than "working" with AMD due to the nature of console hardware which is amd based for the last 2 generations of consoles.

Edit: Anyways, I really really hope Starfield will live up to expectations, but I will definitely not preorder and will wait for other people to try it first

Yeah, I remember AMD struggling. Interesting


I've read the CPU in the XB Series is more like the 3700X

And don't forget the Series X is also quite TDP limited (it draws under 220W from the power outlet)

Core topology wise, it's closest, as in almost exactly identical, to Renoir*. Matisse has more L3 per CCX (and it's a big enough difference to significantly skew performance in favor of Matisse in many gaming scenarios). Of course there are differences that cannot be reconciled between the PC and console SoCs. Namely, the PC processors have an independent and lower latency memory interface, which will help them to some extent. I was planning on partially compensating for this by making sure total combined CPU and GPU memory bandwidth didn't exceed that of the XBox Series X's GDDR6 pool and using lax timings on the system memory.

And I can set power limits to whatever I like (a 3.6GHz Renoir with a disabled IGP isn't going to use more than ~30w while gaming anyway).

*The ideal analog would actually be the Ryzen 4800S Desktop Kit (which is a PS5 SoC with a disabled GPU and a fast enough PCI-E interface to actually use a discrete GPU), but I'm not sure that even exists in the wild, and I have no idea where I could buy one for a non-absurd price (I'm not going to spend thousands of dollars to make an XBox knock off).
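
For anyone who wants to sanity-check the bandwidth cap described above, here is a rough Python sketch. The figures are illustrative assumptions, not measurements: 560 GB/s is the commonly published peak for the Series X's faster 10 GB GDDR6 segment, the system RAM is assumed to be dual-channel DDR4-3200, and the discrete GPU's VRAM bandwidth is a hypothetical placeholder. The function names are made up for this example.

```python
# Rough bandwidth-budget check for a PC build meant to approximate an Xbox Series X.
# All figures are assumptions for illustration, not measurements.

def ddr_bandwidth_gbs(mt_per_s: int, channels: int = 2, bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth of a DDR setup in GB/s (64-bit channels assumed)."""
    return mt_per_s * channels * bus_bytes / 1000


def within_budget(cpu_gbs: float, gpu_gbs: float, console_gbs: float) -> bool:
    """True if combined CPU + GPU bandwidth does not exceed the console's pool."""
    return cpu_gbs + gpu_gbs <= console_gbs


XSX_FAST_POOL_GBS = 560.0   # assumed: published peak of the 10 GB GDDR6 segment
GPU_VRAM_GBS = 384.0        # hypothetical discrete RDNA2 card

system_ram_gbs = ddr_bandwidth_gbs(3200)  # assumed dual-channel DDR4-3200
print(f"system RAM: {system_ram_gbs:.1f} GB/s")
print("within console budget:",
      within_budget(system_ram_gbs, GPU_VRAM_GBS, XSX_FAST_POOL_GBS))
```

This only compares theoretical peaks; it says nothing about latency, which is where the lax system memory timings mentioned above would come in.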

Yeah, I remember AMD struggling. Interesting

They paid twice as much as they should have for ATi (who was almost as big as AMD at the time), ran into delays with K10, then almost ran the company into the ground with Bulldozer. A $1.25 billion settlement from Intel, the GF spin off, and console contracts kept them afloat, then the gamble on the Zen architecture saved them. They've recovered well under Lisa Su, but still have a brand recognition problem in the consumer market and harsh competition from NVIDIA in the HPC space.

Dang, missed that video totally


Source: https://youtu.be/9ABnU6Zo0uA?t=81

My only question would be, could you build all that for the retail price of an Xbox series x...namely $500?... Assuming you'd have to buy the parts rather than dig them out of your spares cupboard...and don't forget that the Xbox comes in the box with a $60 controller plus at least a year of gamepass ultimate subs


My only question would be could you build all that for the retail price of an Xbox series x...namely $450?... Assuming you'd have to buy the parts rather than dig them out of your spares cupboard...and don't forget that the Xbox comes in the box with a $65 controller plus at least a year of gamepass ultimate subs

No, but that was never anyone's assertion.

The question is how close an XBox Series X hardware approximation that's running Windows, Windows drivers, and Windows versions of games can come to the gaming performance of an actual XBox Series X...the answer to which will reveal the actual performance overhead of the Windows ecosystem.

How close one can come for the same price is a wholly different question, one that wouldn't involve attempting to match hardware, because there is better and cheaper hardware available today.

Yeah, always a problem. However, I already preordered it, silly me, but it can't be worse than the EDO launch and CP2077, so fingers crossed. From a space game POV, from all the stuff I can dig up, it just seems to me that it will be my dream space game, as FDEV is clearly not wanting to make anything of what they told us years ago, and it looks like Bethesda will. Also, they said it will be a Han Solo Simulator LOL.

Bethesda Describes Starfield As A "Han Solo Simulator"

The studio further took that analogy into the adventurer genre by saying it's like NASA met Indiana Jones.