Had to sort out a really bizarre stability issue with my wife's new workstation. The memory passed hundreds of loops of memtest86, days of TestMem5, and craptons of OCCT without issue, but the system became unusable when I moved my 3080 back into it and installed drivers. I thought I had broken the card at first, but everything ran fine at firmware defaults. It wasn't ReBAR, FCLK, or LCLK DPM either, but the actual MCLK/UCLK (memory/memory controller clock) when used in conjunction with the card.

The IOD on this 3950X has to be a bit weak: it can't drive a memory clock much past 1733MHz (3466MT/s) with 128GiB of RAM installed alongside a PCIe 4.0 x16 GPU. The memory itself was stable, as was the memory controller when the PCIe controller was mostly idle, but heavily load them together and something gives. I've backed off to DDR4-3466, which seems stable, but it will probably be another week or two before I'm confident enough in it to let it do any real work.

I also corrupted the snot out of my Windows install while diagnosing things, so it's got to be wiped when I'm done testing. I'll have to find some good Linux GPU tests so I can screen for this sort of stuff in a more disposable environment. Miners won't work for this, as mining generates almost no PCIe traffic.
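For anyone wondering how the 1733MHz and 3466MT/s figures relate: DDR memory transfers data on both edges of the clock, so the data rate is twice MCLK. The sketch below also estimates theoretical peak bandwidth at that speed, assuming a standard dual-channel AM4 setup with 64-bit channels. It's a back-of-the-envelope figure, not a measurement, and the function names are made up for illustration.

```python
def ddr_transfer_rate_mts(mclk_mhz: float) -> float:
    """DDR transfers on both clock edges, so MT/s = 2 x MCLK in MHz."""
    return 2 * mclk_mhz


def peak_bandwidth_gbs(rate_mts: float, channels: int = 2, bus_width_bits: int = 64) -> float:
    """Theoretical peak in GB/s (10^9 bytes): transfers/s x bytes per transfer x channels.

    Assumes dual-channel, 64-bit-per-channel DDR4 as on AM4; real throughput is lower.
    """
    bytes_per_transfer = bus_width_bits // 8
    return rate_mts * 1e6 * bytes_per_transfer * channels / 1e9


rate = ddr_transfer_rate_mts(1733)
print(rate)  # 3466
print(f"{peak_bandwidth_gbs(rate):.1f} GB/s theoretical peak")
```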

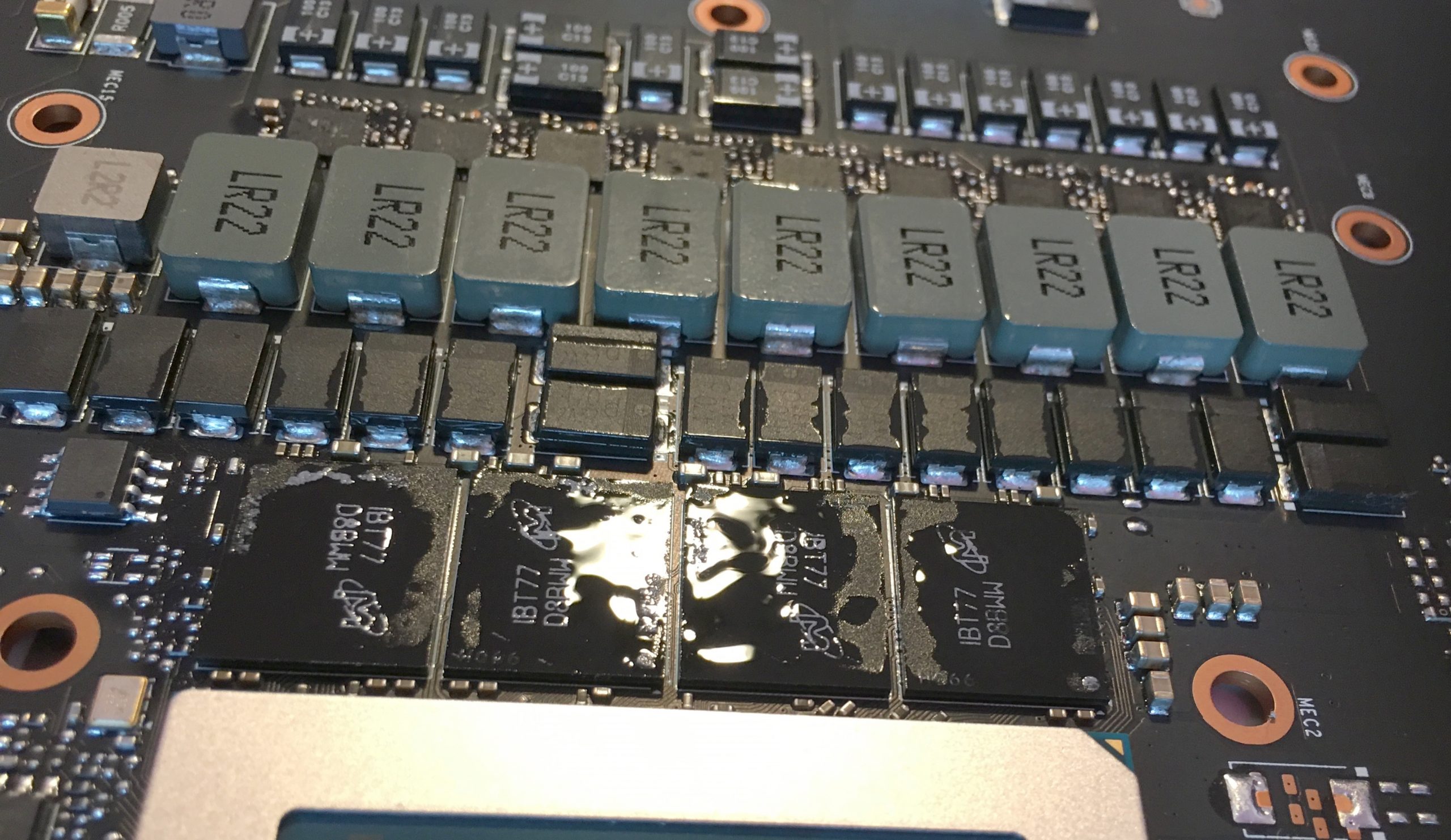

Anyway, this Aorus RTX 3080 Master rev.1 is a pretty average board, but the heatsink on it is exceptional. I've got a trio of Arctic 92mm fans screwed together and attached to an I/O cover adapter to hold them over the bare heatsink of the GPU. I haven't put a shroud on it or anything, so it's just three off-center fans suspended about half a slot from the top of the bare heatsink. I was planning on this being a temporary solution until I got some higher-end fans and attached them directly to the card, but I'm so impressed with the temperatures that I'll probably just leave it. I've applied underfill to the GPU and GDDR6X and completely replaced all TIMs: liquid metal on the GPU itself, higher-end Gelid pads on the GDDR6X, and a mix of mid-range pads or thermal paste on the VRM and between the reverse of the PCB and the backplate. Some of the pads I used were a bit too thick, but I got around this with some brute-force mounting (counter)pressure. GDDR6X temps rival water-cooled cards and the GPU is not far behind, with the GPU fans running at a relatively quiet ~1600rpm and the case fans (Arctic P12s) at a very tolerable 1200-1300rpm.

Memory on this 3080 maxes out at +650 (almost 1100 less than my brother's now water-cooled FE) and the official firmware has a power limit of a measly 350W, but that's still enough for 1950-2010MHz in games, and isn't any barrier to mining, of course. I might flash it and upgrade the cooling further at some point, but I don't think my wife games enough on her workstation for the extra 2-3% in performance I could squeeze out of it to justify the extra work and another ~100W of power consumption. Mining-wise, it does ~96MH/s at about 224W, so a fair bit less efficient than the water-cooled FE, but not bad. GDDR6X tops out at 76C while mining, with the GPU at 50C and a 60C hotspot. Under gaming, more GPU-dependent GPGPU loads, or anything else that pushes up against the 350W power limit, the GPU runs about 10-12C hotter while maintaining that same 10C differential between the edge and hotspot temps.
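To put the mining numbers in perspective, here's a quick calculation using only the figures quoted above: efficiency from ~96MH/s at ~224W, daily energy assuming the card holds 224W around the clock (an assumption, since real draw fluctuates), and the edge/hotspot temps you'd expect under heavier loads if the 10-12C rise and 10C gap hold. The helper names are hypothetical.

```python
# Figures quoted in the post; helper names are hypothetical.
MINING_HASHRATE_MHS = 96.0
MINING_POWER_W = 224.0
MINING_EDGE_C = 50
HOTSPOT_DELTA_C = 10  # edge-to-hotspot gap, stays the same under heavier loads


def efficiency_mhs_per_watt(hashrate_mhs: float, power_w: float) -> float:
    """Hashrate delivered per watt of board power."""
    return hashrate_mhs / power_w


def daily_energy_kwh(power_w: float, hours: float = 24.0) -> float:
    """Energy drawn over `hours` at a constant power, in kWh (assumes steady draw)."""
    return power_w * hours / 1000.0


def heavy_load_temps(extra_c: int) -> tuple[int, int]:
    """Estimated (edge, hotspot) temps when a load adds `extra_c` degrees over mining."""
    edge = MINING_EDGE_C + extra_c
    return edge, edge + HOTSPOT_DELTA_C


print(f"{efficiency_mhs_per_watt(MINING_HASHRATE_MHS, MINING_POWER_W):.3f} MH/s per W")  # 0.429 MH/s per W
print(f"{daily_energy_kwh(MINING_POWER_W):.2f} kWh/day")  # 5.38 kWh/day
for extra in (10, 12):
    print(heavy_load_temps(extra))  # (60, 70) then (62, 72)
```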