Morbad, it has been a long time since the glory days of being able to unlock a GPU to a high-end model (I remember doing it with my GeForce 6600 and my ATI 9800, and it really was a free upgrade).

I will eat my hat if NV made this possible even IF the cards are essentially the same.

The most recent GPU I've fully unlocked to a higher model was a Radeon R9 290, which took a 290X BIOS and became a full-fledged 290X. There have been a few cases like this with more recent GPUs, as board partners still occasionally flash higher-end boards as lower-end cards to make up for supply deficiencies in the lower-end models. It's certainly uncommon though.

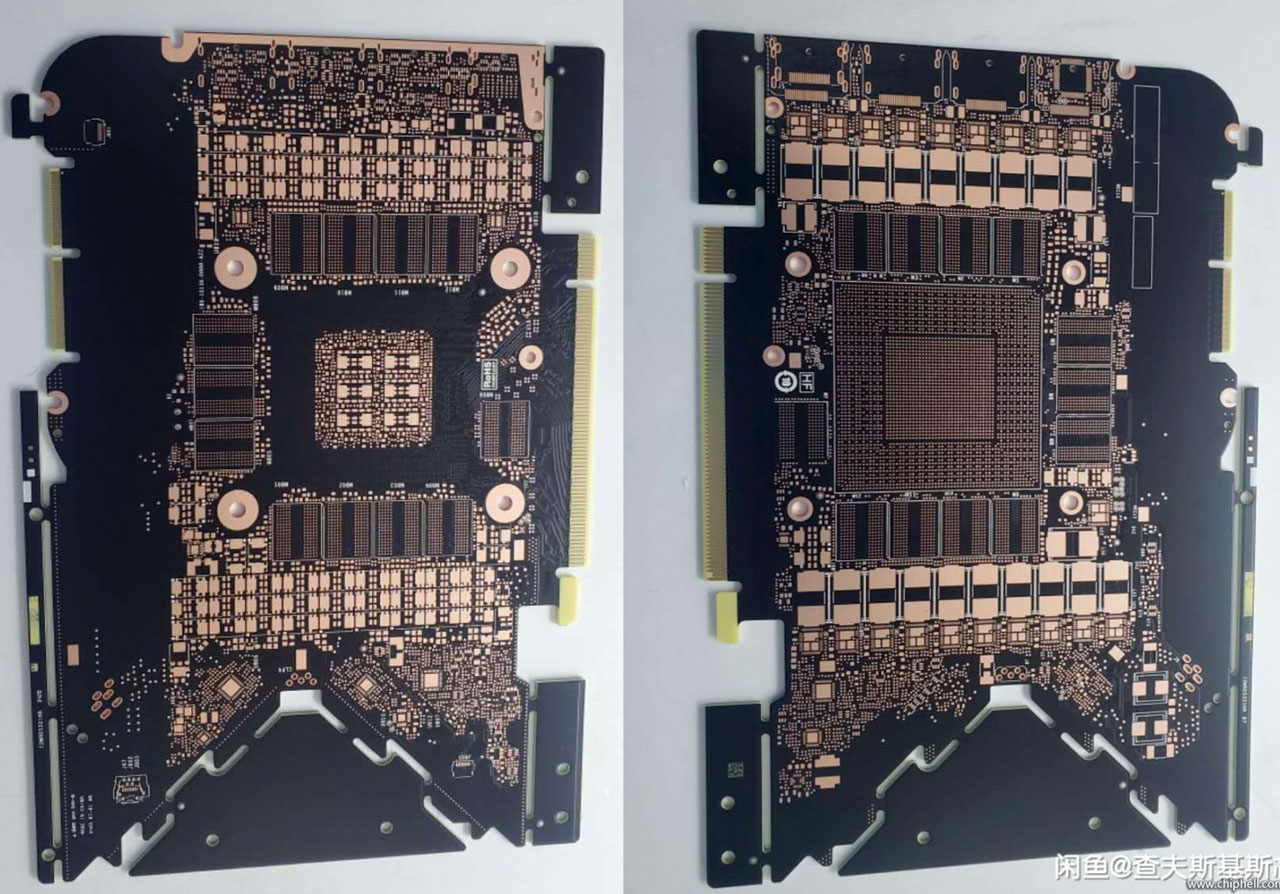

Anyway, I fully expect the disabled portions of the GPU to be fused off and impossible to reenable with the RTX 3000 series, and I expect different physical memory ICs on the 3080 and 3090 boards as well as two unpopulated memory channels on the 3080.

If the memory tables are compatible, flashing 3090 firmware onto a 3080 could still be the easiest way to increase the maximum power limit of a 3080 without having to resort to hardware mods.

Forgetting the cost (which is a hard thing to forget), I just don't like the idea of such a power-hungry GPU. It's OK in winter, since I need to heat my room anyway, but in summer it really is horrible sitting next to a fan heater, and even the best-case scenario just means wasting a load of energy.

The idea of needing an 850w PSU if I want a top of the range gaming rig does not sit well with me.

The board power requirements are not all that different from prior generations, and should be just as tunable. Idle power consumption is also negligible and performance per watt has certainly increased. You don't need an 850w PSU for a high-end system with a 3090, and even if you did, it's not going to draw anywhere near peak loads the overwhelming majority of the time.

I wish NV had gone with TSMC and not Samsung and I think you could have probably chopped 50-100w off those requirements.

We don't know how different these processes actually are in efficiency, as no GPUs made on Samsung's 8nm process have yet been reviewed.

I also think 20 or 24 GB is overkill and adding to the price but at the same time cutting my video card ram in an "upgrade" does not sit well with me either.

For gaming, an RTX 3080 with 12GB and a 3090 with 16GB would be a better balance, I think.

Not practical configurations. The GPU has a 384-bit memory interface which mandates 12/24GiB in fully enabled configurations. ROP clusters are almost certainly still tied to the memory controllers as well, making it impractical to cut them down without disabling memory channels.

The 3090 has 24GiB to make sure it has a bigger number on it than the competitor's part. People will buy 24GiB cards at the high-end even though it's a meaningless figure and by the time any game benefits appreciably from more than twelve, the 3090 will be quite long in the tooth.

The 3080 has 10GiB because it's only got ten of twelve memory channels enabled, and putting 20GiB on the Founders Edition would be an extra cost on the BoM and would eat into AIBs' options for making distinctive non-reference products.

NVIDIA is also leaving a gap between the 3080 and 3090 to account for the possibility of near-future competition from AMD or even Intel. The 3090 or Titan should be enough to secure a win at the top-end, but the high-end RDNA2 part could conceivably best the 3080. If that is the case, I'm sure we will soon see a 3080 Ti or 3085 with a 352-bit memory interface and 11GiB of memory to fill the gap.
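To spell out the arithmetic behind those capacities, here is a rough sketch. It assumes each memory channel is 32 bits wide with one 1GiB (8Gb) memory IC on it, and that the only way to go higher is to double up (two ICs per channel or double-density ICs). The function name and defaults are just mine for illustration.

```python
BITS_PER_CHANNEL = 32  # assumption: one 32-bit channel per memory IC


def memory_options(bus_width_bits, gib_per_chip=1):
    """Return (channels, base GiB, doubled GiB) for a given bus width.

    Assumes 1GiB ICs by default; 'doubled' covers either two ICs per
    channel or ICs of twice the density.
    """
    if bus_width_bits % BITS_PER_CHANNEL != 0:
        raise ValueError("bus width must be a multiple of 32 bits")
    channels = bus_width_bits // BITS_PER_CHANNEL
    base_gib = channels * gib_per_chip
    return channels, base_gib, base_gib * 2


if __name__ == "__main__":
    # Bus widths taken from the discussion above.
    for label, width in [("384-bit", 384), ("320-bit", 320), ("352-bit", 352)]:
        channels, base, doubled = memory_options(width)
        print(f"{label}: {channels} channels -> {base}GiB or {doubled}GiB")
```

Under those assumptions, 12GiB on a 3080 would need all twelve channels enabled, and 16GiB on a 3090 doesn't divide evenly across twelve channels at all, which is why those configurations aren't practical.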

Regardless, 10GiB is fine. I have two 1080 Tis (in different computers) with 11GiB and have zero hangups about upgrading to a 10GiB 3080 for my primary gaming system. Both the new Microsoft and Sony consoles have 16GiB of shared memory, and the odds of more than 10GiB being available for graphics at any given time, or of many games being able to significantly leverage it in the next few years, are rather small.