
Occlusion Culling and the hardware survey: question to the tech community

- Thread starter LKx


That is what occlusion culling should be doing. Looking at your viewport, check what's visible, and only draw that.

With stuff like that I'd have thought (again, no expert) it would be quicker to just not draw small things that are X distance away than do lots of ray-casting to determine which shot glass is visible. So LODs rather than occlusion culling. It used to be that rendering was always the most expensive thing (in my ancient days coding this sort of thing) but I'm not sure that's the case any more.
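To make the "just don't draw small things that are X distance away" idea concrete, here is a minimal sketch in Python. It is only an illustration of distance-based culling, not of occlusion culling and not of how Odyssey's engine works; the function name, the scene data and the `max_distance_per_radius` factor are all made up for the example.

```python
import math

def visible_by_distance(objects, camera, max_distance_per_radius=200.0):
    """Return the names of objects big enough, for their distance, to be worth drawing.

    objects: iterable of (name, (x, y, z), radius) tuples (hypothetical scene format).
    camera: (x, y, z) position of the viewer.
    """
    kept = []
    for name, position, radius in objects:
        distance = math.dist(camera, position)
        # Skip the object once it is farther away than radius * factor.
        # No ray-casting is involved, which is why this test is cheap.
        if distance <= radius * max_distance_per_radius:
            kept.append(name)
    return kept

# Hypothetical scene: two shot glasses and a bar counter.
scene = [
    ("shot_glass", (0.0, 0.0, 50.0), 0.05),
    ("bar_counter", (0.0, 0.0, 50.0), 2.0),
    ("shot_glass_near", (0.0, 0.0, 5.0), 0.05),
]
print(visible_by_distance(scene, (0.0, 0.0, 0.0)))
```

With these made-up numbers the far shot glass is dropped while the counter next to it and the nearby glass are kept. Note that this never asks whether something is hidden behind a wall, which is the part occlusion culling is meant to handle.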

Binary space partitioning - Wikipedia

Hi, I don't know anything about graphics rendering, so I might be totally wrong about this, but I'm a bit confused about their hardware survey when a lot of people are pointing out the alleged lack of optimizations and rendering issues with Odyssey:

- no occlusion culling in the rendering

- excessively dark areas due to something happening with colors in the rendering process

So I'm wondering what the point of a hardware survey is in those conditions. My question is, since as I said I'm clueless about it: am I wrong, and might the lack of occlusion culling not be as big a problem as it sounds?

My guess is they suspect some issues are hardware-specific: AMD GPUs vs Nvidia GPUs. Perhaps FDev's occlusion culling works better on Nvidia than on AMD, or specific models with less memory have issues, mobile vs integrated, etc.

One thing I can tell you is that it is much easier to code for a unified platform (for example PlayStation and Xbox), where you know the hardware is the same, than for the x86 PC world, where the combinations of hardware are almost limitless.

This was true in the 90's when I worked for Westwood Studios and it is just as true today.

They will fix these issues my peeps(ya I'm old), it's just going to take time.


The situation is quite simple actually. They released Odyssey in alpha / beta version. Why they did it will remain a mystery. Now I hope it will be a bad memory in 6 months.

But it is indeed weird to ask for player specs when the basic optimizations are not even done. It's too early to ask that...

Frontier is clever, I'll give them that. Instead of hiring more QA to troubleshoot problems in EDO, they get the players to pay Frontier to do their jobs for them.

I'd be happy to help troubleshoot EDO for free (I have some experience in this), but there's no way I'm paying $40 for this "privilege". There really should have been a free public beta for everyone who owns EDH to deal with these issues before unleashing the final game onto the public. Frontier deserves all the negative press it's getting.

Sorry, I'm feeling a little extra salty and disappointed today.. It's time to break out my old meme from the last time Frontier disappointed me (you know the song):

Well, yeah, we all knew they should have done a proper beta. I'm pretty sure they knew too (they began to do public betas at some point for a reason); they just couldn't, because they had to throw this out of the window in time for their financial year... and I'm pretty sure they were already bracing for the storm they knew would come with that.

In addition they couldn't do a proper beta to not spoil the super secret things they had for release and that now we are all beginning to discover...

Nah. QA will have found and reported the bugs. Frontier simply decided it was OK to ship with those bugs.

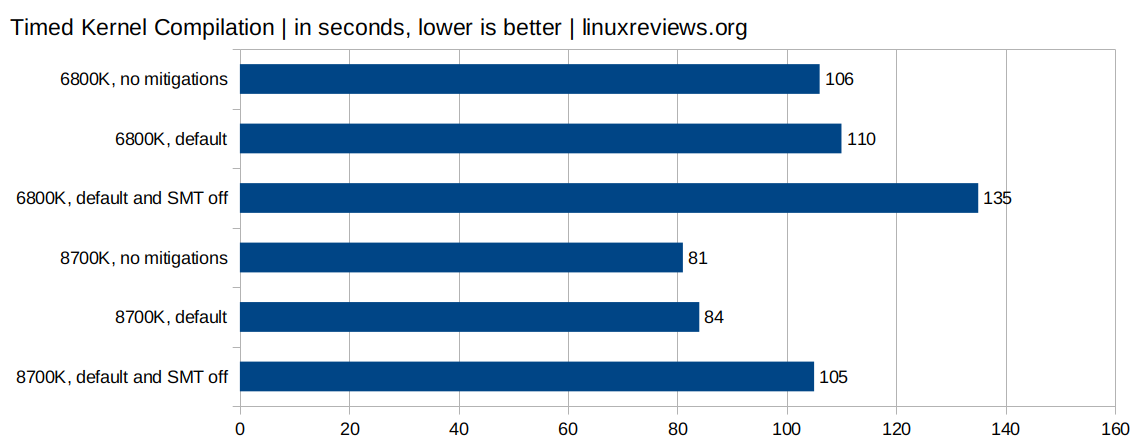

"I have disabled Spectre/Meltdown protection explicitly."

Could you please elaborate on this?

What are the settings?

Thanks!

Playing on Linux with steam play.

HOWTO make Linux run blazing fast (again) on Intel CPUs

Basically it is how the engine prioritizes rendering objects. There would only be a pattern if a specific generation of card(s) or version of driver(s) were consistently causing the issue. Knowing people that have a good variety of hardware and the same issues, I can say it has little to do with any particular combination of hardware. I would say it is a hollow gesture. They'll fix it, but it is just another way businesses that have clearly done wrong can avoid taking any responsibility for it. It's pretty clear that money was the motivation and that trumped making a good product. It's a shame cuz I have no reason to believe they will come through if / when there is another expansion. And I certainly have no reason to buy any games from FD after this.

What is hilarious is all the devs and cm's who defend the company and lie or skew the truth to their customers for the business suits that pushed this are actually enabling this type of behavior. If it ever got out of hand they would also be killing their own jobs. Irony...

There is nothing any of us have done to cause this.

So far everything suggests that occlusion culling is not the issue, even if there is none (and we know that there is none). Current and past gen performance should be enough to deal with rendering. The issue must be somewhere else. Asking for tech specs can narrow down the area in which they should look.

So I installed odyssey on my new laptop to see if that would work any better - it doesn't.

MSI Prestige 14

Intel i7-1185G7 @ 3GHz

16GB Ram

1TB NVME storage

Geforce GTX1650 Ti with 4GB Ram

Windows 10 Pro, 64Bit

...and with that hardware Odyssey wants to run on "high" at 1280x720, but even at that it's pretty much impossible to even get through that combat tutorial:

I'm inside the building looking at that corpse on the ground, the one where the sparks fly out of the wall, and the NVIDIA Performance Overlay shows me 13 to 19 FPS, and as soon as I'm actually in the firefight the FPS drops to single digits.

This has been a clean install; until just now there has never been any version of Elite Dangerous on that laptop.

In comparison:

- the good old single player combat demo / training also suggests high, and runs at stable 40FPS with the same settings.

- horizons also suggests "high" at 1366x768, and I have 30 to 40 FPS in a 'conda autolaunching from inside a coriolis starport.

Let it be noted: the NVIDIA stats thing shows less than 40% CPU and GPU use for horizons, so I'm sure I could crank the settings up some more and still have fun with the results.

Great example! Not only is this brokenness new (I don't recall seeing them take this long in alpha), it's as obvious and easy to replicate as could be. This isn't textures loading slowly; this is the game getting in the way of the operation to load the textures.

Also I have no idea what uncompressed monsters those textures have to be, that you see them load on GDDR5. I watched an advert load for almost a minute after loading into a concourse from main menu.

Current and past gen performance should be enough to deal with rendering

Don’t forget the VR community ... For us optimization is mandatory.

I hope at least that EDH 2.0 will perform as well as EDH 1.0 this fall..

That makes no sense. I have a far older laptop with an i7-6700HQ, 16GB RAM and a GTX 980M, and playing at 1080p with supersampling 0.85x and settings on high (except shadows and ambient occlusion on low), I get 20-27 fps in on-foot combat zones.

Guess they do need to figure out what's going on on different hardware

That's probably the reason they didn't want to support VR on launch (besides on-foot VR, which is another story).

If Windows users have it enabled on W10 and it's not present on W7/8, then that could be a reason. The difference there is huge, especially for gaming, as it invalidates the inventions of the last 20 years.

The performance impact of Spectre and Meltdown mitigations isn't anywhere near that large. Even with all of them enabled on platforms that have the most impactful mitigations, they don't disable speculative execution, nor do they turn off SMT on Windows.

Might be worth a shot if one thinks they are CPU limited, but it's going to be noise level variations for most people.

Some versions of the Linux kernel were hit pretty hard before better patches came out (I lost a huge chunk of IGP performance on my old Lenovo laptop for a while), but if you have a recent kernel and SMT is enabled, disabling the rest probably isn't going to help.
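For anyone on Linux who wants to see which mitigations are actually active before turning anything off, recent kernels report them under `/sys/devices/system/cpu/vulnerabilities`. Below is a small Python sketch that just reads those files; the function name is made up for the example, and the set of files you get depends on your kernel and CPU.

```python
from pathlib import Path

def read_mitigation_status(base="/sys/devices/system/cpu/vulnerabilities"):
    """Return {vulnerability_name: status_text} as reported by the kernel.

    Returns an empty dict if the directory does not exist
    (older kernel, or not running on Linux).
    """
    root = Path(base)
    if not root.is_dir():
        return {}
    status = {}
    for entry in sorted(root.iterdir()):
        if entry.is_file():
            try:
                status[entry.name] = entry.read_text().strip()
            except OSError:
                # Skip entries that cannot be read instead of failing.
                continue
    return status

for name, state in read_mitigation_status().items():
    print(f"{name}: {state}")
```

This only reports the current state and changes nothing. Comparing it before and after a change in kernel boot parameters is one way to confirm that a setting actually took effect.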

I did notice one thing:

When I am flying in space in Horizons my GPU fans are quiet, there is not much GPU load.

When I touch down on a planet their RPM increases a lot.

But when I leave the planet and fly to space again the GPU load decreases again, the fans go quiet.

(and that's in 1440p with SS x1,25)

In Odyssey my GPU fans are only quiet when I start in space. When I land on a planet or at a station and the GPU load increases it never goes down even if I go back to space - or the main menu for that matter.

It seems to me that Odyssey is indeed rendering unnecessary things even in space.

Do you happen to have a frame rate cap or vsync enabled?

No matter where you are, Odyssey is going to need to spend more work to render a frame, so if you happen to be reaching the same frame rate cap in both, Odyssey will make your GPU work harder for it.
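A rough way to see why a frame rate cap matters for this comparison: under a cap, the GPU only has to be busy for the part of each frame interval it spends rendering. The sketch below is back-of-the-envelope arithmetic with hypothetical render times (4 ms and 12 ms are not measurements from either game), and the function name is made up.

```python
def gpu_busy_fraction(render_ms, fps_cap):
    """Approximate fraction of each frame interval the GPU spends rendering.

    render_ms: time the GPU needs to render one frame, in milliseconds.
    fps_cap: frame rate limit (from vsync or an explicit cap).
    """
    frame_budget_ms = 1000.0 / fps_cap
    # If rendering takes longer than the budget, the GPU is simply always busy.
    return min(1.0, render_ms / frame_budget_ms)

# Same 60 fps cap, two hypothetical per-frame costs.
print(gpu_busy_fraction(4.0, 60))   # cheaper frame
print(gpu_busy_fraction(12.0, 60))  # more expensive frame
```

Both cases would show the same 60 fps on screen, but the more expensive frame keeps the GPU busy about three times as long per interval, which would be enough to change fan behaviour without any frame rate difference.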

While the culling is incomplete, it's simply not enough to explain the degree of slowdowns.

It's not, and I'm pretty sure Horizons also has iffy culling as well.

How much memory on that 980M?

I recall there being an 8GiB version, and that one will definitely perform better than a 4GiB 1650 Ti in the current state of the game.

Anyway Lance Corrimal's laptop is performing well below where I'd expect it to be, even in Horizons.

4GB (and I'll add that settings on ultra, besides shadows, ambient occlusion and supersampling, don't change things much).

PS: And in Horizons I get 75 fps (vsync) most of the time, and no lower than 60 in the worst cases (full 1080p ultra).


Your frame rate figures have me thinking you have something set to a low power mode or are using the IGP and not the 1650 Ti. I haven't seen that low simultaneous CPU and GPU utilization for Horizons unless there was a broader issue somewhere.

EDO might have major issues with 4GiB parts, but Horizons shouldn't, and the space combat demo definitely shouldn't.

Well then, it's definitely something wrong with Lance's configuration.

I've just realized one thing: my results are in solo mode. I remember from the alpha that playing in open hit the framerate quite a lot, so there's also that to add to the mix, and I often omit it.