One solution would be for Frontier to implement Multi-View Rendering.

Benefits

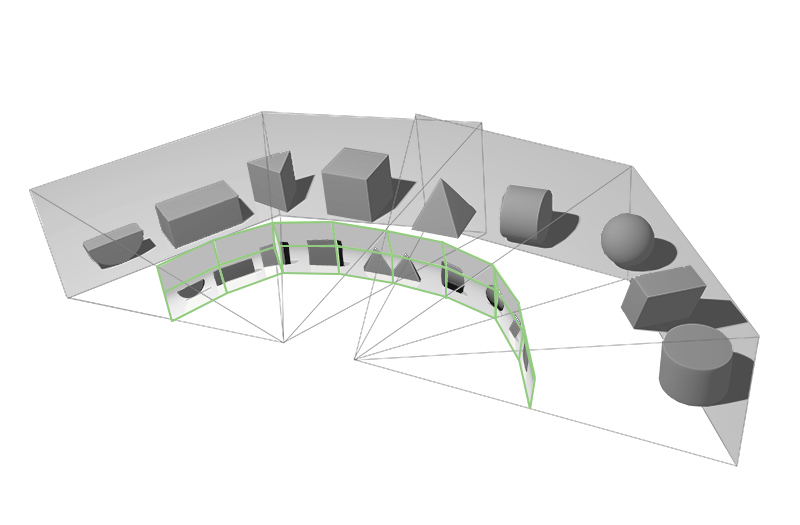

Multi View Rendering expands on the Single Pass Stereo features, increasing the number of projection centers or views for a single rendering pass from two to four. All four of the views available in a single pass are now position-independent and can shift along any axis in the projective space.

This unique rendering capability enables new display configurations for Virtual Reality. By rendering four projection centers, Multi View Rendering can power canted HMDs (non-coplanar displays) enabling extremely wide fields of view.

Details

Single Pass Stereo was introduced with Pascal and uses the Simultaneous Multi-Projection (SMP) architecture of Pascal to draw geometry only once, then simultaneously project both right-eye and left-eye views of the geometry. This allowed developers to almost double the geometric complexity of VR applications, increasing the richness and detail of their virtual worlds.
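To make the "draw once, project twice" idea concrete, here is a rough CPU-side sketch. It is not taken from the VRWorks SDK: the function names, the pinhole projection and the 64 mm eye separation are all my own illustrative assumptions, and the real feature runs in GPU hardware.

```python
# Conceptual illustration only; names and values are hypothetical, not a VRWorks API.
IPD = 0.064  # assumed eye separation in metres (illustrative value)

def to_head_space(vertex, head_pos):
    """Shared geometry work: done once per vertex, not once per eye."""
    return tuple(v - h for v, h in zip(vertex, head_pos))

def project(point, eye_offset_x, focal=1.0):
    """Simple pinhole projection for an eye shifted along X, looking down -Z."""
    x, y, z = point
    x -= eye_offset_x  # move the point into this eye's frame
    return (focal * x / -z, focal * y / -z)

def single_pass_stereo(vertices, head_pos):
    """Process each vertex once, then emit a left-eye and right-eye projection."""
    out = []
    for v in vertices:
        p = to_head_space(v, head_pos)   # the single geometry pass
        left = project(p, -IPD / 2)      # left eye sits at -IPD/2 on X
        right = project(p, +IPD / 2)     # right eye sits at +IPD/2 on X
        out.append((left, right))
    return out
```

The point of the sketch is that `to_head_space` stands in for the expensive per-vertex work, which is shared, while only the cheap final projection differs per eye.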

Turing’s Multi-View Rendering (MVR) capability is an expansion of the Single Pass Stereo (SPS) functionality introduced in the Pascal architecture, which processes a single geometry stream across two different projection centers for more efficient rendering of stereo displays.

MVR expands the number of viewpoint projections from two to four, helping to accelerate VR headsets that use more displays. SPS also only allowed for eyes to be horizontally offset from each other with the same direction of projection. With Turing MVR, all four of the views available in a single pass are now position-independent and can shift along any axis in the projective space. This enables VR headsets that use canted displays and wider fields of view. While the general assumption in stereo rendering is that eyes are just offset from each other in the X dimension, in practice, human asymmetries may need adjustments in multiple dimensions to more precisely fit individual faces. Turing helps to accelerate applications built for headsets with these customizations.

(Source: NVIDIA VRWorks SDK, https://developer.nvidia.com/vrworks)
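As a rough picture of what "four position-independent views" means in practice, the sketch below gives each view its own offset and its own cant angle, then transforms one shared point into every view's frame. This is my own illustration, not NVIDIA's API: the view names, offsets and 30 degree cant are made-up values, and I assume a right-handed, Y-up space with views looking down -Z.

```python
import math

# Hypothetical layout for a canted four-panel headset; every value is illustrative.
VIEWS = [
    {"name": "left_outer",  "offset": (-0.032, 0.000, 0.0), "yaw_deg":  30.0},
    {"name": "left_inner",  "offset": (-0.032, 0.000, 0.0), "yaw_deg":   0.0},
    {"name": "right_inner", "offset": ( 0.032, 0.001, 0.0), "yaw_deg":   0.0},
    {"name": "right_outer", "offset": ( 0.032, 0.001, 0.0), "yaw_deg": -30.0},
]

def to_view_space(point, view):
    """Translate by the view's offset, then undo the view's yaw about the Y axis."""
    x, y, z = (p - o for p, o in zip(point, view["offset"]))
    a = math.radians(-view["yaw_deg"])  # inverse of the view's rotation
    c, s = math.cos(a), math.sin(a)
    return (c * x + s * z, y, -s * x + c * z)

def all_views(point):
    """One shared input point, four independent view-space results."""
    return {v["name"]: to_view_space(point, v) for v in VIEWS}
```

Note the small Y offset on the right-hand views: that stands in for the per-face adjustments mentioned above, which a horizontal-offset-only scheme like SPS could not express.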

VRWorks also includes Variable Rate Shading, Multi-Res Shading, Lens Matched Shading and Single Pass Stereo, all of which were designed specifically for VR render pipeline optimisation.

Frontier was a pioneer in VR implementation, with Elite supporting Oculus and OpenVR, but sadly they no longer innovate by adopting cutting-edge rendering techniques for VR.

They are clearly capable of doing so, but no doubt the engineering effort is considerable and the associated development cost has no tangible fiscal return. Consequently, the work remains deep in the technical debt backlog.