I, like many other people, was appalled by the hideous luminance
distribution and color saturation issues exhibited by the Odyssey
renderer. Since I'm a computer graphics developer myself and do consulting
work for critical graphics systems (think medical imaging, CT, MRI, and
such), I took a dive into the sequence of steps that go into rendering
a single frame of Odyssey.

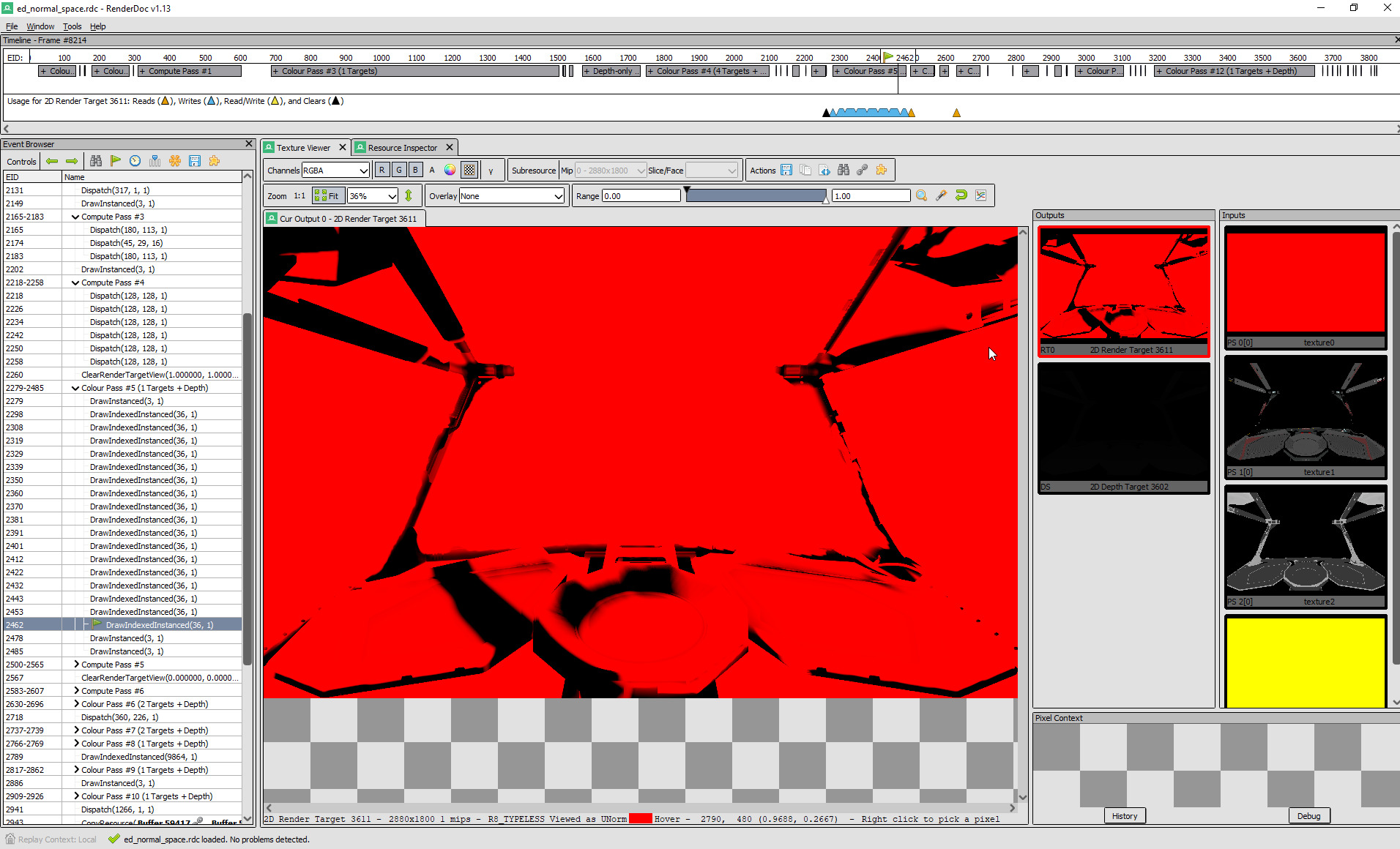

For those curious about this: This is not really data mining in the
strictest sense. What you do is take a graphics debugger like RenderDoc
or Nvidia Nsight Graphics, which places itself between the program under
test and the graphics API and/or driver and meticulously records every\*
command that goes into drawing a frame. Then you can go through the list
of drawing commands step by step and see how the picture is formed.

The TL;DR is: it's not as simple an issue as a merely wrongly set gamma
value or a wrong tonemapping curve. What's actually going on is that
rendering a single frame of Odyssey consists of several steps, between
which intermediary images are transferred, and unfortunately some of these
steps are not consistent about the color space of the intermediaries that
are passed between them.
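To make this kind of mismatch concrete, here is a minimal sketch in Python. It is not taken from Odyssey: the two pass names, the 50% dimming operation, and the plain 2.2 power law standing in for the real transfer function are all assumptions chosen for illustration.

```python
# Illustrative only: a plain 2.2 power law stands in for the display
# transfer function, and both "passes" are hypothetical.
GAMMA = 2.2


def encode(linear):
    """Linear light -> display-encoded value."""
    return linear ** (1.0 / GAMMA)


def decode(encoded):
    """Display-encoded value -> linear light."""
    return encoded ** GAMMA


def pass_a(linear_value):
    """Hypothetical pass A: stores its result display-encoded."""
    return encode(linear_value)


def pass_b_consistent(intermediate):
    """Pass B knows the input is encoded: decode, then dim by 50% in linear."""
    return decode(intermediate) * 0.5


def pass_b_mismatched(intermediate):
    """Pass B wrongly assumes the input is already linear."""
    return intermediate * 0.5


intermediate = pass_a(0.18)  # mid grey in linear light
good = encode(pass_b_consistent(intermediate))
bad = encode(pass_b_mismatched(intermediate))
print(f"consistent: {good:.3f}  mismatched: {bad:.3f}")
```

The mismatched path ends up brighter than intended, even though each step looks reasonable when viewed in isolation.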

I'm now going to break this down in detail, so that you, my fellow
players, are able to understand what's going on.

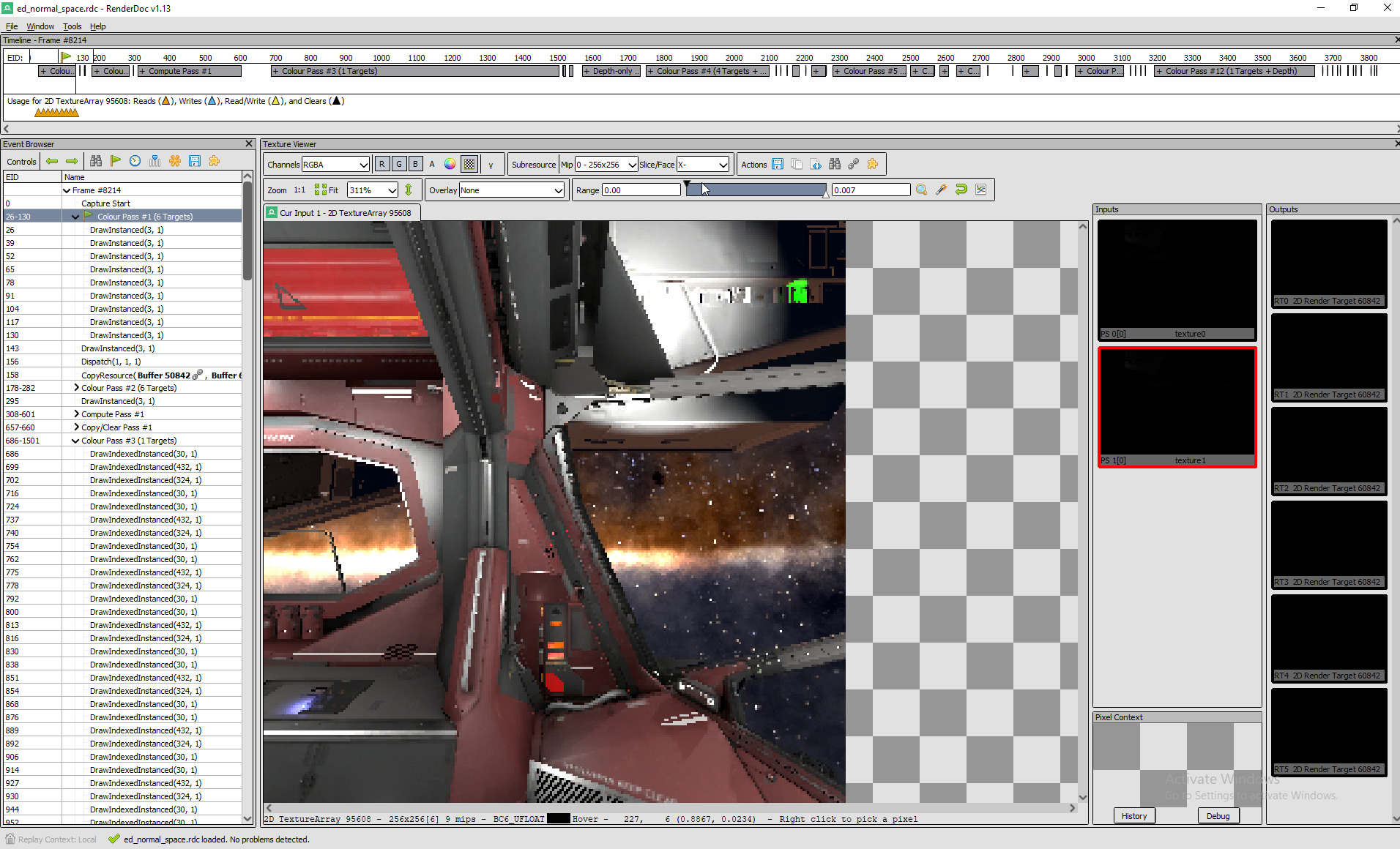

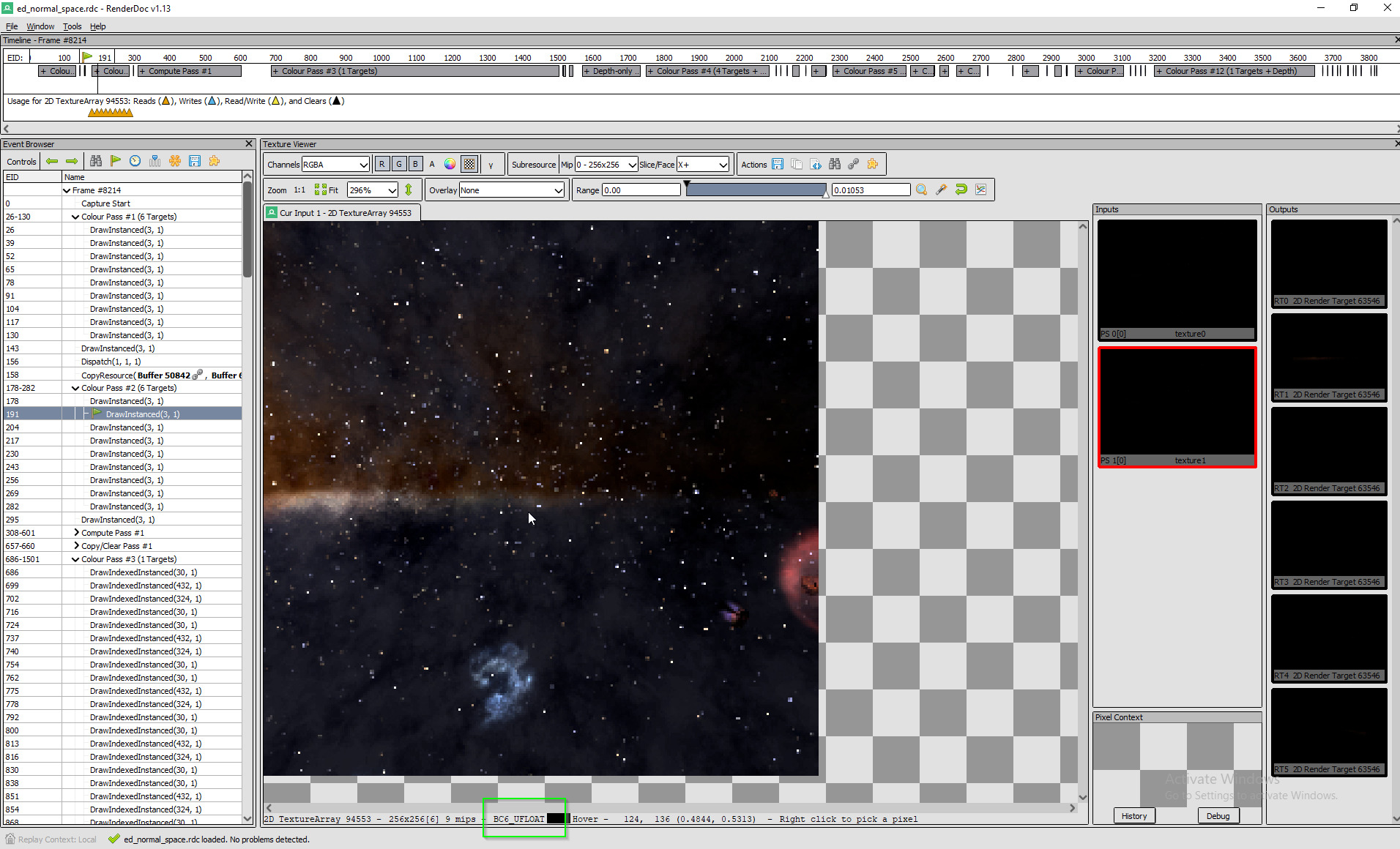

Step 1: An environment map in the form of a cubemap is transferred from
a cubemap texture to a cubemap renderbuffer target. This is essentially
just a copy; it would be possible to make color adjustments here, but that
doesn't happen. This copy step is done mostly to convert the color format
from a limited range, compressed format (BC6) to a packed HDR floating
point format (R11G11B10 float), which will be used for the rest of the
rendering process until the final tonemapped downsampling into the
swapchain output (i.e. the image sent to the monitor). There's something
peculiar about this cubemap though, and I encourage everyone reading this
to take a close look at these images and keep them in mind throughout the
rest of the explanation.

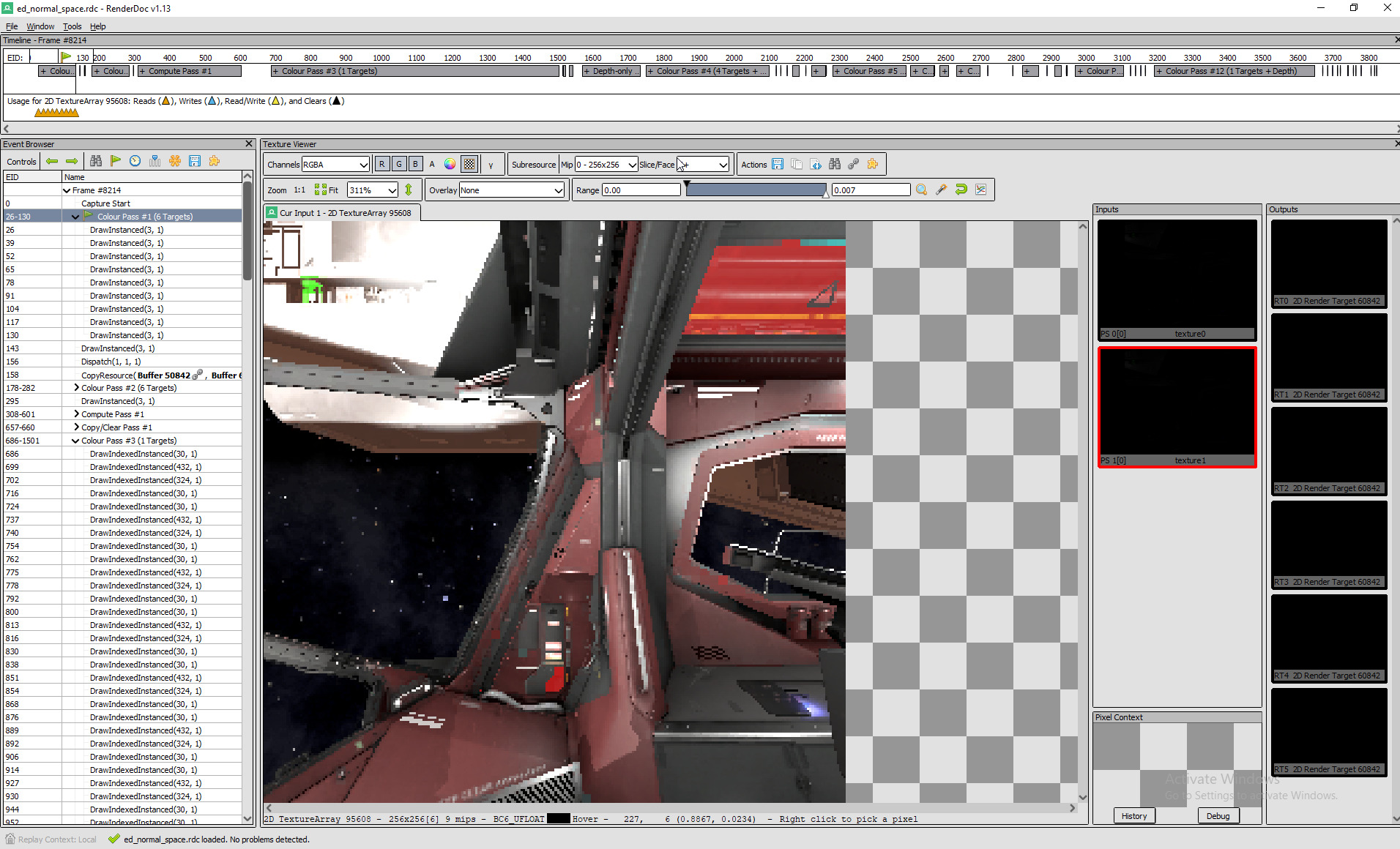

Step 2: Skybox rotation. In this step a low resolution version of the
skybox is drawn rotated into a cubemap renderbuffer, so that the result is
aligned with the ship in space.
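As a rough illustration of what "drawing the skybox rotated" means, the sketch below rotates a lookup direction and picks the cubemap face it lands on. How Odyssey actually does this is not visible from the capture description; the yaw-only rotation, the function names, and the face naming are assumptions.

```python
import math


def rotate_yaw(direction, angle):
    """Rotate a 3D direction around the Y axis by `angle` radians."""
    x, y, z = direction
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)


def cube_face(direction):
    """Return the cubemap face selected by the dominant axis of `direction`."""
    x, y, z = direction
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:
        return "+X" if x > 0 else "-X"
    if ay >= az:
        return "+Y" if y > 0 else "-Y"
    return "+Z" if z > 0 else "-Z"


# A direction that looked at the +Z face before the ship turned by 90 degrees
# now samples a different face of the source cubemap.
forward = (0.0, 0.0, 1.0)
print(cube_face(forward), "->", cube_face(rotate_yaw(forward, math.pi / 2)))
```

A full implementation would use the ship's complete orientation (a 3x3 matrix or quaternion) rather than a single yaw angle.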

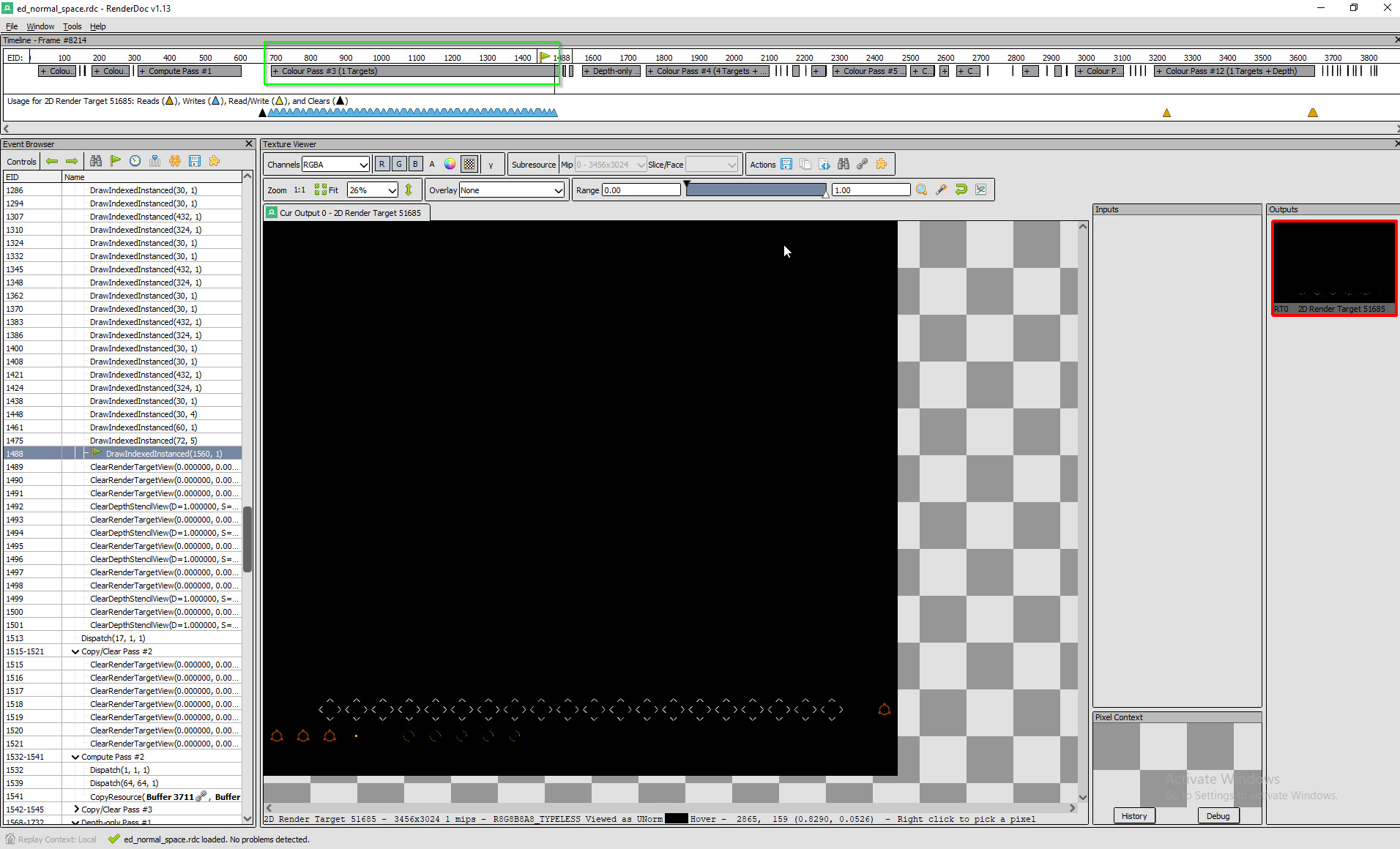

Step 3: IMHO this is the most questionable pass captured in this frame.
What's happening here is that a couple of the target reticle variants are
drawn, several times, and in a very inefficient manner at that. This pass
generates over 800 events, and I've seen it in all of the frame dumps of
Odyssey I've looked at so far. It should be noted, though, that the event
numbers in the timeline are not strictly proportional to the actual time
spent on this pass. But still, this is what I've come to call the "what"
pass.

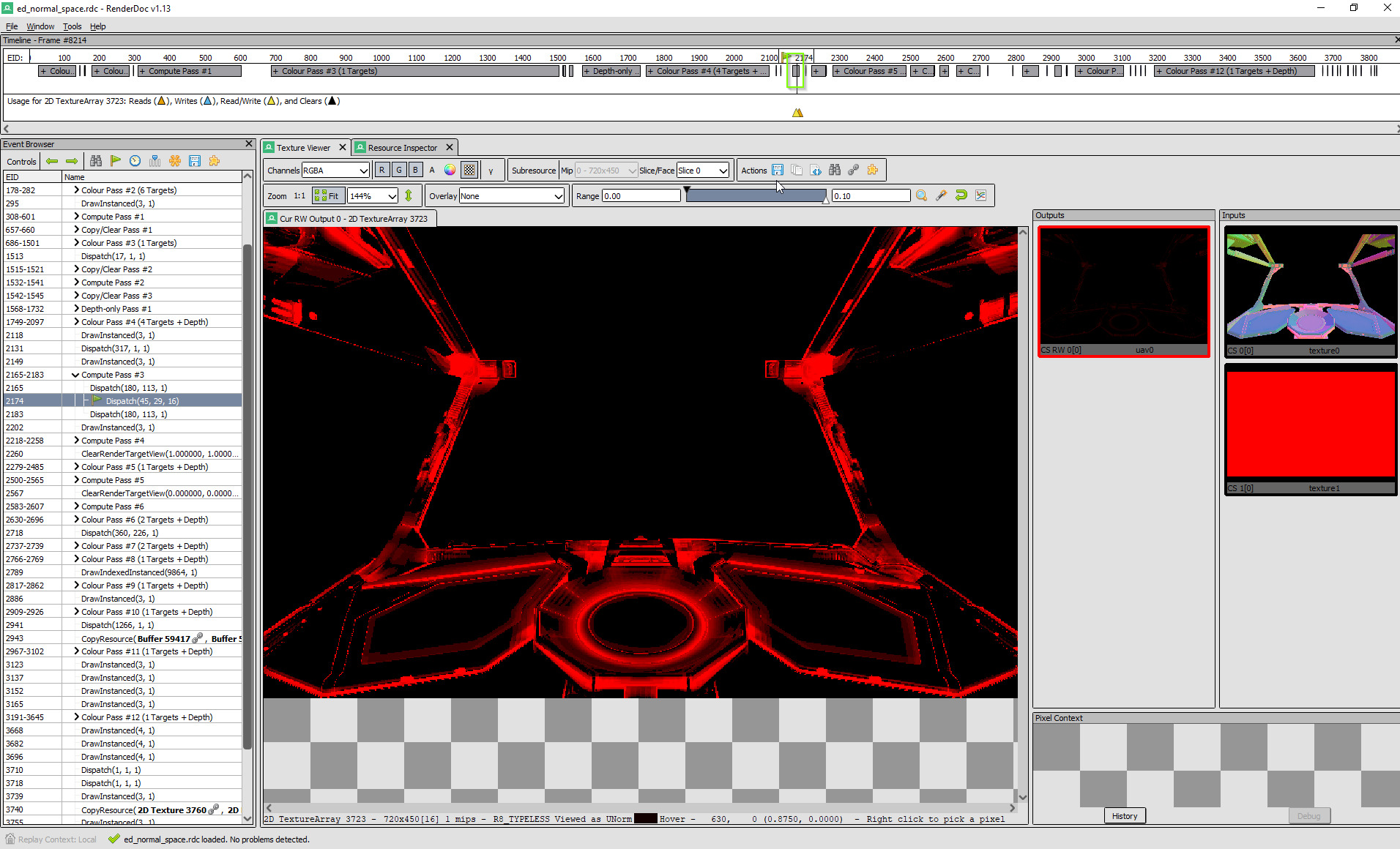

Steps 4 & 5: Next come two "mystery" passes. They are compute passes, and
without reverse engineering the compute shaders and tracing the data flow
forward and backward I can't tell what they do. I mostly ignored them.

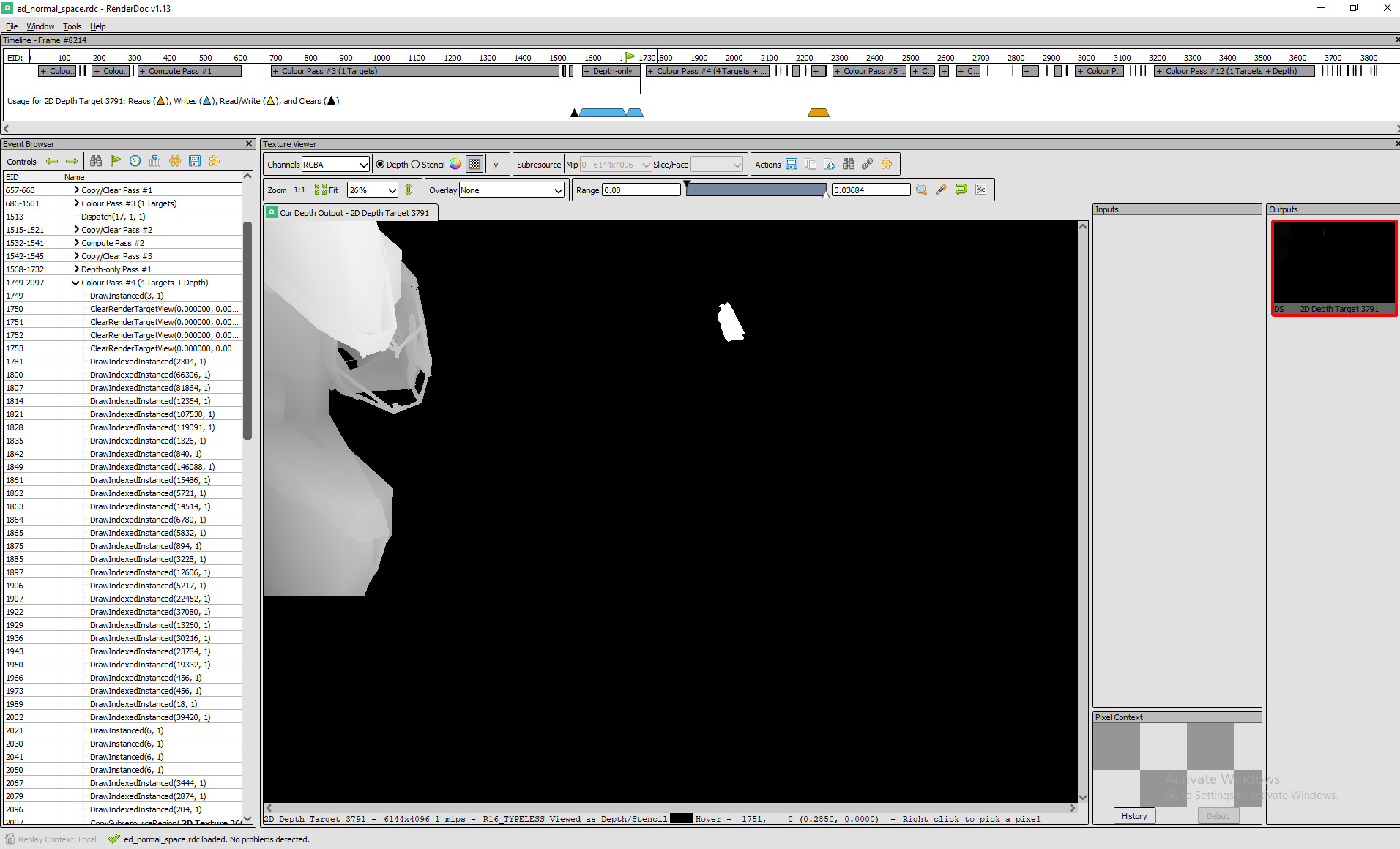

Step 6: Here the shadow map cascade is generated, i.e. the ship is
rendered from the direction of the primary light source, each pixel
encoding the distance of that particular visible surface of the ship to
the light source. This information is used in a later step to calculate
the shadows.
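The principle behind a shadow map can be sketched in a few lines of Python. This is a toy model, not the game's implementation: it uses a single one-dimensional map instead of a cascade, and the function names, the sample points, and the bias value are made up for illustration.

```python
import math


def build_shadow_map(points, size):
    """Store, per texel, the distance of the surface closest to the light.

    `points` is an iterable of (texel_index, distance_to_light) pairs, as
    seen from the light's point of view.
    """
    shadow_map = [math.inf] * size
    for texel, distance in points:
        shadow_map[texel] = min(shadow_map[texel], distance)
    return shadow_map


def in_shadow(shadow_map, texel, distance, bias=1e-3):
    """A point is shadowed if something closer to the light covers its texel."""
    return distance > shadow_map[texel] + bias


# Two surfaces fall onto texel 0; only the nearer one is lit.
scene = [(0, 2.0), (0, 5.0), (1, 3.0)]
shadow_map = build_shadow_map(scene, size=3)
print(in_shadow(shadow_map, 0, 5.0))  # surface behind the occluder
print(in_shadow(shadow_map, 0, 2.0))  # the occluder itself
```

The small bias keeps a surface from shadowing itself due to rounding, which is the usual reason such a term appears in shadow map lookups.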

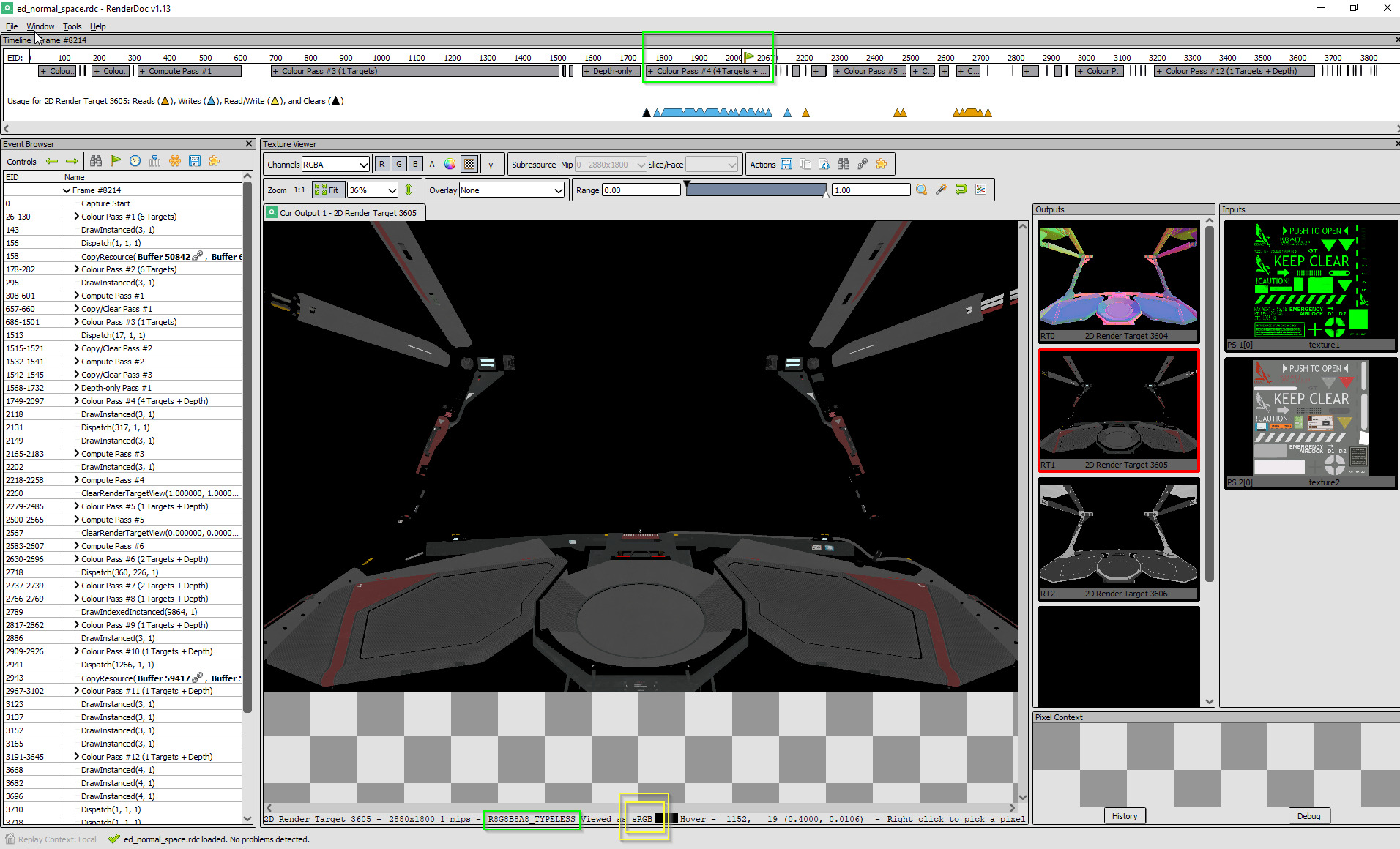

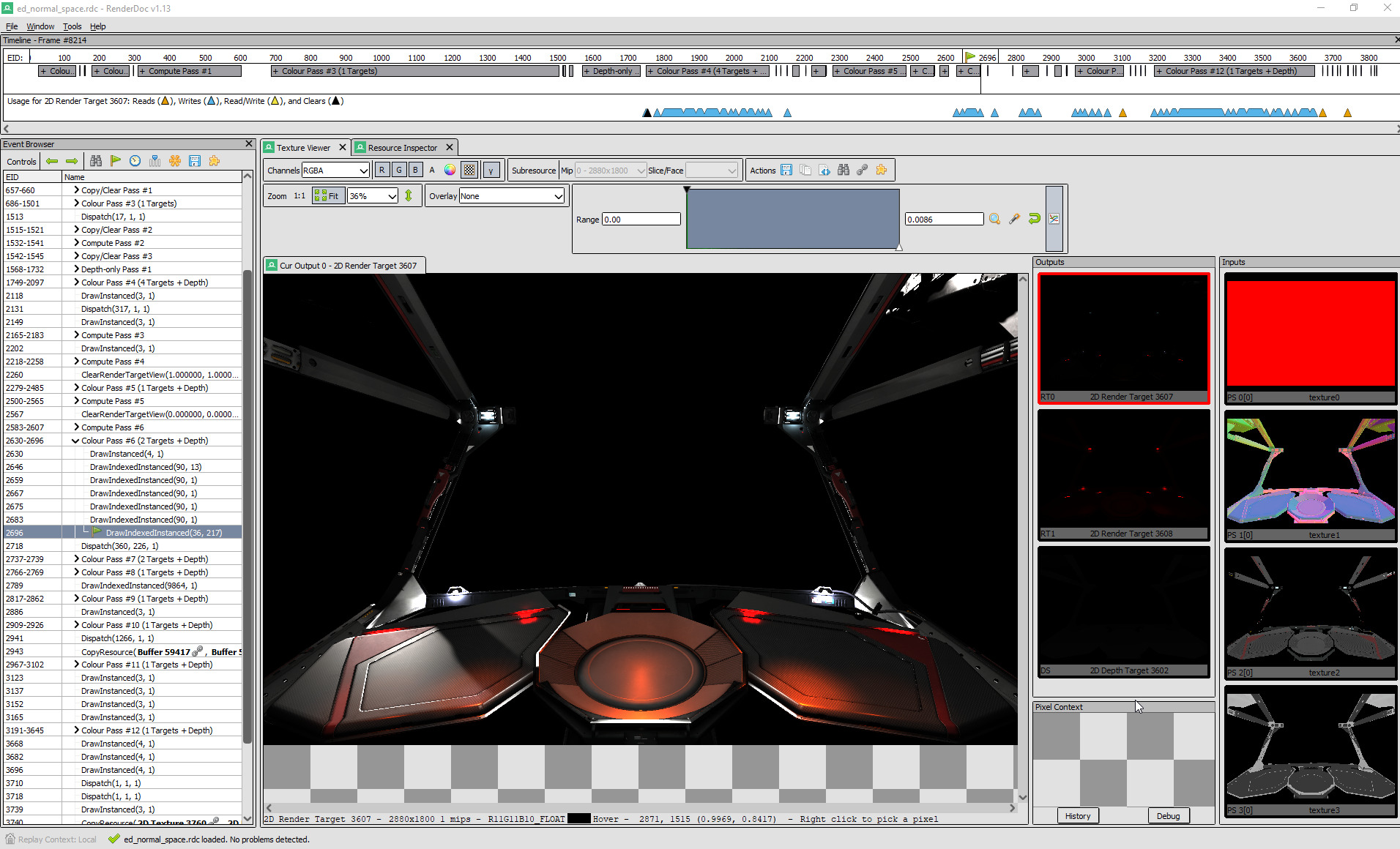

Step 7: This is a deferred rendering G-buffer preparation pass.
Essentially what's happening is that all the information about the
geometry of the model, together with several "optical" properties (color,
glossiness, surface structure), is encoded into pixels of several render
targets (i.e. images). These intermediaries do not contribute to the final
image directly, but are used as ingredients for the actual image
generation. Of particular note here is the image view format with which
the diffuse component render buffer has been configured: it has been set
to sRGB, which means that rendering of this intermediary ought to happen
in linear RGB, while the values stored in this buffer are translated into
the sRGB color space (to a close approximation, sRGB is linear RGB with a
gamma of 2.2 applied; strictly speaking there's a linear segment for small
values, continued smoothly by a power curve with an exponent of 2.4 for
larger values). This is something that has the potential to cause trouble
in deferred renderers, but I am under the impression that it's fine here.
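For reference, the sRGB transfer function mentioned here can be written out as follows. The constants are the ones from the sRGB standard; the function names are my own, and the code works on a single channel value in the range 0 to 1.

```python
def srgb_encode(linear):
    """Linear light -> sRGB-encoded value (what an sRGB render target stores)."""
    if linear <= 0.0031308:
        return 12.92 * linear                      # linear segment near black
    return 1.055 * linear ** (1.0 / 2.4) - 0.055   # power segment


def srgb_decode(encoded):
    """sRGB-encoded value -> linear light (what a shader sees when sampling)."""
    if encoded <= 0.04045:
        return encoded / 12.92
    return ((encoded + 0.055) / 1.055) ** 2.4


# Compare the exact curve with the plain gamma 2.2 approximation.
for value in (0.001, 0.18, 0.5, 1.0):
    exact = srgb_encode(value)
    approx = value ** (1.0 / 2.2)
    print(f"linear {value:5.3f}  sRGB {exact:.4f}  gamma 2.2 {approx:.4f}")
```

With an sRGB view format the GPU applies the encode step on write and the decode step on read, so shader math stays in linear RGB as long as every pass accesses the buffer through a matching view.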

Steps 8 & 9: These steps essentially perform a copy of the depth buffer
and the G-buffer into new render targets (presumably to resolve their
framebuffers).

Step 10: Calculate Screen Space Ambient Occlusion (SSAO)

Step 11: SSAO is combined with gloss

Steps 12 & 13: Shadow mapping and shadow blurring

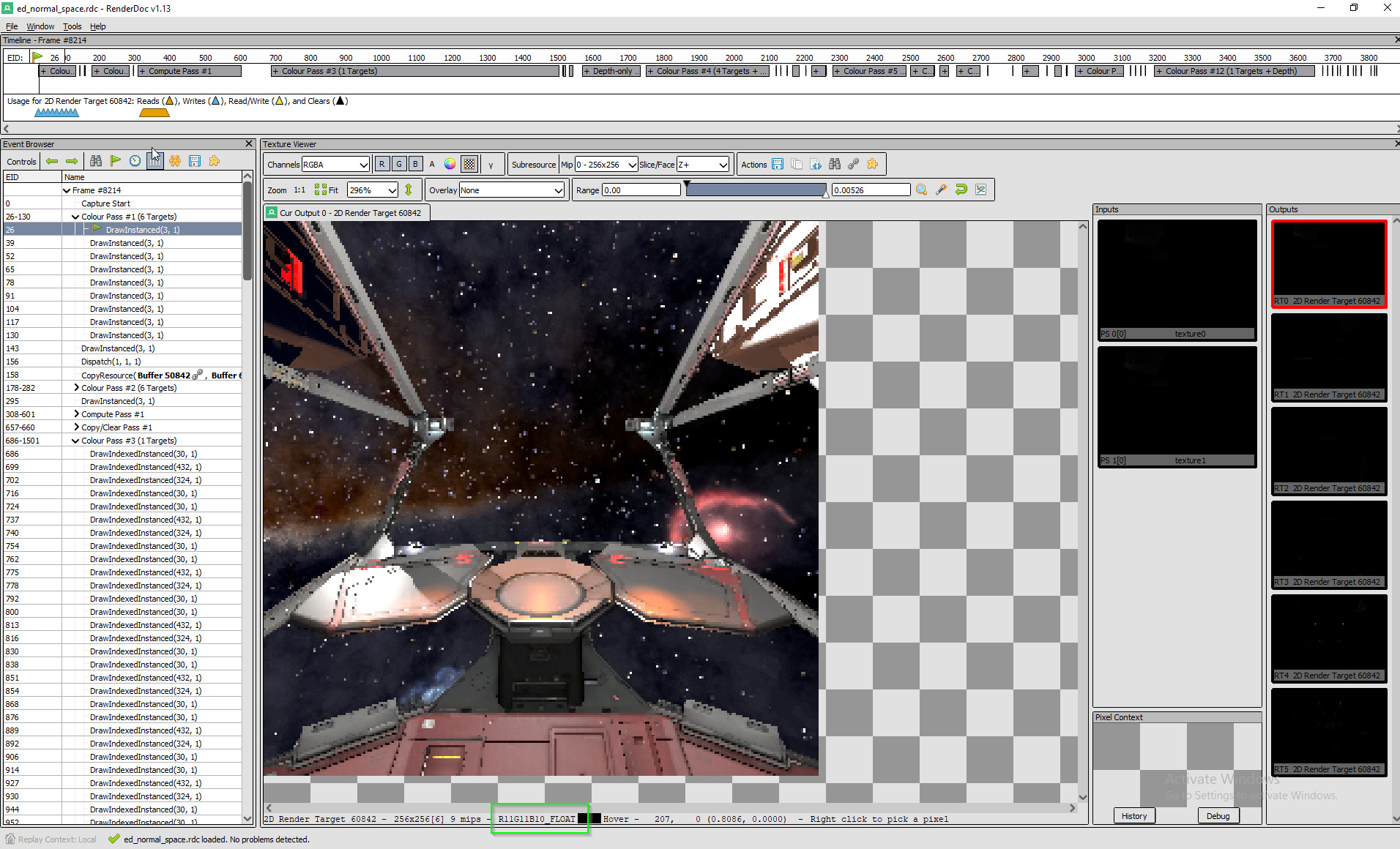

Steps 14 & 15: Deferred Lighting and Ambient. At the end of these steps
the image of the cockpit geometry is finished and ready for composition
with the rest of the scene. Take note that I had gamma toning enabled for
this display, and as you can see, it looks correct.

This is a crucial moment to take note of the following: The intermediary
image of the cockpit geometry is rendered to an HDR render target, and the
contents of this target are linear RGB. Or in other words: Everything is
fine up to this point (except for that weird "what" pass).

Step 16: This prepares the view of the ship in the sensor display.
