What fps is acceptable?

- Thread starter Ackoman

30fps is fine but there is a difference between that and say 60fps. Here's a little demo, I recommend randomly clicking the button and then guessing what it's running at without looking at toggle.

I have just done that, to me the 30fps appear to spin faster.

nice demo

Anything over 30 makes the game at least playable, 50 to 60 and you have a great experience, and anything over that and you have nothing to worry about. I'm running between 60 and 75 in most areas and up to 125 in other areas, so I'm more than happy, and I'm on hardware that's now at the limit of Odyssey's specs.

If you want to get to the point where a higher frame rate has no utility, it's well in excess of one-thousand. Contrast permitting we can detect visual stimuli of vanishingly short duration, identify even complex silhouettes that we see for less than a hundredth of a second, and glean information on motion and trajectory from even shorter duration.

Fighter pilots cap out at around 250. People being able to discern things at well over 1000 seems unlikely.

I'm looking for 60hz in even the worst circumstances, and had that in Horizons at 4k. I always want more (and that's on me & the hardware I can afford or justify buying).

I used to play on a 144Hz 1080p monitor & the reduction in input lag & general responsiveness over 60Hz was detectable & worthwhile to me, but that's more about the inputs & responsiveness than the display itself. 45 or so fps is an acceptable minimum for display smoothness but flickering at that rate (while laser mining for example) is not, hence wanting 60fps at minimum.

There's a lot of snobbery around framerates and it really depends on the type of game and situation. In a 3D shooter, below 60fps is not a good experience imo. In a competitive shooter, higher framerates do slightly improve your competitiveness and reaction times, but your mileage may vary. If I'm playing a game like Battlefield, then ideally I'm aiming for >100fps or as close to my refresh rate as possible (155hz). In a single-player FPS, I'm generally happy with >60fps.

60fps is an industry standard for PC. Anything below that for extended periods of time is bad. If you drop out of SC and get a 1s drop to 55fps that is acceptable but not great.

30fps is a bare minimum for PC. Anything below that and the low frame rate really hampers your gameplay.

So, with the minimum hardware requirements, you should get a steady 30fps. With the recommended requirements you should get 60fps.

Higher frame rates should be achievable with better hardware or lower graphics settings but that's not the point here.

First try today and I'm baffled. 40 in stations now at ultra 3440x1440.

But the PC can't handle it for longer than 5 minutes and crashes.

Check your CPU & GPU temps, overheating components in your PC would explain that.

On planet surfaces I'd be happy if I got 40 FPS +/- 5 on slightly reduced settings. I already reduced supersampling to 1.0 instead of 1.5 but that didn't do anything.

I had 80-100 FPS on planet surfaces before....

Check your CPU & GPU temps, overheating components in your PC would explain that.

Yeah, CPU max 65, GPU max 80 from the GPU-Z log. I rebuild my PC every year with new Noctua paste and have all Noctua fans, so there are no improvements to be made.

Fighter pilots cap out at around 250.

Cap out in what? The frame rate at which motion becomes perceptibly smooth? The frame rate at which they stop being able to consciously tell that frame rate is increasing? That they stop being able to distinguish single frames of aircraft silhouettes?

I can personally identify shapes that are on screen for one frame per second on 300Hz strobed (no image persistence) displays, so I doubt it's the latter.

People being able to discern things at well over 1000 seems unlikely.

Can you see a camera flash on low? That's typically in the ballpark of 1/20000th of a second in duration.

For motion blur to be imperceptible on sample and hold displays, you need to approach 1kHz (fps).

For virtually everyone to find strobing artifacts acceptable, well over 1kHz is needed and to make them completely undetectable, even unconsciously, you need to approach 10kHz. This is the effective floor for higher frame rate having zero practical benefit.
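The sample-and-hold point above can be put into rough numbers. This is only a sketch of the usual rule of thumb (each frame stays lit for the whole frame time, so an object your eye is tracking smears across roughly speed times frame time). The function name `blur_px` and the 1000 px/s pan speed are made up for illustration, and it assumes no strobing and a frame rate equal to the refresh rate.

```python
def blur_px(speed_px_per_s: float, fps: float) -> float:
    """Approximate smear width, in pixels, of an eye-tracked object
    on a full-persistence (sample-and-hold) display."""
    persistence_s = 1.0 / fps  # each frame is held for the whole frame time
    return speed_px_per_s * persistence_s


if __name__ == "__main__":
    pan_speed = 1000.0  # assumed pan speed in pixels per second (illustrative)
    for fps in (60, 144, 240, 1000):
        print(f"{fps:>5} fps: ~{blur_px(pan_speed, fps):.1f} px of blur")
```

Under those assumptions the smear only gets down to about one pixel at around 1000 fps, which is where the "approach 1kHz" figure comes from.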

Some references:

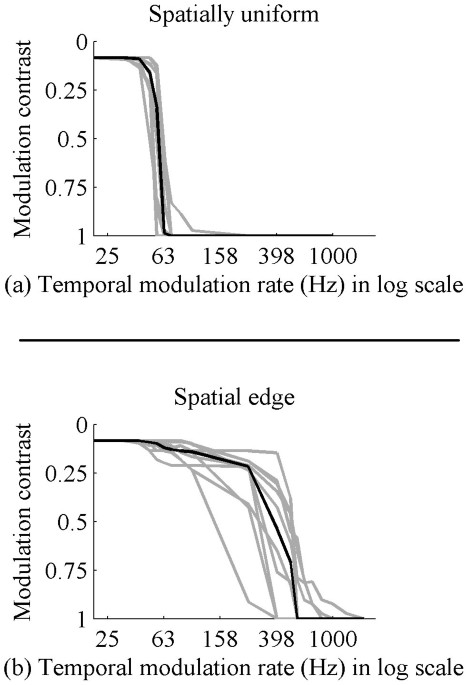

Humans perceive flicker artifacts at 500 Hz - Scientific Reports

Humans perceive a stable average intensity image without flicker artifacts when a television or monitor updates at a sufficiently fast rate. This rate, known as the critical flicker fusion rate, has been studied for both spatially uniform lights and spatio-temporal displays. These studies have...

Blur Busters Law: The Amazing Journey To Future 1000Hz Displays - Blur Busters

A Blur Busters Holiday 2017 Special Feature by Mark D. Rejhon 2020 Update: NVIDIA and ASUS now has a long-term road map to 1000 Hz displays by the 2030s! Contacts at NVIDIA and vendors has confirmed this article is scientifically accurate. Before I Explain Blur Busters Law for Display Motion...

I think it's not just a matter of numbers. I was surprised when I looked at my FPS meter, because I was sure the frame rate was much lower than that.

In my experience, something in Odyssey messes up the on-foot gameplay. I've played many games at the same 60fps that looked much smoother; the frame rate feels lower than what the counter shows. This game was just rushed and was not ready for release, that's the sad truth.

Yeah, to save film, and the projector's (projectionist's?) arm (they were originally hand-cranked). It then became a standard because it was just good enough, mostly because of motion blur.

It's also continued to have a place as it's really good at concealing the dodginess of some of the vfx. From stop-motion animation to modern CG work. Look at the Hobbit films for why you don't combine high frame rates with lots of computer generated foreground characters.

That they stop being able to distinguish single frames of aircraft silhouettes?

Yes, exactly that, in the source I found quoted.

Can you see a camera flash on low? That's typically in the ballpark of 1/20000th of a second in duration.

Nope, average photographic flash is 1ms, 20 times longer. It oversaturates the cells in our eyes to the extent that we continue to 'see' it for literal seconds after, if viewed directly, and smaller durations when reflected by the objects in view.

In any case, a super-high contrast in light levels is very different from a similarly-bright moving image. We can detect flashes of much shorter duration than we can perceive movement, because the difference in the scene is far greater.
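For anyone who wants to check the arithmetic in this exchange, here is a small sketch. It takes the two flash durations exactly as the posters stated them (1/20000 s and 1 ms) without vouching for either, and the helper name `frames_spanned` is made up for illustration.

```python
def frames_spanned(event_s: float, fps: float) -> float:
    """How many frame times an event of the given duration covers."""
    return event_s * fps


# Durations as claimed in the posts above, not independently verified.
low_power_flash_s = 1 / 20000  # 50 microseconds
typical_flash_s = 1e-3         # 1 millisecond

ratio = typical_flash_s / low_power_flash_s

if __name__ == "__main__":
    print(f"1 ms is {ratio:.0f}x longer than 1/20000 s")
    for fps in (60, 250, 1000):
        print(f"{fps:>5} fps: the short flash covers "
              f"{frames_spanned(low_power_flash_s, fps):.4f} of a frame")
```

The "20 times longer" figure checks out as arithmetic. Whether being able to see such a flash says anything about perceiving motion is the actual disagreement, and the numbers don't settle that.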

Everything I hear from the fps fetishists reminds me of audiophiles.

Everyone's a little different on this, so it's hard to know what's acceptable for you until you test it and find out for yourself.

I did a test about a year ago where I tried capping games to different frame rates to see where they started looking 'smooth' - where I couldn't detect any stepping or judder when turning or moving forward in a scene. Used a few different games - a racer, an FPS and an RPG. I have a 144hz freesync panel (freesync range 30-144hz)

For me the threshold is a little over 90fps - below that I can see that the movement isn't smooth. There's a small difference from ~95 to 144hz for me, but it's only really visible in FPS titles when I'm snapping from side to side very quickly, which doesn't happen in other genres.

For Odyssey I'm getting low 40s in larger planetary ports, 60-ish in the smaller ones, and on foot in a station it varies from 60-ish to 90-ish depending on which way I look. It's playable, it's just not visually smooth to me is all.

TL;DR — I'll take a stable 25fps anytime, over the constant drops from 60-20 that I had in the alpha.
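One way to see why a steady 25fps can feel better than swings between 60 and 20 is to look at frame times rather than frame rates. The sketch below uses invented sample values, not measurements from the game, and the helper names are hypothetical.

```python
from statistics import mean, pstdev


def frame_times_ms(fps_samples):
    """Convert instantaneous fps readings to per-frame times in milliseconds."""
    return [1000.0 / fps for fps in fps_samples]


def summarize(label, fps_samples):
    times = frame_times_ms(fps_samples)
    print(f"{label}: mean {mean(times):.1f} ms, "
          f"worst {max(times):.1f} ms, spread {pstdev(times):.1f} ms")


# Illustrative readings only (assumed, not logged from Odyssey).
steady = [25] * 6
swinging = [60, 45, 20, 60, 30, 20]

if __name__ == "__main__":
    summarize("steady 25fps", steady)
    summarize("60-20 swings", swinging)
```

With these made-up readings the swinging run has the shorter average frame time, but its worst frames take 50 ms against a constant 40 ms, and that uneven pacing is what shows up as stutter.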

Acceptable as in "I can or can not play", or are we talking about when drops are noticeable?

I don't know my minimum playable tolerance with modern games, but I used to play a fast-paced PvP first/3rd person shooter at 15-20fps back in 2004. It was bad, but objectively speaking not the "LITERALLY UNPLAYABLE" meme.

My list goes (within a plus or minus 6-8fps temporary drop margin):

30 is acceptable

45 is nice

60 is good

120 is very good

Higher is omg-amazing!!111

My monitor is an ultra-wide 3840x1080 capable of 144Hz. But I mostly keep it locked at 60fps in games to keep recording filesizes low.

Besides, high frame rates are not gonna change my slow reaction time to a bullet that I saw come flying towards my head at frame 22 and that hits me a few frames later.
